Final Update (11/1/14): The drivers and bundle in question work with newer Flex-10 interconnect firmware. My configuration was outside the blessed recipe of firmware and driver versions, and I had problems as a result. Based on feedback from HP and from the community on Twitter and by email, I can safely say that if you are running within an HP recipe, you should not experience this issue. If you are out of recipe compliance and running Virtual Connect Flex-10 4.10 firmware and Onboard Administrator 4.12 firmware with the September HP ESXi bundle, expect problems with ESXi 5.1; ESXi 5.5 runs without issues in that combination. Downgrading to the June bundle seems to solve the problem on ESXi 5.1.

As a rule, stay within HP’s published recipes from vibsdepot.hp.com and you will avoid most problems.

HP released a new set of VIBs with drivers and HP software to run inside ESXi last week. As part of a support case for a hardware issue, the updated driver bundle was installed on two BL460c Gen8 blades with Emulex converged network adapters, and it caused problems with the NICs. Working with VMware support, I downgraded the driver to the previous version I had been running, and the problem disappeared. This occurred with VMware ESXi 5.1 Update 2 as the operating system; I am not clear whether it affects 5.5 versions. The affected adapters are HP FlexFabric Emulex 10Gb 554FLB.

Symptoms

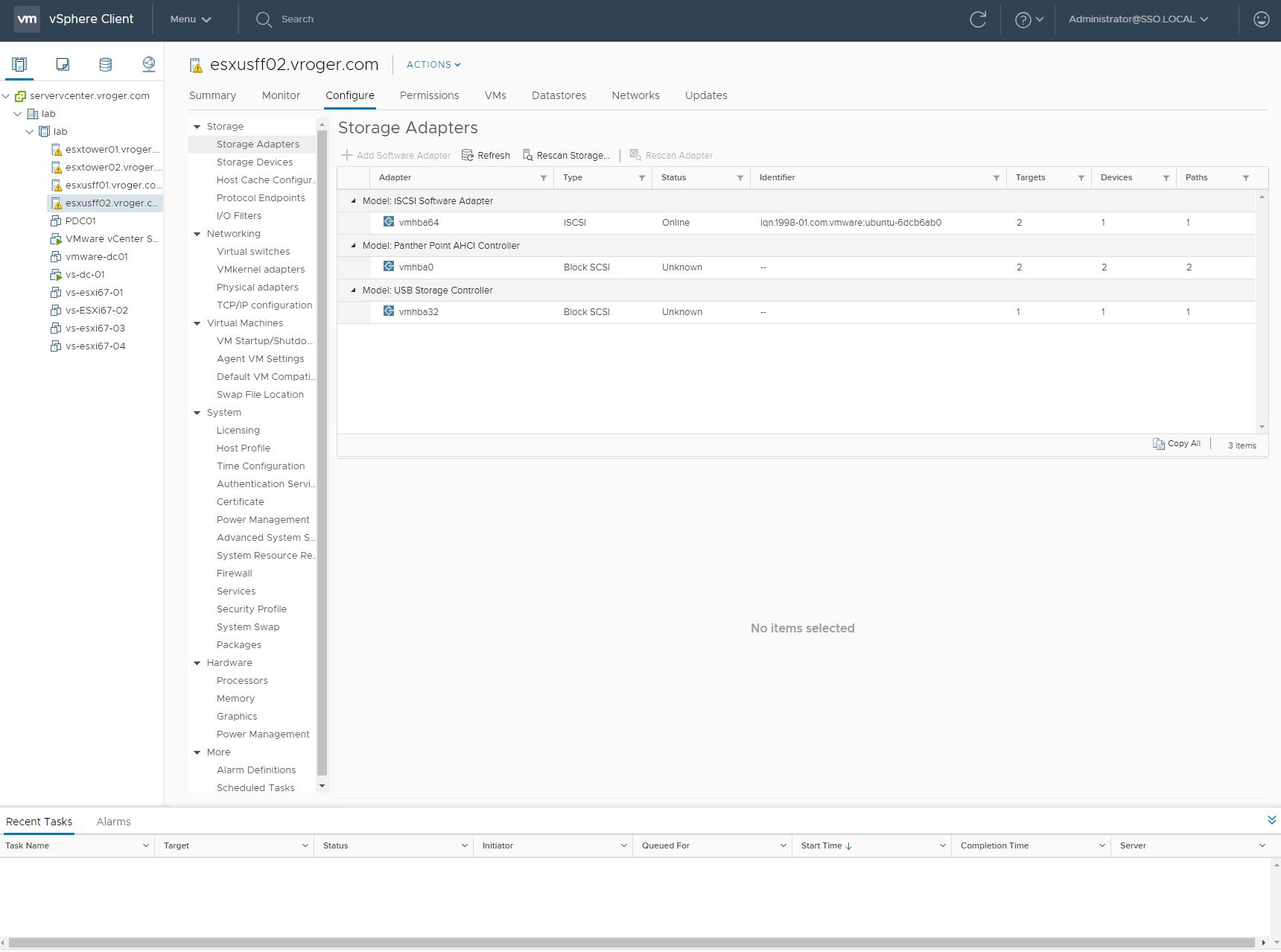

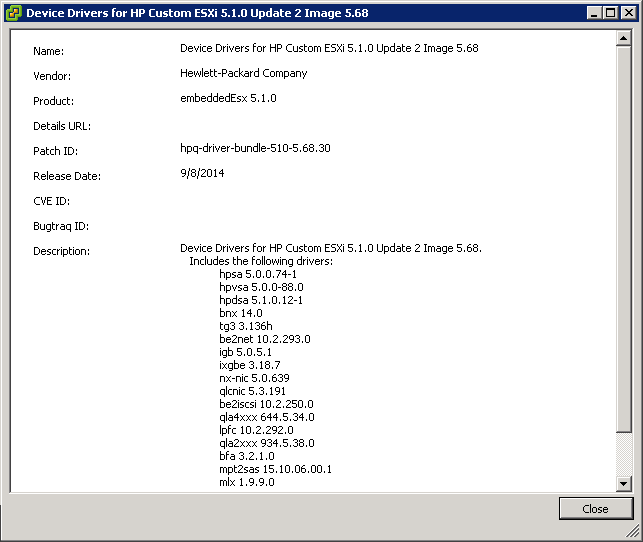

After successfully installing the Device Drivers for HP Custom ESXi 5.1.0 Update 2 Image 5.68, the system comes back online and appears to be fine. The first symptoms appear when vMotioning virtual machines onto the host: the host goes offline in vCenter, and the virtual machines that had already moved are also unreachable over the network. No network traffic reaches the host for management, virtual machines or vMotion. In the ESXi Shell, the system is very sluggish and unresponsive. While examining vmkernel.log, VMware support found NIC disconnects recorded by ESXi.
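To look for similar NIC disconnects on your own hosts, a minimal sketch like the one below can filter vmkernel.log from the ESXi Shell. It assumes the disconnects appear as lines mentioning a vmnic followed by the word "down"; that pattern is my guess, not the exact message VMware pointed to, so adjust it to what your log actually contains. The function name find_nic_drops is hypothetical.

```shell
# Hypothetical helper: print vmkernel.log lines that mention a vmnic going down.
# ASSUMPTION: the 'vmnic.*down' pattern is a guess at the disconnect message format.
find_nic_drops() {
    log="${1:-/var/log/vmkernel.log}"   # default ESXi vmkernel log location
    if [ ! -r "$log" ]; then
        echo "cannot read $log" >&2
        return 1
    fi
    # Case-insensitive match on lines containing "vmnic" and, later, "down"
    grep -i 'vmnic.*down' "$log"
}
```

Run it with no argument on the host, or point it at a copy of the log, for example `find_nic_drops /tmp/vmkernel-copy.log`.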

When I tried to recreate the issue after a reboot, the host stayed online as long as it remained in maintenance mode with no virtual machines. Soon after one or more virtual machines are relocated to the host, the system goes unresponsive in vCenter and the virtual machines go offline. I repeated this several times on both affected hosts.

On ESXi 5.1, the Emulex NIC driver that appears to be causing the issue is be2net version 10.2.293.0. This version was released with the 5.68 Device Drivers for HP Custom ESXi on September 9, 2014. Before loading this device driver bundle, I was running the previous bundle, from June 2014, with no issues. The be2net driver listed as current on vibsdepot.hp.com for June 2014 is version 4.9.288.0, first included in the February 2014 bundle.
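Before and after changing drivers, it helps to confirm which be2net VIB is actually installed. `esxcli software vib list` prints one line per VIB, with the name in the first column and the version in the second; the sketch below reads that output and reports whether the 10.2.293.0 driver from the September bundle or the 4.9.288.0 driver from the June bundle is present. The VIB name net-be2net is taken from the June file name in the workaround; the function name classify_be2net and its exit codes are my own.

```shell
# Hypothetical helper: read `esxcli software vib list` output on stdin and
# report the installed net-be2net version.
# Exit status: 0 = June 2014 driver (4.9.288.0), 1 = net-be2net not found,
#              2 = September 2014 driver (10.2.293.0), 3 = any other version.
classify_be2net() {
    # Column 1 is the VIB name, column 2 its version; keep the first match only
    version=$(awk '$1 == "net-be2net" && !found { print $2; found = 1 }')
    case "$version" in
        "")          echo "net-be2net VIB not found"; return 1 ;;
        10.2.293.0*) echo "net-be2net $version (September 2014 bundle, suspect)"; return 2 ;;
        4.9.288.0*)  echo "net-be2net $version (June 2014 bundle)"; return 0 ;;
        *)           echo "net-be2net $version (other version)"; return 3 ;;
    esac
}
```

On the host, run `esxcli software vib list | classify_be2net`.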

Workaround

The workaround I implemented with VMware was to downgrade to the be2net driver version from the June 2014 bundle. You can find it on the HP VibsDepot website, at http://vibsdepot.hp.com/hpq/jun2014/esxi-510-devicedrivers/ or http://vibsdepot.hp.com/hpq/jun2014/esxi-510-drv-vibs/be2net/, and install it with esxcli from the ESXi command line, either in the ESXi Shell or over SSH. If you SFTP the VIB file into /tmp on the host, you can install it with the following command and then reboot the host so the older driver loads.

[code]esxcli software vib install -v /tmp/Emulex_bootbank_net-be2net_4.9.288.0-1OEM.510.0.0.802205.vib[/code]
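If you have several blades to fix, the steps can be wrapped in a small script. The sketch below assumes the June VIB has already been copied to /tmp; it enters maintenance mode, installs the older driver and reboots. It defaults to a dry run that only prints the commands. The script structure, the DRY_RUN and VIB_PATH variables and the reboot reason text are my own; the esxcli commands are standard ESXi 5.x syntax. Move or power off the virtual machines first, because entering maintenance mode from esxcli will not migrate them for you.

```shell
# Sketch: downgrade net-be2net to the June 2014 VIB on one host.
# DRY_RUN=1 (the default) only prints each command; set DRY_RUN=0 to execute.
VIB_PATH="${VIB_PATH:-/tmp/Emulex_bootbank_net-be2net_4.9.288.0-1OEM.510.0.0.802205.vib}"

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"        # print the command words instead of running them
    else
        "$@"
    fi
}

downgrade_be2net() {
    # esxcli needs an absolute path to a VIB file that exists on the host
    if [ "${DRY_RUN:-1}" != "1" ] && [ ! -f "$VIB_PATH" ]; then
        echo "VIB not found: $VIB_PATH" >&2
        return 1
    fi
    run esxcli system maintenanceMode set --enable true || return 1
    # "vib install" (unlike "vib update") allows replacing a newer VIB with an older one
    run esxcli software vib install -v "$VIB_PATH" || return 1
    # The older driver is only loaded after a reboot
    run esxcli system shutdown reboot --reason "be2net downgrade to 4.9.288.0"
}
```

Run `downgrade_be2net` once to review the printed commands, then `DRY_RUN=0 downgrade_be2net` to apply them.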

Followup

I tweeted about the potential problem last week and was waiting to hear back from HP Support before writing it up, but I didn’t want to wait any longer. If you use HP Custom ESXi and keep it up to date, this is something to pay attention to. It seems specific to the Emulex converged network adapters and affects at least ESXi 5.1 Update 2.

I have an ongoing case with HP and as I find out more or see an advisory, I will update this post with new information.