DobiMigrate Ensures Data Security and Integrity Objectives Met During Migration; Cuts Months Off Project Timeline

Datadobi, the global leader in unstructured data management software, today announced that the CBX agency has migrated its entire distributed data storage infrastructure with DobiMigrate, its enterprise-class migration software for network-attached storage (NAS) and object data. As a result, CBX now benefits from expanded features and functionality, including enhanced data security and support for cloud tiering.

Recently, the NetApp data storage platform and associated software on which CBX had long relied reached end-of-life. This meant that no additional firmware, patches, or upgrades would be made available, and warranty support would soon end. CBX decided that its best option would be to remain with NetApp and upgrade to the vendor’s newest platform. In doing so, it would not only stay with a brand it trusted but also benefit from increased capabilities and heightened security. With this decision made, the next critical consideration was how best to migrate the NAS data from the existing storage environments to the new one. The migration would involve the entirety of CBX’s client and internal business production data, housed across two geographically dispersed locations in Minneapolis and New York City.

CBX reached out to its technology vendor, SHI, for a recommendation on how best to proceed. SHI highly recommended Datadobi and its DobiMigrate enterprise-class migration software for NAS and object data.

“SHI assured us that DobiMigrate could be trusted to deliver in the most complex and demanding environments, while ensuring that we meet our data security and integrity objectives for migration to our new storage,” said Don Piraino, IT Manager, CBX. “The fact that Datadobi is also vendor-agnostic and has the ability to move data from any vendor’s platform to another heterogeneous platform on-prem, remote, or in the cloud added to my comfort level. It is nice knowing that I am not locked into any one vendor. But more importantly for me, this spoke to Datadobi’s industry-unique experience managing diverse migrations across many different platforms and environments.”

“We couldn’t allow even a moment of downtime or loss of data availability of any kind. The migration involved every single piece of CBX data – from client to CBX business data, spread across two geographically dispersed locations,” Piraino explained. “Typically, we use NetApp SnapMirror, but we knew it wasn’t the answer for this level of a migration. With DobiMigrate, we finished in weeks, rather than in months, with not even one hiccup.”

When asked what advice Piraino would offer others contemplating an enterprise-class data migration, he replied, “We had a Datadobi Implementation Specialist dedicated to ensuring the success of our migration initiative… Having that level of expertise was invaluable. Nothing happened without Datadobi making sure it was being done correctly. It was such a load off my mind.”

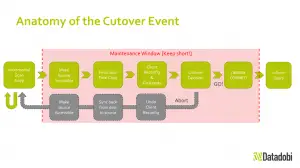

Piraino concluded, “The final cutover was really smooth – with absolutely zero impact on any of our team’s work. We completed it on a Friday evening. And on Saturday morning, everything was up and running. When you, your internal users, and your boss feel zero pain, that’s always a good thing.”

To read the CBX case study in its entirety, please visit: https://datadobi.com/case-studies/.


Datadobi Offers Ultimate Unstructured Data Migration Flexibility with S3-to-S3 Object Migration Support

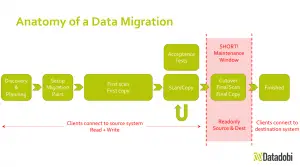

I was lucky enough to sit in on a product demonstration of DobiMigrate last week and was thoroughly impressed by the thought and attention to detail that go into it: every migration is planned to the highest degree to minimise the service window, with rollback procedures in place.

Now, with added S3 support, Datadobi offers a truly cross-platform data migration solution that is easy to recommend if you want to know your data is safe.

Their press release follows.

DobiMigrate 5.9 provides the ability to migrate or reorganize file and object storage data to the optimal accessible storage service

LEUVEN, Belgium – June 2, 2020 – Datadobi, the global leader in unstructured data migration software, today announced DobiMigrate 5.9 with added support for S3-to-S3 object migrations. The addition of S3-to-S3 object migrations offers the most flexible and comprehensive software suite for migration of unstructured data. Customers can move data in any direction between on-prem systems of different vendors, cloud storage services, or a mixture of both. All objects and their metadata are synchronized from the source buckets to the target buckets.
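The press release does not say how DobiMigrate decides what to copy. As a rough illustration only, the Python sketch below shows one common way a one-way sync can be planned: compare the source and target listings key by key and flag objects whose content fingerprint (ETag) or user metadata differ. The `ObjectInfo` type and `plan_sync` function are hypothetical, the listings are plain dictionaries rather than real S3 API calls, and comparing ETags assumes both sides compute them the same way, which is not guaranteed across vendors or for multipart uploads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectInfo:
    etag: str                              # content fingerprint reported by the store (assumed comparable)
    metadata: tuple[tuple[str, str], ...]  # user metadata as sorted (key, value) pairs


def plan_sync(source: dict[str, ObjectInfo],
              target: dict[str, ObjectInfo]) -> dict[str, list[str]]:
    """Plan a one-way sync so the target bucket mirrors the source bucket."""
    return {
        # present in source, missing on target
        "copy": sorted(k for k in source if k not in target),
        # present on both sides, but content or metadata differs
        "update": sorted(k for k in source if k in target and source[k] != target[k]),
        # present only on target
        "delete": sorted(k for k in target if k not in source),
    }


# Hypothetical bucket listings keyed by object key.
src = {"a.txt": ObjectInfo("e1", (("owner", "ops"),)), "b.txt": ObjectInfo("e2", ())}
dst = {"b.txt": ObjectInfo("e2", (("owner", "old"),)), "c.txt": ObjectInfo("e3", ())}
print(plan_sync(src, dst))
# {'copy': ['a.txt'], 'update': ['b.txt'], 'delete': ['c.txt']}
```

Note that `b.txt` is flagged for update even though its content is unchanged, because its metadata differs. Whether objects that exist only on the target should actually be deleted is a policy decision; a cautious migration tool might report them instead.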

Together with existing support for all NAS protocols, DobiMigrate customers can now benefit from the ultimate flexibility to migrate or reorganize their file and object storage data.

“We have always been a company that can manage our customers’ most difficult migration projects and have been involved in a number of different types of object migration projects over the years,” said Carl D’Halluin, Chief Technology Officer, Datadobi. “In this release, we are incorporating everything we have learned about S3 object migrations into our DobiMigrate product, making it available to everyone. We have tested it with the major S3 platforms including AWS to enable our customers’ journey to and from the cloud.”

The ability to move unstructured data whenever needed allows customers to continuously optimize their storage deployments and data layout. This keeps data on the optimal accessible medium needed at any moment, enables users to decommission ageing hardware, and offers the freedom to reconsider which data belongs on premises and which data better lives in cloud services.

With its unrivalled knowledge of cross-vendor storage compatibility, Datadobi has qualified migrations for an ever-expanding list of storage protocols, vendor products, and storage services, including S3 object storage, SMB and NFS NAS storage, and Content Addressed Storage (CAS). The company is also a partner of most of the major storage vendors.

“The exponential growth in the creation, storage, and use of unstructured data can create serious migration bottlenecks for many organizations,” commented Jed Scaramella, Program Director for IDC Infrastructure Services. “Migration services that leverage automation will mitigate risk, accelerate the process, and provide insurance that data transfers will proceed without interruptions.”

About Datadobi

Datadobi, a global leader in data management and storage software solutions, brings order to unstructured storage environments so that the enterprise can realize the value of their expanding universe of data. Their software allows customers to migrate and protect data while discovering insights and putting them to work for their business. Datadobi takes the pain and risk out of the data storage process, and does it ten times faster than other solutions at the best economic cost point. Founded in 2010, Datadobi is a privately held company headquartered in Leuven, Belgium, with subsidiaries in New York, Melbourne, Dusseldorf, and London.

For more information, visit www.datadobi.com, and follow us on Twitter and LinkedIn.

Monitoring and management software company SolarWinds has announced the release of Network Performance Monitor (NPM) 12, which includes the most significant updates in the product’s history. Originally known as Orion, NPM is a comprehensive network monitoring and management tool. The new release introduces two major features: NetPath and Network Insight.

NetPath visually maps hybrid network paths. It gives IT pros the ability to track down and pinpoint performance issues in their environments, including in cloud-based applications. The feature can diagnose whether an issue is occurring on the internal network, with the WAN provider, or within the cloud application’s own network. This kind of feedback should help network administrators resolve complicated network problems faster.

Marketing materials for NPM 12 list the following six features of NetPath:

- Dynamic and visual hop-by-hop analysis of critical paths and devices along the entire network delivery path—on-premises, in the cloud or across hybrid IT environments.

- Specific, actionable information to resolve network issues regardless of network ownership.

- Automatic and dynamic thresholds to identify unhealthy critical paths and network nodes, providing an estimated 50 percent faster time to resolution.

- Visualized critical path performance over time for historical views of network latency.

- Identification of device configuration changes along critical paths when integrated with SolarWinds Network Configuration Manager.

- Immediate insight into the traffic travelling across flow-enabled devices impacting network performance when integrated with SolarWinds NetFlow Traffic Analyzer.
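The announcement does not explain how NetPath’s automatic and dynamic thresholds are calculated. One common approach, shown here as a Python sketch under that assumption, is to learn a per-hop baseline from recent latency samples and flag a hop when its latest sample exceeds the mean plus k standard deviations. The hop names, sample values, and the choice of k = 3 are all hypothetical.

```python
from statistics import mean, stdev


def dynamic_threshold(history: list[float], k: float = 3.0) -> float:
    """Threshold learned from recent samples: mean + k * sample standard deviation."""
    if len(history) < 2:
        raise ValueError("need at least two samples to estimate variation")
    return mean(history) + k * stdev(history)


def unhealthy_hops(latest: dict[str, float], baselines: dict[str, list[float]],
                   k: float = 3.0) -> list[str]:
    """Hops whose latest latency (ms) exceeds the threshold learned from their own history."""
    return [hop for hop, ms in latest.items() if ms > dynamic_threshold(baselines[hop], k)]


# Hypothetical latency history (ms) for three hops on one path.
baselines = {
    "lan-gateway": [1.0, 1.2, 0.9, 1.1, 1.0],
    "wan-edge": [20.0, 22.0, 21.0, 19.0, 20.0],
    "cloud-app": [35.0, 36.0, 34.0, 35.0, 37.0],
}
latest = {"lan-gateway": 1.1, "wan-edge": 48.0, "cloud-app": 36.0}
print(unhealthy_hops(latest, baselines))
```

Because each hop is judged against its own history, the same rule adapts to a LAN hop measured in single milliseconds and a cloud hop measured in tens of milliseconds, with no hand-set per-hop limits.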

In addition to NetPath, NPM 12 introduces a feature called Network Insight, which provides in-depth monitoring of load balancers. It is initially available for F5 BIG-IP installations, and SolarWinds expects to support additional load balancing and application delivery platforms in the future.

Network Performance Monitor 12 also includes a refreshed, modernized user interface. All of the additions, including video tutorials, are covered on the SolarWinds website.

These features join a bevy of existing network monitoring capabilities in the product. NPM can manage both wired and wireless networks, with automatic discovery of network devices, monitoring for a long list of known problem conditions, dashboards and summaries, and maps of network locations for multi-site environments. The system also monitors the relationships and links between network devices, watching for error conditions and latency.

Network Performance Monitor 12 is available today at a starting price of $2,895. For more information, see the SolarWinds website.

Self-service IT kiosks and vending machines could improve IT support satisfaction

HP was showing off some of its new ideas for IT support at HP Discover. The goal of these internal initiatives is to improve IT services for HP’s internal customers: its employees.

One of the initiatives was setting up areas in offices, similar to the Genius Bar in an Apple Store, where employees could bring their systems in for help. The idea was shown in video form on the Discover show floor. Known as myITpc within HP, it is meant to keep support technicians in a single place to provide face-to-face support in offices large enough to warrant it.

For locations that did not have enough users, remote access stations with video chat and KVM, along with the vending machines, give employees 24/7 access to the support they need. The video chat allows a centralized help desk to support many more individuals without the need to travel. For systems that won’t even boot, a KVM is attached to allow remote troubleshooting and recovery from critical failures. But sometimes a system is too far gone, and this is where the vending machines come into play.

The IT vending machines offer two things to employees within HP – first, it offers the ability to self-service for common parts that break or need replacement and second, it offers a depot for swapping out hardware and performing repairs.

The IT vending machine resembles a normal snack machine where users can swipe or tap an ID card and put in a code to have a particular part dispensed. Everything is tracked by ID number and a code that is assigned for the transaction.

In addition, there are lockers on the side. If a user’s system has completely failed, they can get a loaner system from a pre-assigned locker and place their broken machine in the locker. This gets the user back to work faster and means fewer pickups and drop-offs at remote locations for technicians, along with less coordination with employees. Employees can come to the machine at their convenience to pick up the repaired system and return the loaner.

At upgrade or end-of-lease time, employees can pick up their new hardware from a locker and transition their data on their own. When they are done with the old system, they return it to a locker for collection and disposal.

Configuring system-wide proxy for .NET web applications, including SharePoint

Several years ago, I wrote about configuring proxy settings so the SharePoint App Store works when running behind a web proxy, and about resolving issues when running over HTTPS/SSL. Today, I want to revisit that solution, since we found problems with it in an enterprise setting. My original solution was to configure web.config on each of the SharePoint virtual sites in IIS, but there is an easier and more flexible way. SharePoint, by design, creates many virtual sites automatically, so manually configuring each web.config is cumbersome and not a great solution.

Instead, .NET 4 is able to use the system proxy settings, which allows the administrator to set (and change) the proxy settings by group policy or manually on the SharePoint web servers. This is preferable and more maintainable than setting manual settings on each IIS virtual site using the web.config file. Since you have set these in the default .NET web.config files, you don’t need to change newly generated web.config files for each new site you create in SharePoint. That becomes painful quickly in an enterprise setting with multiple web front-end servers and multiple sites. It is also easy to miss one here or there.

This method also works with any .NET web application running on a Windows system, so it applies beyond just SharePoint.

Configuring the default .NET proxy settings

To set the default .NET proxy settings, you will need to edit the web.config file in the .NET Framework folders, located at these two paths:

- C:\Windows\Microsoft.NET\Framework\v4.0.30319\Config\web.config

- C:\Windows\Microsoft.NET\Framework64\v4.0.30319\Config\web.config

In each web.config file, you will need to set the defaultProxy settings as shown below.

[code]<!-- Add inside the existing <configuration> element -->
<system.net>
  <defaultProxy>
    <proxy usesystemdefault="true" />
  </defaultProxy>
</system.net>[/code]

Once this is set and you restart your web services, they should now inherit and use the system default proxy settings.
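If you manage several servers, the edit can be scripted. The following is a minimal Python sketch, assuming the root web.config has a plain `<configuration>` root element; `ensure_default_proxy` is a hypothetical helper, not a Microsoft tool. It adds or updates the `system.net/defaultProxy/proxy` element, preserves existing XML comments, and returns the new XML as text so you can review it before writing it back to both the Framework and Framework64 copies. Back up the originals first: new elements are appended without indentation, and any XML declaration at the top of the file is not re-emitted.

```python
import xml.etree.ElementTree as ET


def _child(parent: ET.Element, tag: str) -> ET.Element:
    """Return the first direct child with this tag, creating it if it is missing."""
    for node in parent:
        if node.tag == tag:
            return node
    return ET.SubElement(parent, tag)


def ensure_default_proxy(xml_text: str) -> str:
    """Set <system.net><defaultProxy><proxy usesystemdefault="true" /> in a web.config."""
    # insert_comments=True keeps existing <!-- --> comments inside the root (Python 3.8+).
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    root = ET.fromstring(xml_text, parser=parser)
    if root.tag != "configuration":
        raise ValueError("expected a <configuration> root element")
    proxy = _child(_child(_child(root, "system.net"), "defaultProxy"), "proxy")
    proxy.set("usesystemdefault", "true")  # overwrites any existing value
    return ET.tostring(root, encoding="unicode")


sample = "<configuration><!-- existing settings --></configuration>"
print(ensure_default_proxy(sample))
```

Running it twice on the same file produces the same result, so it is safe to include in a configuration script that runs on every web front-end server.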

The next step is to ensure you have the correct proxy settings configured on the host. You may inspect the current settings using netsh:

[code]netsh winhttp show proxy[/code]

If correct, there is nothing else to do. If you have a group policy that sets the proxy settings, you may already have them correctly configured.

If you need to change them, use netsh again, replacing proxyserver:8080 with your proxy’s host name and port:

[code]netsh winhttp set proxy proxyserver:8080[/code]

For the full specifics of the netsh proxy commands, refer to this TechNet article. You may need to set a bypass list of internal sites (the bypass-list parameter) in addition to the proxy server and port number.

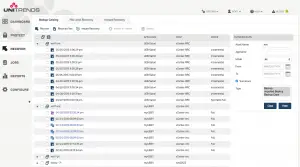

So, I am a little late to the party, but I just found Unitrends’ new Free Backup offering. Launched in May, it is distributed as a virtual appliance and has a 1 TB limit on protected data, but is otherwise free and clear for you to use. It is a perfect backup for your home lab or even for many small customers’ virtualization deployments.

Since the backup is metered by protected space, there are no limits on the number of VMs or CPUs that can be protected. Backups are incremental forever, and there is cloud integration with Amazon and Google, automated scheduling, and instant VM recovery. As I’ve written before, the restore is what matters most in a backup strategy. The free backup is virtualization-focused, with support for VMware and Hyper-V.

As for system requirements, the backup appliance needs:

- A minimum of 2 virtual processors (CPUs)

- A minimum of 4 GB of RAM

- 90 GB of space for the VM’s initial disk

- At least 128 GB of attached virtual disk backup storage

To find out more, head over to http://www.unitrends.com/products/unitrends-free-backup-software.

Unitrends is known for its appliance-based backup technology, but with its merger with PHD Virtual a couple of years ago, Unitrends now has a complete lineup covering everything from virtual to physical backup. The company has grown a lot since being founded here in Myrtle Beach. Currently headquartered in Burlington, MA, it has been a pioneer in disk-to-disk backup.

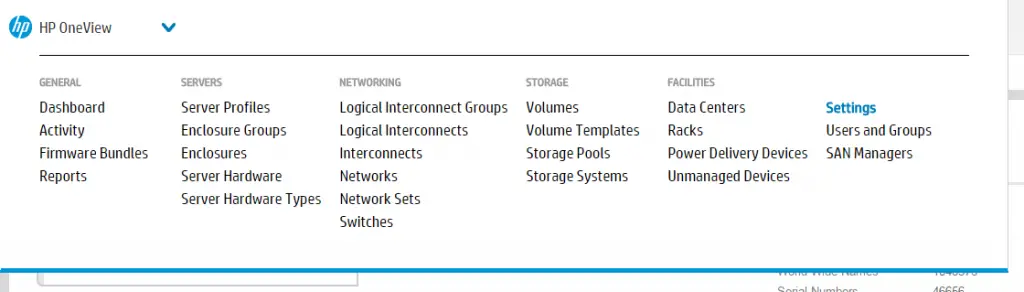

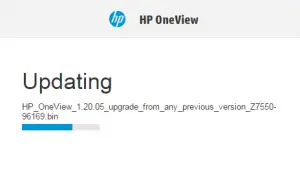

During HP Discover in June, HP announced that OneView 2.0 will ship soon. In the meantime, HP has released version 1.20.05, which addresses several security vulnerabilities, including POODLE over TLS, along with a number of other bug fixes. The release notes for 1.20.05 provide full details, along with the previous 1.20 releases and their changes.

In case you haven’t upgraded your HP OneView appliance since it was deployed, here is a walk-through showing how simple the appliance is to update. The 1.20.05 release is a good choice to install, since it packages all the previous releases into a single bundle that brings the appliance completely up to date.

HP OneView Upgrade

- Obtain the patch for HP OneView from the HP Software Depot. (You will need an HP Passport account to ‘order’ it, but there is no charge.)

- Log in to the OneView web interface as an administrative user.

- Navigate to the Settings section in the OneView menu.

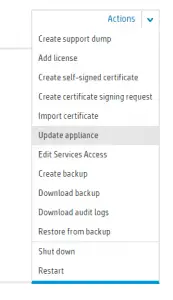

- Under Settings, select the Actions button and choose the Update Appliance option.

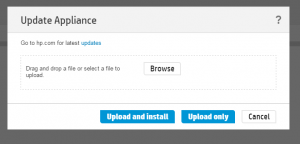

- In the Update Appliance section, choose Browse and select the .bin file you downloaded in the first step. Choose the Upload and Install button to begin uploading the patch. Alternatively, choose Upload to stage the patch without installing it.

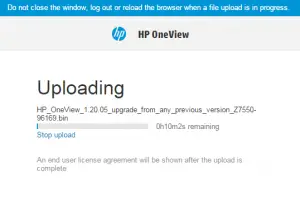

- The update will upload to the appliance.

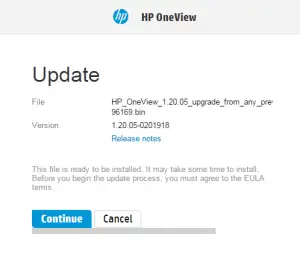

- You will be prompted again to Continue before the update installs.

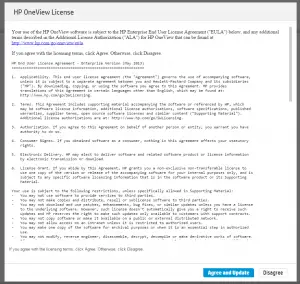

- Choose Agree and Update for the EULA when displayed.

- The appliance will update and restart once completed.

Power disguised as command line – 10 reasons SysAdmins should learn PowerShell scripting

I’m a big proponent of learning PowerShell as an administrator, engineer or any other practitioner role. And I think you should learn it. It is the path to the future.

- The pipeline. I’ve written a blog post in my head many times about the PowerShell pipeline. I think it is the single feature that makes PowerShell powerful. No, Microsoft didn’t invent the pipeline, and I know I’ll be chastised by *nix admins (ooo we had it first), but the pipeline really does make PowerShell incredibly effective. Once you have a data set, you can easily send it into another command, which accepts it and makes changes based on that data. Get-this | Do-that. It’s that simple!

- Data Objects. Even non-developers have encountered object oriented programming at one time or another. Objects exist to encapsulate, define and allow data to be easily manipulated. Objects are well formed and defined. You can explore them and find out more about your data. Objects are mini packages and inside the package are many tools to manipulate the data.

- Time. Doing things in PowerShell will save you lots of time. If you are repeating a task, it is easy to find a command to retrieve a list of the data you need to change, whether it’s mailboxes, accounts, computers, servers, etc., and then pipe it over to another command to make the change you need. Why click 500 times when you can do it in 30 characters in PowerShell?

- Extensibility. You can DIY to your heart’s content. In PowerShell, you’re not constrained to a list of executable files and built-in parameters. If you find something lacking or missing, you can easily change the data in an object, scope it down, and reshape it to meet your needs. And you’re not alone. PowerShell has a thriving community of DIY-ers, many of whom freely contribute their scripts and ideas online.

- It is Friendly. Learning scripting in VBScript, C#, or many other languages requires you to really dig in and learn a language. PowerShell isn’t that way. You don’t have to learn a lot of complex syntax to begin using PowerShell. From the first time you launch it, things mostly make sense and you can pick up the language quickly (though I won’t mention formatting numbers, for instance).

- Break the GUI glass. Sometimes you run up against a problem in the Windows management tools where you can’t export or get to the data you really want from the GUI. PowerShell gives you access to many repositories of information you’d have to work much harder to reach with other tools. Not only that, PowerShell includes a wide range of formatting commands to take data objects and output them quickly, easily and simply as CSV, HTML, and plain-text tables, lists and other views. With simple SQL-like commands such as Select, Where and Sort, you can also limit the output to exactly what you, or more likely your manager, want to see.

- It is Microsoft’s future. Microsoft has publicly stated that all future server products starting with Wave 15 (which included SharePoint 2013, Exchange 2013, etc.) would include PowerShell as the de-facto administration platform. Any GUI environments would become secondary and may be missing some things that can be only done in PowerShell. Don’t get left behind by not adopting PowerShell. Not only is it Microsoft’s future, but automation is a big part of the future of IT. PowerShell gives you an effective way to automate.

- It’s what *nix geeks always wanted. For years, Unix and Linux admins griped about all the things missing from Windows and how abysmal the Windows command line was. Microsoft took all those complaints off the table with PowerShell. It is a very powerful and capable environment for performing lots of work quickly. My *nix counterparts have always raved about having man pages for everything. PowerShell has its own version of man pages: the Get-Help command lets you explore other commands and find the parameters and options for each one, including examples. Going even further than *nix, PowerShell also includes tools like Get-Member to help you discover the properties and methods hidden in objects.

- You can join your Devs and commit your scripts to Git. OK, I joke a bit, but Devs often look down on administrators (aka server jockeys) because they are the ones who create the code. Once you become proficient at PowerShell scripting, don’t be afraid to share with the community and start committing your scripts to Git and public repositories for others to use. That’s how the community grows and learns from one another. Don’t only be a taker; be a giver, too. As a byproduct, you may understand your developers a little better.

- It’s not just Microsoft… Many other vendors have adopted PowerShell for scripting against their tools. I’ve written a lot (including a book) about PowerCLI, which is VMware’s PowerShell environment. What is great about third-party support is the ability to leverage everything you already know about base PowerShell and combine it with specific commands for a particular product. The same goes for Microsoft’s specific modules for its server products. Once you adopt PowerShell for Active Directory and Exchange, it is a natural extension to use it for SharePoint and Lync (Skype for Business).
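To illustrate what the pipeline and object points above mean in practice, here is a Python analogy (not PowerShell) of a Where-Object | Sort-Object | Select-Object | Export-Csv style chain. Each stage receives whole objects rather than text and passes objects on. The `Server` type, its fields, the helper names, and the sample data are hypothetical.

```python
import csv
import io
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Server:  # hypothetical stand-in for the objects a Get-* command returns
    name: str
    os: str
    free_gb: float


def where(items: Iterable[Server], predicate: Callable[[Server], bool]) -> Iterator[Server]:
    """Like Where-Object: pass through only the objects that match."""
    return (s for s in items if predicate(s))


def sort_by(items: Iterable[Server], key: Callable[[Server], float]) -> Iterator[Server]:
    """Like Sort-Object: must read every object before emitting the first one."""
    return iter(sorted(items, key=key))


def select(items: Iterable[Server], *fields: str) -> Iterator[dict]:
    """Like Select-Object: keep only the named properties."""
    return ({f: getattr(s, f) for f in fields} for s in items)


def to_csv(rows: Iterable[dict], fields: list[str]) -> str:
    """Like Export-Csv, but returns the text instead of writing a file."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


servers = [
    Server("web01", "Windows Server 2012 R2", 12.5),
    Server("sql01", "Windows Server 2012 R2", 3.2),
    Server("dc01", "Windows Server 2008 R2", 8.0),
]
# Get-this | Do-that: servers under 10 GB free, tightest first, two columns, as CSV.
low = where(servers, lambda s: s.free_gb < 10)
report = to_csv(select(sort_by(low, key=lambda s: s.free_gb), "name", "free_gb"),
                ["name", "free_gb"])
print(report)
```

Nesting the calls reads inside-out, which is exactly the awkwardness the PowerShell `|` operator removes. As with Sort-Object, the sort stage has to collect every object before it emits anything, while the other stages handle one object at a time.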

Now, how do you learn?

- Microsoft’s Official Home for PowerShell – https://msdn.microsoft.com/en-us/powershell

- Powershell.org – ’nuff said

- PowerShell Magazine – Particularly the Tips & Tricks Column

- Pluralsight has great courses to help you adopt PowerShell

- Books… Many many PowerShell titles out there.

- Twitter is another great source for finding people who create PowerShell scripts and make them available. The first person you should follow is the father of PowerShell, Jeffrey Snover (@jsnover). A couple more you’ll want to follow are Ed Wilson/The Scripting Guys (@ScriptingGuys) and Adam Bertram (@adbertram).

- Microsoft has a great resource called the Script Center – https://technet.microsoft.com/en-us/scriptcenter. If you check out the Other Resources section here, you’ll see the archive of Hey Scripting Guy!, a blog from Ed Wilson and others.

- You should also look for PowerShell Saturday events, held from time to time, which are in-person events devoted to PowerShell learning. They’re free and held all around the world.

- In addition to Twitter, Adam Bertram runs a blog called Adam the Automator where he shares a lot of the scripts he creates. He is a Microsoft PowerShell MVP, which is Microsoft’s title for community contributors on specific products.

- When it comes to PowerCLI, you’ll want to follow VMware’s official PowerCLI account (@PowerCLI), Alan Renouf (@alanrenouf), and Brian Graf (@vBrianGraf). These guys are all involved with VMware’s PowerCLI effort, and you can find great content on the official PowerCLI Blog at VMware.

- PowerShell on GitHub – https://github.com/powershell

- You might even find a few PowerShell and PowerCLI scripts on this site.

HP offers a show-and-tell of OneView 2.0 features, release date unannounced

Back in June, HP announced that OneView 2.0 would be coming soon. There is not an exact ship date for the product, but HP did share the major new features of the new version. From the list of features, some of the highlights are the introduction of templates to server profiles, enhanced firmware and device driver deployments, deeper storage integration, and an import feature for existing BladeSystem Virtual Connect Manager domains.

The introduction of templates allows you to define a configuration that can be replicated. While HP OneView 1.x allowed you to clone a server profile, once the profile was cloned there was no relationship between the original and the clone. With templates, however, server profiles stay linked to the template, which lets you make changes and push them automatically to all the linked servers. The use case here, for me, is ESXi hosts and clusters. I can easily define the configuration, and when a change is required, like adding a network uplink or VLAN, I make it on a single template and push it out to all the hosts. That is a huge time saver, and it keeps manual errors to a minimum.
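The difference between a 1.x clone and a 2.0 template link can be modeled in a few lines. This Python sketch is a toy model under my own assumptions, not the HP OneView API: `ServerTemplate`, `ServerProfile`, `clone`, and `push_template` are hypothetical, and VLAN IDs stand in for whatever settings a real template carries.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ServerTemplate:
    name: str
    vlans: set[int] = field(default_factory=set)


@dataclass
class ServerProfile:
    host: str
    template: Optional[ServerTemplate] = None  # None means "not linked to a template"
    vlans: set[int] = field(default_factory=set)

    def is_compliant(self) -> bool:
        return self.template is None or self.vlans == self.template.vlans


def clone(profile: ServerProfile, host: str) -> ServerProfile:
    """1.x-style clone: copies the settings once and keeps no link to the source."""
    return ServerProfile(host=host, template=None, vlans=set(profile.vlans))


def push_template(template: ServerTemplate, profiles: list[ServerProfile]) -> list[str]:
    """2.0-style update: bring every profile linked to `template` back in line."""
    changed = []
    for p in profiles:
        if p.template is template and not p.is_compliant():
            p.vlans = set(template.vlans)
            changed.append(p.host)
    return changed


esx = ServerTemplate("esxi-cluster", {10, 20})
hosts = [ServerProfile(f"esx0{i}", esx, {10, 20}) for i in range(1, 4)]
legacy = clone(hosts[0], "esx-legacy")
esx.vlans.add(30)  # one change on the template, e.g. a new VLAN
print(push_template(esx, hosts + [legacy]))
```

The three linked hosts pick up VLAN 30 from a single change, while the cloned profile keeps its old settings and would have to be fixed by hand.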

Another big benefit of templates is the ability to link HP’s Service Pack for ProLiant to a template and then automatically apply the firmware and device driver updates to all the hosts built from that template. This is another huge automation step for many environments and a potential time saver for environments small to large.

I had a chance to talk with folks on the show floor, and the Fibre Channel zoning management within OneView is particularly cool. Once you attach HP OneView to your Fibre Channel switches and your 3PAR arrays, OneView gains visibility into the storage environment. You configure a set of provisioning definitions that specify how to name zones and how to zone systems to the storage. OneView makes no changes to existing zones until you change the configuration of the associated systems; when that occurs, HP OneView rebuilds the zoning according to the definition you specified and removes the manually created zones. This provides a non-disruptive transition from manually created zones to HP OneView-managed zoning.
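To make the idea of a zoning definition concrete, here is a Python sketch assuming single-initiator zones (one host HBA port plus the array’s target ports) and a hypothetical naming pattern of `{host}_{hba}_{array}`. It is not how OneView stores or applies its definitions, and the WWPNs and names are invented. When a host’s configuration changes, the sketch computes which existing zones containing that host’s ports to remove and which managed zones to add, mirroring the transition described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    members: frozenset[str]  # WWPNs of the initiator and target ports in the zone


def desired_zones(host: str, hba_wwpns: dict[str, str], array: str,
                  target_wwpns: list[str], pattern: str = "{host}_{hba}_{array}") -> set[Zone]:
    """One single-initiator zone per host HBA port, named by the (hypothetical) pattern."""
    return {
        Zone(pattern.format(host=host, hba=hba, array=array),
             frozenset({wwpn, *target_wwpns}))
        for hba, wwpn in hba_wwpns.items()
    }


def rezone(host_wwpns: set[str], existing: set[Zone],
           desired: set[Zone]) -> tuple[set[Zone], set[Zone]]:
    """Return (zones to remove, zones to add) for a host whose configuration changed."""
    to_remove = {z for z in existing if z.members & host_wwpns and z not in desired}
    to_add = desired - existing
    return to_remove, to_add


# Hypothetical WWPNs for one ESXi host and one 3PAR array.
hbas = {"hba1": "10:00:00:00:c9:00:00:01", "hba2": "10:00:00:00:c9:00:00:02"}
targets = ["20:01:00:02:ac:00:00:01", "21:01:00:02:ac:00:00:01"]
existing = {
    Zone("esx01_manual", frozenset({hbas["hba1"], *targets})),               # hand-made zone
    Zone("backup_srv", frozenset({"10:00:00:00:c9:00:00:99", targets[0]})),  # another host
}
wanted = desired_zones("esx01", hbas, "3par01", targets)
remove, add = rezone(set(hbas.values()), existing, wanted)
print(sorted(z.name for z in remove), sorted(z.name for z in add))
```

The zone belonging to the other host is left alone even though it shares an array port, because only zones that contain the changed host’s own HBA ports are candidates for removal.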

Combining the storage management with templated server profiles provides an automated way to ensure that all systems within a cluster are zoned properly and that there are no manual errors or omissions in the zoning or presentation.

Another storage enhancement promised in OneView 2.0 is the ability to monitor SAN health from within the OneView console. Instead of needing to correlate between two consoles, you are able to see a more holistic view of the entire system from inside of OneView.

Fibre channel over Ethernet (FCoE) support has also been introduced in the new release.

For full details on HP OneView 2.0, check out the frequently asked questions document from HP.