DH2i Closes 2021 As Another Year of Record Sales Growth, Product Innovation, and Strategic Partnership Development

Strengthened Foothold Across Key Verticals and Transitioned to 100% Software as a Subscription Model

News Release

Helping Customers Get Hybrid Cloud Right:

iland Offers new Private Cloud Capabilities and Upgrades that Unify Hybrid Environments

New capabilities enable customers to mix and match application needs with business requirements through new high performance options and cost optimized hardware configurations

HOUSTON – June 11, 2020 – iland, an industry-leading provider of secure application hosting and data protection cloud services built on proven VMware technology, today announced new capabilities for iland Secure Cloud that enhance iland’s comprehensive hybrid cloud offering by maximizing application performance while controlling costs.

This is the latest update to iland’s Infrastructure as a Service (IaaS) portfolio that includes secure public cloud, hosted private cloud, and hosted bare-metal services. Together, these solutions provide the common foundation for secure application hosting, backup as a service (BaaS), and disaster recovery as a service (DRaaS), and reduce barriers to adoption through flexible and thoughtful cloud design, performance and cost optimization.

“iland’s Secure Cloud offering, managed by our unified Secure Cloud Console, is key to achieving hybrid cloud success for our customers. This combination of solutions recognizes the inherent complexities in creating and managing hybrid environments and provides the numerous options necessary to architect the right levels of performance and cost, as well as delivering integrated security, compliance, data protection, disaster recovery, and more,” said Justin Giardina, CTO, iland.

“This holistic approach, which focuses on truly understanding our customers’ needs rather than overselling or oversimplifying the process, is made even more powerful by new server configurations and licensing options as well as full private cloud integration into our console,” added Giardina.

As a specialized cloud provider, iland is able to deliver the flexibility, scale and agility normally associated with large hyperscale cloud providers, but without compromising on infrastructure control, visibility, or ease of use. In addition to secure public cloud options, dedicated private clouds, and bare-metal services, iland manages all cloud resources up to the hypervisor and includes end-to-end planning, deployment, onboarding, and support services, as well as a complimentary assessment tool to help customers right-size their cloud service to meet individual application requirements.

“iland gives us the flexibility to rapidly modify our infrastructure to address our immediate needs and what lies ahead,” said Lawrence Bilker, executive vice president and CIO of Pyramid Healthcare. “If you’re looking for a partner that can provide an IaaS platform that has high availability at a reasonable cost and the flexibility to adapt to changing business needs, iland is a phenomenal choice.”

The new features include expanded server options designed for I/O intensive applications that require higher quantities of RAM for data processing, as well as new hardware options that provide the flexibility to increase or decrease CPU core counts based on specific application licensing models. These new features enable customers to scale their performance and capacity resources independently to ensure application and cost expectations are met. Additional capabilities with this announcement include updates to the iland Secure Cloud Console for improved hybrid cloud management across iland public and private clouds.

New iland Secure Private Cloud features and services announced today include:

About iland

iland is a global cloud service provider of secure and compliant hosting for infrastructure (IaaS), disaster recovery (DRaaS), and backup as a service (BaaS). It is recognized by industry analysts as a leader in disaster recovery. The award-winning iland Secure Cloud Console natively combines deep layered security, predictive analytics, and compliance to deliver unmatched visibility and ease of management for all of iland’s cloud services. Headquartered in Houston, Texas; London, UK; and Sydney, Australia, iland delivers cloud services from its cloud regions throughout North America, Europe, Australia, and Asia. Learn more at www.iland.com.

Unique solution, commitment to open-source innovation, and world-class customer experience keys to success

CUPERTINO, Calif.–(BUSINESS WIRE)–Rancher Labs, creators of the most widely adopted Kubernetes management platform, more than doubled its commercial revenue and enterprise customer base in 2019. This significant year-on-year growth demonstrates an accelerating demand for containers within the enterprise and, specifically, containerized workloads running on Kubernetes.

@Rancher_Labs achieves 169% revenue growth, doubles enterprise customer base in 2019 as multi #cloud, multi-cluster #Kubernetes management market matures

The rapid adoption of Kubernetes has been widely predicted. According to 451 Research, 76% of enterprises worldwide will standardize on Kubernetes by 2022. Additionally, Rancher Labs’ own industry survey indicates multi-cluster environments are the norm, hybrid cloud deployments are prevalent, and edge is emerging as an important use-case.

“The Kubernetes marketplace saw significant consolidation in 2019 with the acquisitions of Heptio and Pivotal by VMware, and Red Hat by IBM,” said Sheng Liang, CEO of Rancher Labs. “Understandably, many organizations are concerned by the possible consequences of this consolidation and are turning to Rancher as the only vendor-neutral, multi-cluster, multi-cloud Kubernetes management platform.”

Unique Approach to Managing Kubernetes

Rancher’s unique Run Kubernetes Everywhere strategy is built on three simple tenets:

Commitment to Open-Source Innovation

Illustrating Rancher Labs’ commitment to open-source innovation, in 2019 the Cloud Native Computing Foundation (CNCF) accepted Rancher’s vendor-neutral container storage solution, Longhorn, as its latest Sandbox project. Longhorn joined 20 other current projects and was accepted in recognition of the unique value it brings to the cloud-native ecosystem.

Additionally, Rancher Labs received a Firestarter Award from 451 Research for innovation, enterprise leadership, and its ‘trailblazing work that made it possible to handle a range of Kubernetes implementations from a common management platform.’

Gartner Research also recognized Rancher Labs in 2019, citing the company in two of its Container Industry reports and five different Gartner Hype Cycle reports.

World-Class Customer Experience

Rancher’s customers include many of the world’s largest enterprises. As such, another key factor in Rancher’s continued growth is its commitment to a world-class customer experience, which includes onboarding services, certification programs, professional and consulting services, and 24/7 support. This commitment is reflected in the company’s industry-leading Net Promoter Score of 70.

What Customers Are Saying

One of the largest commercial banks in the Netherlands is standardizing on Kubernetes and Rancher. Erik van der Meijde, Platform Delivery Manager at de Volksbank comments: “Containers are now at the heart of our growth and innovation strategy. In order to be competitive, we need to increase the velocity of development through automation, while maintaining reliability and security. Rancher represents ‘next level’ containerization that allows us to become more agile and innovative.”

Netic is one of a group of companies creating the first truly national and entirely digital health service in Denmark, and it is making Rancher an intrinsic part of its deployment plans. Kasper Kay Petersen, Sales and Marketing Director at Netic, comments: “For a hybrid environment, Rancher is ideal for managing components across on-premise and the cloud. The ongoing health of the Danish population relies on a healthcare infrastructure that is ‘always on’ and completely reliable. As new applications are added to FUT, the volume of patients connecting to the service will quickly expand. Rancher allows us to scale rapidly and to develop and test new services concurrently, securely, and in a stable way.”

Supporting Resources

About Rancher Labs

Rancher Labs delivers open source software that enables organizations to deploy and manage Kubernetes at scale, on any infrastructure across the data center, cloud, branch offices, and the network edge. With 30,000 active users and greater than 100 million downloads, their flagship product, Rancher, is the industry’s most widely adopted Kubernetes management platform. For additional information, visit www.rancher.com and follow @Rancher_Labs on Twitter. All product and company names herein may be trademarks of their registered owners.

In a somewhat surprising move, HPE announced that it will make its entire portfolio available in a consumption-based procurement model within the next 3 years. Every product, every category. While the company will continue to offer traditional capital procurement, the shift to subscription is big news for the company.

HPE has been on the path to consumption-based IT for the past several years. Back in 2017, the company rebranded its consumption offerings as HPE Greenlake and began working with larger enterprise accounts to transition to op-ex procurement for their IT resources. The company announced that, as of HPE Discover, it is working with around 600 customers, representing about $2.5 billion in revenue.

The move to consumption-based IT will occur over the next 3 years, encompassing all product lines and offerings according to company staff. This change also moves the target from the high-end addressable market to the entire market by opening access to the small & medium business markets.

Subscription models are not new – companies like Microsoft and Adobe have successfully navigated the transition away from traditional acquisition models to subscription businesses. But the shift of an entire company and product line in the hardware space is a first. HPE staff say the company is best positioned in the industry because of the work and learning it has done with HPE Greenlake over the last two years.

The advent of cloud computing brought consumption-based IT to the masses. It opened up a wide range of capabilities, such as analytics infrastructure and specialized services, that were previously cost-prohibitive for customers who could not afford to deploy them on their own but could consume them from the cloud.

Consumption-IT models open up lots of capabilities that may not otherwise be available to smaller customers. There are many examples of analytic services and capabilities that were out of reach for SMB customers prior to cloud computing. Greenlake could present opportunities to companies that may not be able to afford the on-prem solution.

HPE has confirmed that they will offer partner solutions as part of the offerings with Greenlake. Nutanix, Commvault and Veeam are all partners offered in the Greenlake consumption model paired with the HPE hardware solutions.

Disclaimer: HPE paid for my trip to attend HPE Discover. All thoughts and writing in this post are my own views and opinions and not reviewed or dictated by HPE.

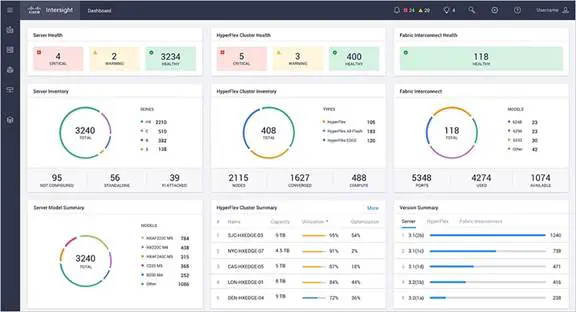

Last year, Cisco released a new cloud-based system management software named Intersight. In the same vein as CIMC Supervisor, Intersight allows individual hosts to be registered into a central portal where they can be managed. Currently licensed at a free Base level and a paid Essentials level, Intersight provides basic management for your systems. Intersight is a good first release, although the features it offers are very slim.

Officially, the Base license offers the following ‘features’; however, Base offers very little functionality that would compel C-Series users to adopt Intersight at this time. If you are using UCS B-Series or HyperFlex, you may get more out of the box; however, I don’t have any of those systems linked to our instance to know for sure.

The Essentials license is priced on a subscription basis in 1-year, 3-year and 5-year terms. The official list of functionality, taken from the app, is below:

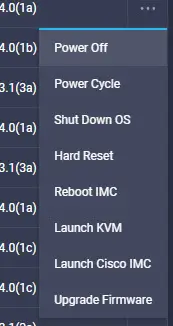

For C-Series users, this is where things come alive. Unfortunately, there are some definite deficiencies. The nicest feature is single sign-on, which launches KVM and CIMC sessions directly from the cloud portal.

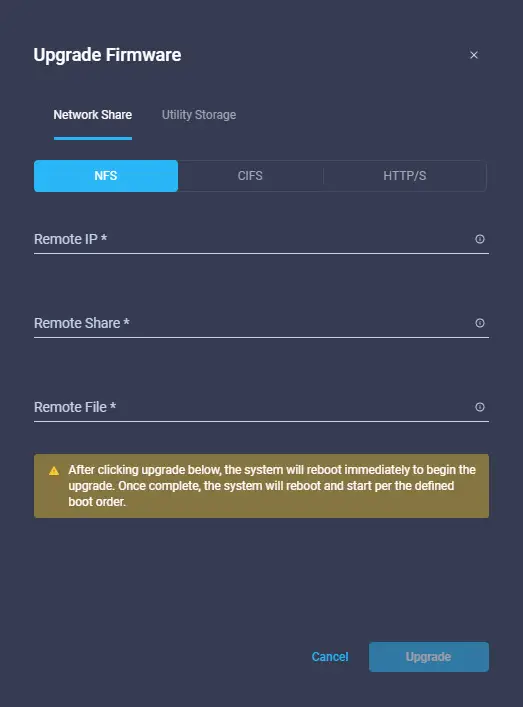

Firmware management for C-Series is nowhere near what I would expect of a product like this. There is no automation and no library, simply the ability to push a firmware update from the website with a lot of complicated controls. Firmware management is a letdown.

The screenshot to the right shows the tangible tools you can expect on a C-Series server licensed with Essentials.

Cisco Intersight supports Cisco UCS and Cisco HyperFlex platforms with the software versions:

Cisco Intersight, when combined with Cisco UCS Director 6.6 and later, allows for updates to the southbound connectors as they are released, instead of having to wait for the next UCS Director release. This allows customers to rapidly support new hardware without having to go through a UCS Director upgrade.

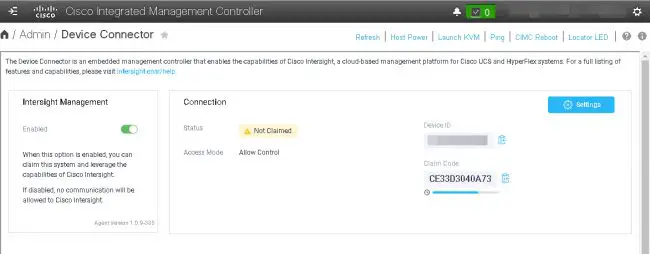

Registration is fairly simple – each CIMC has a Device ID and communicates directly with Intersight. In addition, each system that is able to talk with the cloud has a Claim Code. The Claim Code is basically a rotating password that allows you to register the device in the portal.

Once registered, all of the devices appear in the portal interface. The inventory is activated and you are able to see system details. Every system has a health indicator, and you can clearly see all the management IPs for your systems. If a system is connected to a Fabric Interconnect, you also get the name of the UCS domain or HyperFlex cluster and its associated Server Profile.

Firmware update for stand-alone systems is a disappointment. It doesn’t offer anything differentiated. Your only option is to set a lot of manual parameters to kick off an immediate firmware update on a host. Manual and immediate: neither is a helpful feature for administrators. There is a lot of room for improvement from the company that introduced the world to release sets and firmware bundles. There should be a way to choose directly from the Cisco firmware library and push those images down to managed systems without having to set all the manual options.

I have had a long relationship with Hewlett Packard and now Hewlett Packard Enterprise. Today, I sat in a strategy session with Paul Miller and Mark Linesch. It is an interesting time as these two guys have transitioned into marketing roles for the whole of HPE – reacting to the transition of IT purchasing away from silos and into a holistic strategy.

Creating a good strategy can be tough and marketing a strategy is even tougher. These two guys have been engaged for several years creating solutions within HPE that move towards a vision of hybrid IT. What has evolved is the desire from customers to operate on-premises infrastructure as a cloud, with the same acceleration and agility benefits that cloud brings. This was echoed in a later session with research from Futurum Research saying that customers, given the choice, would continue to operate on-premises or in their own colocation if they could operate it like the cloud. So, it’s finally confirmed by customers: cloud is a method of operation and not a destination.

Two major things emerged from the session. HPE and Hewlett Packard Labs may be heavily invested in research into new technologies and architectures, but the company is trying to bring the industry along through partnerships. HPE worked diligently with other compute vendors to establish the Redfish specification, a common programmatic way to interact with server management interfaces across the industry. More recently, with its research into memory-centric computing architectures, the company is working with partners in the Gen-Z Consortium. HPE realized early that memory-centric computing would require many changes and innovations that would take time for software vendors and customers to adopt, meaning it needed to build a pipeline and support from other companies for this technology. Production products in this sector are around 2 years away from release.
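To make the idea of a common programmatic interface concrete, here is a minimal Python sketch that reads a Redfish Systems collection, which is the resource a compliant management controller exposes at `/redfish/v1/Systems`. The BMC hostname is a hypothetical placeholder. The payload is a hand-written sample in the shape the specification describes, not output captured from an HPE system. A live client would fetch it over HTTPS with credentials, and that step is omitted here.

```python
import json
from urllib.parse import urljoin

# Hypothetical BMC address for illustration; not a real HPE endpoint.
BMC_BASE = "https://bmc.example.internal"

def system_uris(collection: dict) -> list:
    """Return the @odata.id path of every member in a Redfish Systems collection."""
    return [member["@odata.id"] for member in collection.get("Members", [])]

def absolute_urls(base: str, paths: list) -> list:
    """Resolve collection member paths against the controller's base URL."""
    return [urljoin(base, path) for path in paths]

# Hand-written sample shaped like a GET on /redfish/v1/Systems (member IDs vary by vendor).
sample = json.loads("""
{
  "@odata.id": "/redfish/v1/Systems",
  "Members@odata.count": 2,
  "Members": [
    {"@odata.id": "/redfish/v1/Systems/1"},
    {"@odata.id": "/redfish/v1/Systems/2"}
  ]
}
""")

for url in absolute_urls(BMC_BASE, system_uris(sample)):
    print(url)
```

Every compliant vendor exposes the same collection layout. The same few lines should therefore work against any conforming controller, which is the point of a shared specification.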

This week’s Composable Cloud announcement is another step towards the operation of on-premises resources as cloud. Composable Cloud takes the technology acquired with Plexxi and marries it with rack-mount ProLiant servers (currently specific SKUs) to create a composable network fabric. Plexxi creates flat networks that are programmable through a controller management console. That currently leaves the company with a slightly split strategy between HPE Synergy, which uses traditional networking, and the Plexxi-based Composable Cloud for rack-mount servers.

These guys also did something that many at HPE do not often know how to do – they admitted that there were mistakes and missteps in their strategy over the last 5 years. Helion Cloud, CloudSystem and others come to mind. These were not the right way to do hybrid IT. Do they have the right strategy now? Time will tell.

Composable Cloud is the next step in HPE’s vision of the future in hybrid IT – enabling on-premises IT to operate like cloud and manage cloud consumption across multiple clouds. The major news during the conference is Composable Fabric – the new branding of Plexxi within the HPE portfolio. Composable Fabric was the missing piece that allows HPE to compose rack-mount servers, in addition to the HPE Synergy platform.

While HPE has been talking about Composable Fabric for a while on the Synergy platform, it didn’t have a product that allowed its rack servers to have composed networking. HPE OneView has been a great addition to the automation portfolio for HPE, but the lack of networking support is glaring in the lineup, until now. Synergy has a narrowly defined number of ways that it can be installed and connected, so networking in Synergy is somewhat easier for the company to handle. So, for now, HPE has two Composable Fabric solutions – one in its Synergy chassis and one based on Plexxi for rackmount.

Composable Fabric/Plexxi is a very cool solution. It is intended to work however a rack-mount installation is built. With Plexxi switches, you take the switches out of the box and cable them together any way you like. They then form a base broadcast network that knows all the elements and can load balance traffic across the fabric. Add cabling to your Plexxi-enabled DL380s and you can begin to build a fully automated pool of systems.

Plexxi OS, the switch software, runs on HPE/Plexxi certified switches, which are open standard switches (aka whitebox switches). The Plexxi folks I spoke with said the code would likely run on other open switch platforms; however, it would not be certified or supported. Like all other open networking solutions, the secret is in the software. A controller is used to define the configuration of the network and then handle all of the ways that interconnection can occur.

In some ways, it seems that Cisco’s network-centric design, which encompassed both blades and rack-mount servers, was the right approach, and the Plexxi acquisition is a step to correct this. Granted, the two approaches differ: open hardware with specific software (HPE Composable Fabric) versus Cisco’s tightly coupled hardware and software. Also glaring at this point is that Cisco has a single management plane while HPE’s solution has two, the Plexxi management and HPE OneView.

Unfortunately, Plexxi is currently HPE’s solution for rack-mount servers only, though I expect to see something similar introduced on Synergy; it is just logical (but I have no inside information). I also wonder how long the Plexxi controller will exist separately from OneView; again, I think merging those two functions is a logical step. While Composable Cloud includes the newly rebranded Plexxi code and release, HPE also introduced a SimpliVity/Plexxi package during the conference based on the latest 4.x release.

For more information, check out the news release from HPE.

HPE has announced support for 3PAR storage with its HPE InfoSight cloud analytics product, acquired with Nimble, along with new updates to InfoSight focused on proactive recommendations. Even before the conference officially begins, HPE is releasing announcements that customers can dig into more during the Discover event in Madrid this week.

As promised earlier, the InfoSight support for 3PAR is coming to all existing customers with active support contracts on their 3PAR arrays. Since 3PAR already had a rich set of telemetry built into the platform, the main hurdle of integration was letting the InfoSight Data Scientists loose on the raw data to map and make sense of it. HPE leveraged existing code and analytical models against the 3PAR data to do this quickly [HPE completed the Nimble acquisition just 7 months ago].

The first iteration of InfoSight for 3PAR includes three feature sets:

Not resting on past accomplishments, the InfoSight team also has a new set of capabilities it is unleashing to customers – Preemptive Recommendations. For these, HPE is drawing insights from the data it is getting from the storage and virtualization platform to make recommendations that prevent issues, improve performance and optimize the environment.

To a large degree, what HPE is talking about in preemptive analytics in InfoSight sounds a lot like the problems VMware is trying to solve in vSphere with proactive recommendations from vRealize Operations Manager (vROPS). Where these recommendations can potentially shine in InfoSight is in the deeper insights that correlate hardware and software, since InfoSight has deeper visibility into that platform. Clearly, all tech companies are realizing that there is tremendous value in machine learning applied to IT support.

HPE is using machine learning and expert-defined conditions to generate preemptive recommendations. Since the learning engine is cloud-based, the refinement and self-learning should power a number of wins for customers as the install base widens and customers adopt this support tool, making it difficult for on-premises prediction engines to replicate.
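The ‘expert-defined conditions’ half of this approach can be pictured as rules evaluated against telemetry samples. The minimal Python sketch below assumes hypothetical metric names (`used_pct`, `read_latency_ms`, `cache_hit_pct`) and thresholds. It is not InfoSight's rule set, and it leaves out the machine-learning side entirely.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Rule:
    name: str
    condition: Callable[[Dict[str, float]], bool]  # expert-defined test on one telemetry sample
    recommendation: str

# Hypothetical metrics and thresholds chosen for illustration; not InfoSight's actual rules.
RULES: List[Rule] = [
    Rule("capacity", lambda s: s["used_pct"] >= 85,
         "Plan a capacity expansion before the array fills."),
    Rule("cache", lambda s: s["read_latency_ms"] > 5 and s["cache_hit_pct"] < 80,
         "Review cache sizing: read latency is high while the cache hit rate is low."),
]

def recommend(sample: Dict[str, float], rules: List[Rule] = RULES) -> List[str]:
    """Return the recommendation of every rule whose condition matches the sample."""
    return [rule.recommendation for rule in rules if rule.condition(sample)]

print(recommend({"used_pct": 90, "read_latency_ms": 2.1, "cache_hit_pct": 95}))
```

The hard part in a cloud-based engine is what this sketch omits: learning new conditions and thresholds from fleet-wide telemetry rather than hard-coding them.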

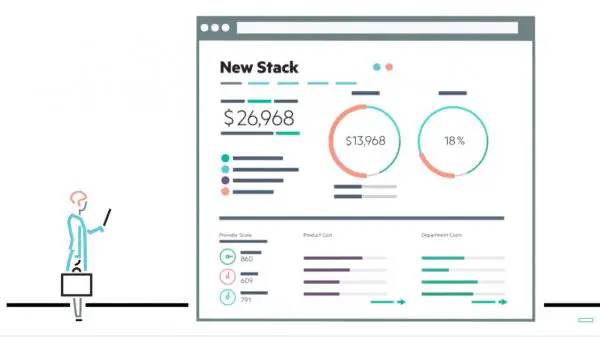

One of the most interesting announcements this week at HPE Discover was a project called New Stack. The project is a technology preview that HPE is ramping up with beta customers later this year. Its intention is to integrate the capabilities of all the “citizens” it manages, providing IT with cloud-like capabilities based on HPE platforms, and to marry that with the ability to orchestrate and service multiple-cloud offerings. That’s a mouthful, I know, so let’s dig in.

If you assess the current HPE landscape of infrastructure offerings, the company has been building a management layer using its HPE OneView software and its native APIs to allow full software definition of its hardware. Based on OneView, HPE has created hyperconverged offerings and its HPE Synergy offerings, building additional capabilities on top of OneView. With the converged offerings, deployment times are reduced and long-term management has been improved with proprietary software.

With the hyperconverged offerings, HPE calls it VM Vending, meaning it’s a quick way to create VM workloads for traditional application stacks. It does not natively assist with cloud-native workloads. Certainly, you can deploy VMs and VMware solutions to assist with OpenStack and Docker. Synergy improves on the hyperconverged story by including the Image Streamer capability, which allows native stateless system deployment and flexibility in addition to traditional workloads. Synergy is billed as a bimodal infrastructure solution that enables customers to run cloud-native and traditional workloads side by side.

And then there is a gap: the orchestration layers required to enable cloud-like operations in on-premises IT. HPE has a package called CloudSystem, which also existed as a productized converged solution, that includes this orchestration layer to enable cloud-like operations. The primary problem with CloudSystem is cost. It’s expensive (I speak from personal experience). It’s also cumbersome. CloudSystem pieced together multiple software packages that already existed in HPE, including some old dial-up billing software that morphed into cloud billing. CloudSystem was clearly targeted at service providers.

The reality today is that regular IT needs to transform and be able to operate with cloud methodologies. It needs to be able to offer a service catalog to its users, whether developers, practitioners, or business folks ordering services for their organization. Project New Stack is planned to enable this cloud operation and orchestration across on-premises and multiple clouds.

New Stack is entirely new development for the company, based on HPE’s Grommet technology and integrating technology from CloudCruiser, which HPE acquired in January 2017. CloudCruiser provides cost analysis software for cloud, and that makes up a big part of what has been shown of Project New Stack during Discover. CloudCruiser focused on public cloud, so HPE has had to do some development around costing for on-premises infrastructure. The tool attempts to give cost and utilization percentages across all your deployment endpoints.

Project New Stack taps into the orchestration capabilities of Synergy and its hyperconverged solutions to assist with pivoting resources into new use cases on-premises. It uses those resources to backend the services offered in a comprehensive service catalog. Those services are offered across a range of infrastructure, on-site and remote. Administrators should be able to pick and choose service offerings and map them from a cloud vendor to their users for consumption, but this is not the only method of consumption.
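At its simplest, a service catalog of this kind maps what a user orders to the backends an administrator has approved for it. The Python sketch below is a hypothetical illustration, not Project New Stack's data model. The service names, the backend labels, and the rule that the first listed backend is the default are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass(frozen=True)
class Offering:
    service: str   # what the user orders from the catalog
    backend: str   # where it is fulfilled: an on-premises pool or a public cloud

# Hypothetical catalog: service name -> backends an administrator has approved, default first.
CATALOG: Dict[str, List[str]] = {
    "linux-vm-small": ["onprem-synergy", "aws"],
    "object-storage": ["azure"],
}

def order(service: str, preferred: Optional[str] = None) -> Offering:
    """Resolve a catalog order to a backend; the call is the same wherever it lands."""
    backends = CATALOG.get(service)
    if not backends:
        raise KeyError(f"{service!r} is not in the catalog")
    if preferred is None:
        return Offering(service, backends[0])
    if preferred not in backends:
        raise ValueError(f"{preferred!r} is not an approved backend for {service!r}")
    return Offering(service, preferred)

print(order("linux-vm-small"))
print(order("linux-vm-small", preferred="aws"))
```

The user-facing call does not change whether the order lands on-premises or in a public cloud. Only the administrator-maintained mapping decides where the service is fulfilled.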

Talking with HPE folks on the show floor, they don’t see Project New Stack as the interface that everyone in an organization will always use. Developers can still interact with AWS and Azure directly from their development tools, but Project New Stack should take any of those services spun up or consumed and report them, aggregate them, and allow the business to manage them. It doesn’t hamper other avenues of consumption, which explains the focus on cost analysis and management in the new tool.

Orchestration is only part of the story. New Stack should give line-of-business owners the ability to get insights from their consumption and make better decisions about where to deploy and run their apps. Marrying it with a service catalog adds a new dimension of decision-making ability, based on cost. As with all costing engines, the details will make or break the entire operation. Every customer gets varying discounts and pricing from Azure and AWS. How those price breaks translate into the cost models will really dictate whether or not the tool is useful.
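The following minimal Python sketch shows why negotiated discounts matter so much to a costing engine. It applies per-customer discounts to list prices and aggregates usage per endpoint. All prices, discounts, and endpoint names are hypothetical and chosen only for illustration. This is not how New Stack or CloudCruiser computes cost.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical list prices in USD per instance-hour, keyed by (endpoint, instance size).
LIST_PRICE: Dict[Tuple[str, str], float] = {
    ("aws", "medium"): 0.10,
    ("azure", "medium"): 0.11,
    ("onprem", "medium"): 0.06,  # internal chargeback rate; no vendor discount applies
}

def effective_rate(endpoint: str, size: str, discounts: Dict[str, float]) -> float:
    """List price after the customer's negotiated discount (0.20 means 20% off)."""
    return LIST_PRICE[(endpoint, size)] * (1.0 - discounts.get(endpoint, 0.0))

def cost_by_endpoint(usage: Iterable[Tuple[str, str, float]],
                     discounts: Dict[str, float]) -> Dict[str, float]:
    """Aggregate (endpoint, size, hours) usage records into total cost per endpoint."""
    totals: Dict[str, float] = defaultdict(float)
    for endpoint, size, hours in usage:
        totals[endpoint] += effective_rate(endpoint, size, discounts) * hours
    return dict(totals)

usage = [("aws", "medium", 100), ("azure", "medium", 100), ("onprem", "medium", 100)]
at_list = cost_by_endpoint(usage, discounts={})
negotiated = cost_by_endpoint(usage, discounts={"aws": 0.20, "azure": 0.30})
print({k: round(v, 2) for k, v in at_list.items()})
print({k: round(v, 2) for k, v in negotiated.items()})
```

At list price, the AWS hours look cheaper than the Azure hours (10.00 vs 11.00 USD). With the assumed discounts, the order flips (8.00 vs 7.70 USD). If a tool gets a customer's price breaks wrong, its placement advice can point the wrong way.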

The ability to present a full service catalog shouldn’t be underestimated. It is a huge feature, enabling instantiation of services in the same way whether the backend is a cloud or on-premises. On-premises is new territory for most enterprises with cloud services. Enterprise is slow to change, but if the cloud cliff is real, more companies will face the decision of bringing those cloud-native services back on-premises for some use cases.

What I do not hear HPE talking about is migration of services from one cloud to another. While it may be included, this is typically the Achilles’ heel of most cloud discussions. Migration is easier to accomplish with microservices and new-stack development than with traditional workloads, for which bandwidth constraints and incompatibilities between clouds still exist. And I didn’t get far enough into the system on the show floor to see how an enterprise could leverage it to deploy its own cloud-native code, or simply draw insights from the cloud-native code already deployed.

Project New Stack was the most interesting thing I saw at HPE Discover this week. While HPE has been developing software-defined capabilities for its infrastructure, what has been lacking is an interface to integrate it all together. OneView is a great infrastructure-focused product made for defining and controlling the full on-premises infrastructure stack. It’s incredibly important for enabling cloud-like operations on-premises. In its early forms, New Stack won’t tie everything up in a nice little package. But it is early, and that’s intentional on HPE’s part: get the product into the hands of customers and then refine it.

Unique, new types of datasets are forcing companies to investigate cloud-powered analytics to tap into data that can steer a company’s direction and its decision making. These datasets have challenging characteristics that require moving the analytics platform and not the data that should be mined.

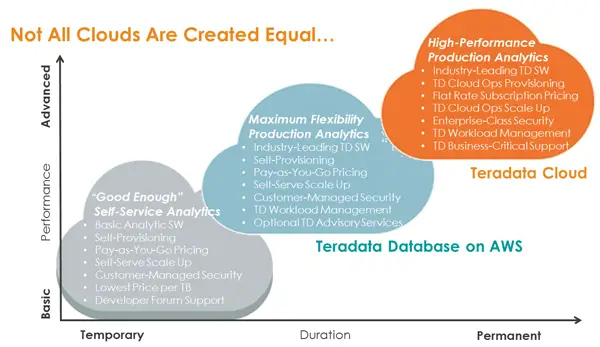

A few months ago, I was part of a briefing from Teradata, one of the largest data warehouse and analytics software vendors in the world. Teradata announced that they would be bringing their analytics platform to Amazon Web Services and offering their data platform on a utility based consumption model. But it wasn’t the announcement as much as the reason that I found really interesting on the call. According to Teradata, data proximity is one of the key driving factors to move analytics onto cloud platforms like Amazon’s.

Big Data has been an industry buzzword for several years now. Working for a medium-sized business, I assumed that big data and big data problems were simply outside my area of concern. We don’t have that much data, I thought, but I have realized I was wrong. You don’t have to be a big business to have big data problems and benefit from analyzing big datasets. The rate at which data is generated has exploded in the last couple of years, and it will continue to explode in years to come because of new innovation.

First, there are massive amounts of data being generated online through social networks like Facebook, Twitter, Instagram and others. This data has the potential to provide insights into customer perception and concerns for any brand and company, if they can tap into the data and make sense of it.

Sensor data is another major area of concern. Enterprises have long had appliances with sensors that report environmental conditions in data centers. Hardware vendors have built sensors into their servers and racks to report hot spots and cold spots, track optimal power usage, and optimize operational costs. But the truth is that the Internet of Things is bringing a massive expansion of sensor data. Everything from your toaster to your light bulbs has sensors and data that can be tapped for different uses. Depending on the business, these devices create massive datasets that may have use cases for the enterprise.

As another example, municipalities are increasingly deploying cameras throughout their territory to watch for accidents and gain visibility when crimes occur. Video takes an immense amount of storage. Even more demanding than storing the data is making sense of it once it is acquired, which is more than a human can perform manually. This requires an automated software method to analyze and make sense of the video data being transmitted.

All three of these examples share one common trait. The data generated exists outside of the corporate firewall – the usual place where analytics platforms are run – and sometimes outside of the control of the enterprise – as is the case with Internet sites and sensor data from products sold to customers. This presents the idea of data proximity and locating the analytics platform closer to the data it should mine.

Data proximity is an interesting concept. Cloud services – like Twitter, Facebook and other social services – have created a ton of new data but it is not necessarily data that needs to be transported and stored within the confines of the corporate firewall. Datasets like video are far too large to be transported once they are at rest and this may also be a driving factor for locating the analytics closer to the dataset.
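A back-of-the-envelope calculation shows why large datasets tend to stay where they are. The Python sketch below estimates how long a bulk transfer takes. The dataset sizes and link speeds are illustrative assumptions, not figures from Teradata, and real transfers are usually slower than this ideal case. For example, 100 TB over a fully used 1 Gbps link takes a little over nine days.

```python
def transfer_days(dataset_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days to move dataset_tb decimal terabytes (10**12 bytes) over a link_gbps link.

    efficiency (0 < efficiency <= 1) scales down usable bandwidth to model protocol
    overhead and contention; 1.0 gives the theoretical best case.
    """
    bits = dataset_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 86_400  # seconds per day

for tb, gbps in [(100, 1), (100, 10), (1000, 10)]:
    print(f"{tb} TB over {gbps} Gbps: {transfer_days(tb, gbps):.1f} days")
```

Moving the analytics platform to the data avoids this cost entirely, which is the data proximity argument in numbers.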

The Teradata Database on AWS is the solution announced in October. Running on the Amazon Web Services, it offers customers a pay-as-you-go consumption model with self-provisioning services and scale up capabilities. It joins Teradata’s existing Teradata Cloud offering that is a dedicated analytics platform hosted by Teradata in its datacenters. While the Teradata Cloud is targeted at high-performance analytics, the Teradata Database on AWS is targeted at middle ground customers who need a reliable, production analytics platform with the economies of scale and pricing of utility cloud computing.

In addition to allowing the software to run on AWS, Teradata also announced optional advisory services to assist customers with setup and configuration in AWS. The white-glove configuration gives customers a well-supported environment configured by professionals with years of experience, rather than a do-it-yourself approach to getting their own analytics running in the cloud.

Teradata Database on AWS intends to allow companies of all sizes to run a powerful analytics platform, locating it closer to the datasets it needs to investigate while enabling adoption with a more approachable cost.