HP says it is ready and waiting with Virtual Volume (vVol) support on the 3PAR StoreServ platform for when VMware releases vSphere 6. vVol support is one of the most discussed storage topics at VMworld, and HP says it has been working with VMware since the pre-acquisition days of 3PAR to implement and support vVols on the platform. The HP 3PAR StoreServ has also been the test architecture for Fibre Channel with vVols for VMware.

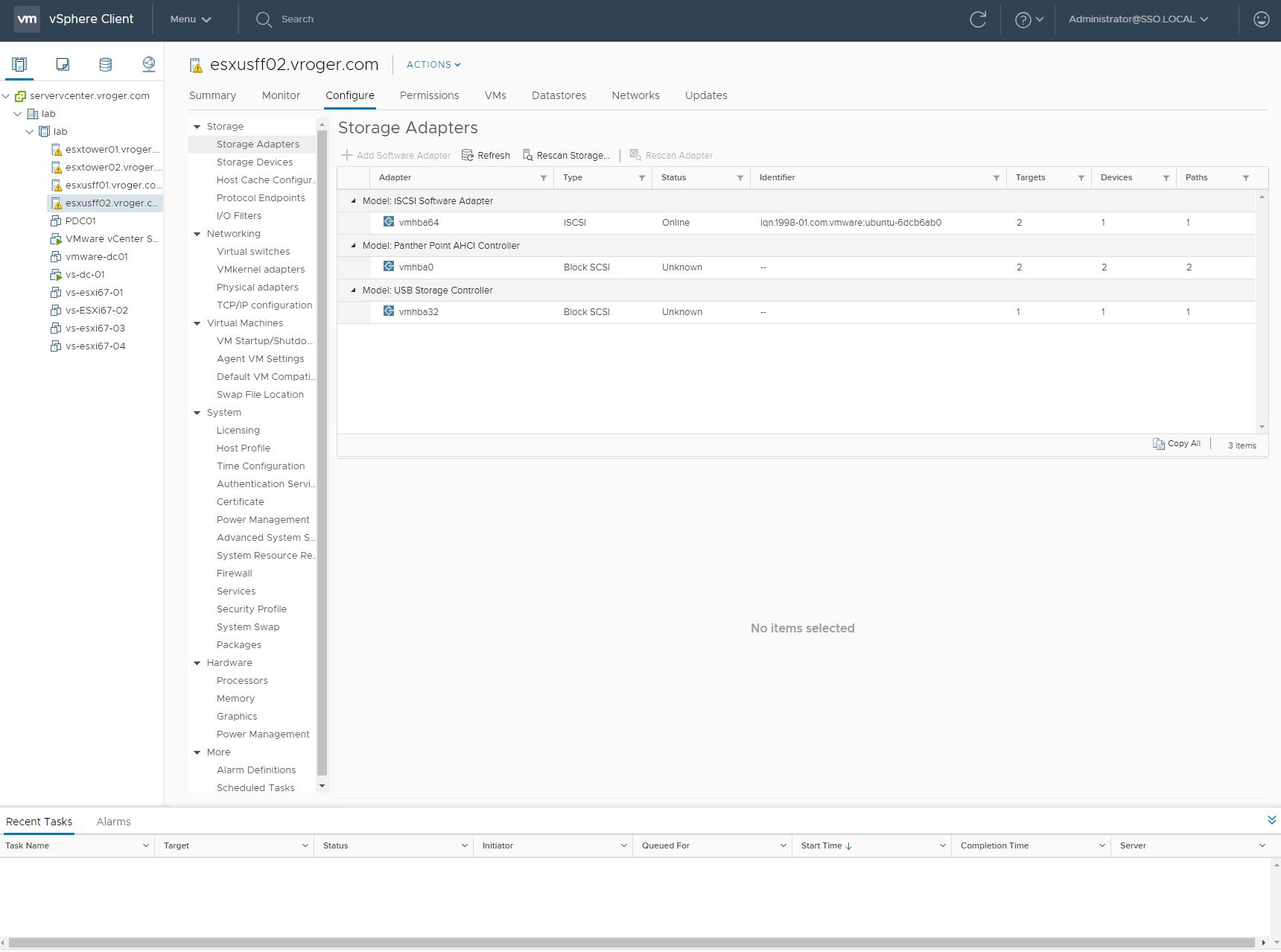

I was able to get a demo of the support at the HP booth during VMworld this week. The vVol objects look a lot like standard 3PAR Virtual Volumes (VVs) on the platform. The two technologies share the same name, which could lead to some confusion, but they behave differently. vVols create individual VM objects on the 3PAR system, using a Common Provisioning Group (CPG) as the container they are created in. 3PAR VVs are the traditional LUNs provisioned from the pool of disks, and they are also carved out of a CPG.

From the 3PAR CLI, vVols look a lot like standard 3PAR VVs. What becomes clear is that vVols will create far more objects on the 3PAR for the storage administrator to manage. Today, a single 3PAR VV (LUN) may hold 10 or 12 virtual machines, and the storage administrator’s view is a single object, the VV.

That changes with vVols, where provisioning a VM in vSphere creates two objects on the 3PAR, a Config- object and a Data- object. In addition, a running VM has at least 5 active objects on the 3PAR, potentially increasing the number of objects in the storage admin’s view 50x or more. A further problem is that names are generated from the Config- or Data- prefix plus an identifier string, which may make correlation with a VM difficult. HP is working to ease the management headaches that a potential 50x increase in objects could cause.
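To see where the 50x figure comes from, here is a quick back-of-the-envelope sketch in Python. It assumes only the numbers quoted above (10 or 12 VMs on one VV today, and at least 5 objects per running VM with vVols); the function name `object_growth` is made up for illustration and is not part of any HP or VMware tool.

```python
def object_growth(vms_per_lun: int, objects_per_vm: int) -> int:
    """Count the array objects that replace one LUN once its VMs move to vVols."""
    return vms_per_lun * objects_per_vm


# Today one VV (LUN) holds all of these VMs, so the admin sees 1 object.
# With vVols, each running VM contributes at least 5 objects (a lower bound).
for vms in (10, 12):
    total = object_growth(vms, objects_per_vm=5)
    print(f"{vms} VMs x 5 objects = {total} objects in place of 1 VV")
```

Since 5 objects per VM is a minimum, the real multiplier on a busy array could be higher than this estimate.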

3PAR has implemented separate commands in the 3PAR CLI to handle vVols, such as showvvol instead of the showvv that administrators use today. What’s more, it’s not an all-or-nothing choice: vVols and normal 3PAR VVs can coexist on the same array and on the same vSphere hosts. Even as the object count increases in the 3PAR console, the usable information increases too. Since the objects correspond to an individual VM, it’s easier to use 3PAR’s reporting and CLI to pinpoint performance issues and address them. vVols also unlock native array capabilities at the VM level, like taking array-based snapshots of an individual VM instead of an entire LUN, all from the normal workflow and interface of the vSphere client.

vVol support relies on VMware’s APIs for Storage Awareness (VASA) providers to communicate between vSphere and the storage arrays. According to a presentation by Ivan Iannaccone (@IvanIannaccone), HP has put its VASA provider directly onto the 3PAR controllers, meaning that customers will not need an additional layer of software outside the array to enable vVol support.

I asked a couple of HP folks about replication with vVols, and at this point it is not present in the vSphere implementation. HP says 3PAR is ready to handle replication with vVols, since they are basically the same as LUN virtual volumes on the platform, but VMware will need to deliver support in vSphere first. That also means users with Peer Persistence configurations cannot benefit from vVols until a later time.

To find out more and see vVols on 3PAR in action, check out this older video that Calvin Zito, @HPStorageGuy, posted back in 2012 showing off vVols on 3PAR. In the video, Calvin uses the 3PAR InServ Management Console (IMC) to show the virtual volumes. The demo I saw focused on the 3PAR CLI, but you will get the idea.

The HP Pro 612 is targeted at business users and includes the full range of security offerings that consumer products are missing. Starting at just 2 lbs., the tablet is available with a range of processors, including an Intel i5, offering desktop-quality processing inside a tablet. The Pro 612 includes the HP Sure Start BIOS, which keeps a primary and a backup copy of the BIOS in case of corruption or infection. It also includes a smart card reader and an optional fingerprint reader. It is equipped with LTE and WiFi and features one USB 3.0 port.

This week at HP Discover, HP announced a new Federated Catalyst feature for the StoreOnce family of disk-to-disk backup arrays. The new capability will allow backup administrators to create large Catalyst stores on a StoreOnce, with data striped across all the back-end service sets in the array. Federated Catalyst is available immediately on the StoreOnce B6500 line and will be coming in a few weeks to the StoreOnce B6200 line.

Without backup software to actually use Federated Catalyst, the technology itself doesn’t do much good, so my first question was when Data Protector would support it. The good news is that Data Protector 8 and 9 will both support Federated Catalyst when it becomes generally available with the new 3.11 StoreOnce firmware.

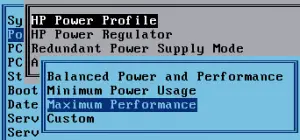

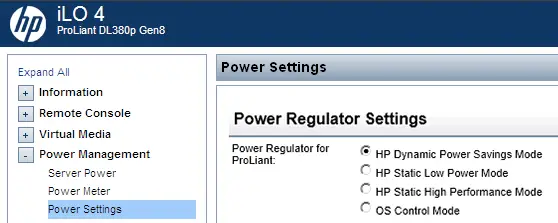

I checked this on my HP ProLiant blade servers and they were set to the default “Balanced Power and Performance.” The label doesn’t sound all that bad, but on further searching, I found that this setting enables dynamic power management within the server. With it enabled, the CPUs seemed to be powering down and then taking additional time to power back up when demand from vSphere increased.

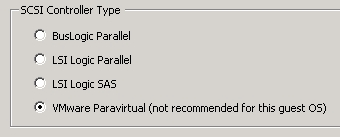

VMware consistently recommends using the Paravirtualized SCSI adapters within guest OSes when running business critical apps on vSphere in all the courses linked above. Paravirtualized SCSI adapters are higher-performance disk controllers that allow for better throughput and lower CPU utilization in guest OSes according to

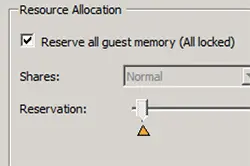

VMware recommends reserving the entire memory allotment for virtual machines running business critical apps. This ensures there is no memory contention where high-performance applications are concerned. vSphere 5 and higher, with virtual hardware version 8 or higher, has a checkbox that reserves the entire allotment of vRAM, even as allocations change.