SQL Server is ready to take a ride directly on Microsoft’s Storage Spaces Direct (S2D), its own software-defined storage (SDS) platform. This is not news (Microsoft has been talking about SQL on S2D since 2015), but new NVMe product offerings are expanding what very high-performance SDS architectures can do.

NVMe has evolved, and with the arrival of new technologies like Intel’s Optane storage class memory drives and expanding capacities like the Intel P4800 series, building high-performance software-defined storage is getting much easier. Storage class memory in turn provides another opportunity for acceleration beyond what current NVMe drives can do today.

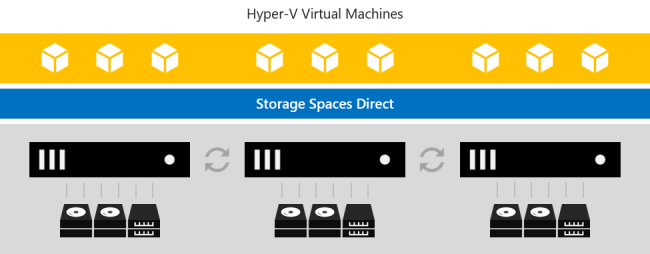

While Microsoft has been busy building Storage Spaces Direct as a hyper-converged, software-defined storage platform for its Hyper-V hypervisor, it has also built a robust replicating file system suitable for SQL Server and even highly available File Services platforms.

Storage vendors are starting to introduce Optane storage class memory as a caching layer, with HPE announcing this for its 3PAR and Nimble lines last week. Pure Storage and NetApp are also busy working with storage class memory for their products and integrating NVMe into already shipping products.

Microsoft, for its part, has been busy building out support for its Storage Spaces Direct feature, which pools direct-attached storage into a software SAN within Windows Server 2016. About two years ago, during Microsoft Ignite 2016, Microsoft announced support for SQL Server 2016 on Storage Spaces Direct. While the idea didn’t catch on initially, the use of NVMe and even storage class memory with Storage Spaces Direct may drive adoption for uses other than Hyper-V in many environments.

Storage Spaces Direct offers benefits and flexibility that traditional storage arrays do not. While storage vendors are busy trying to establish ways to handle NVMe over Fabrics, Storage Spaces Direct and its use of RDMA have already solved some of these issues.

NVMe bypasses the traditional SCSI storage protocol and uses its own. In a lot of use cases, it is not ready for prime time because it lacks robust features such as multipathing and bus sharing. But under an SDS solution, NVMe can shine.

The rise of high-speed NVMe drives has given rise to all sorts of inventive ways to use them. Hyperconverged architectures have been using NVMe for acceleration in their solutions for many years now, but with NVMe capacities expanding, 2019 is going to be the year primary storage shifts onto NVMe drives. Because of the added cost, however, you won’t see a mass-market shift, but you’ll see the highest-value workloads exploring ways to make NVMe their primary storage. I expect a lot of bare-bones arrays to embrace NVMe, rather than the big, traditional storage arrays whose data services (like compression and deduplication) would slow things down.