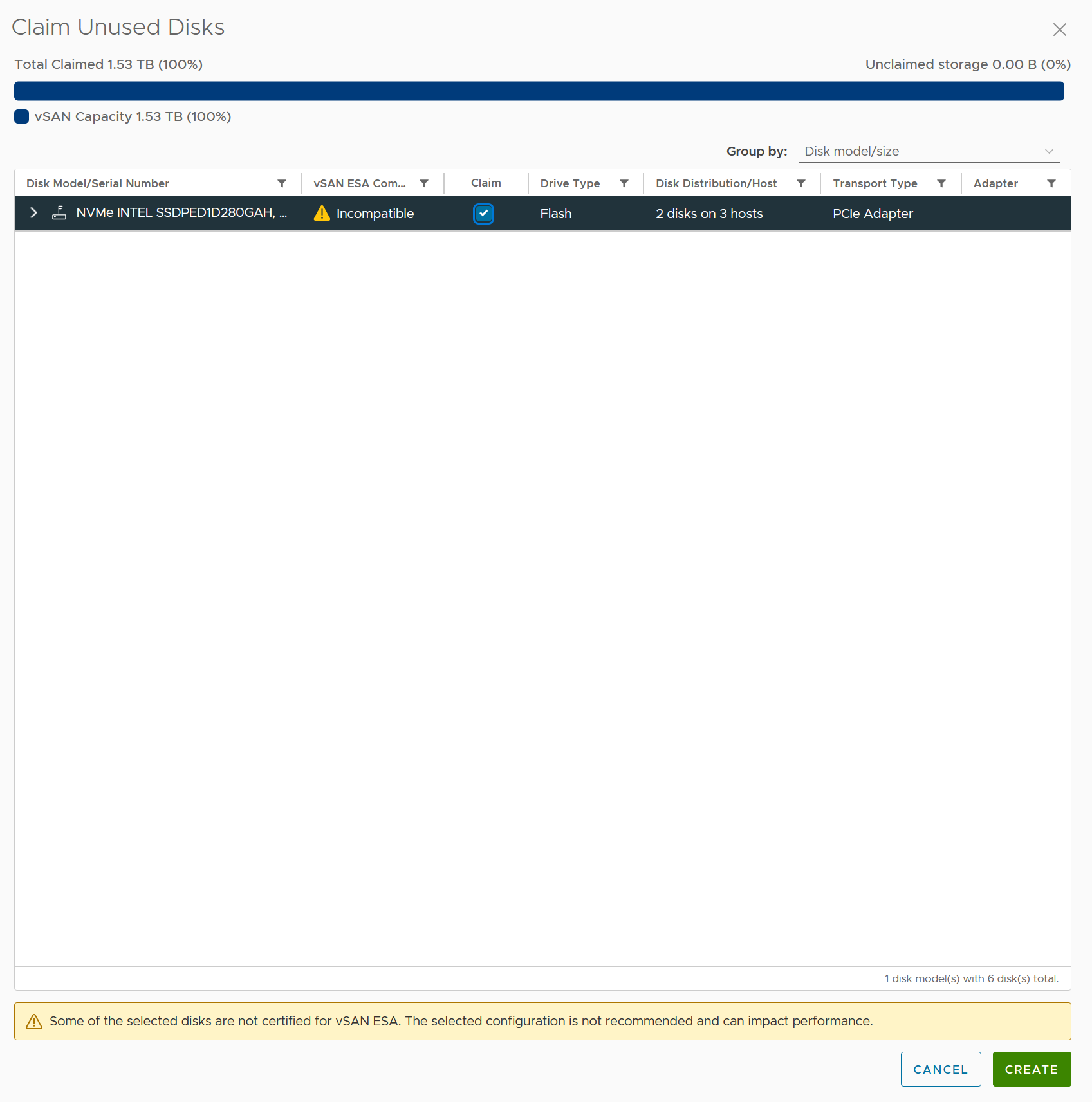

Intel was kind enough to supply me with Intel Optane 900P drives for my lab. As you may know, I am part of the VMware vExpert program, which makes things like this possible.

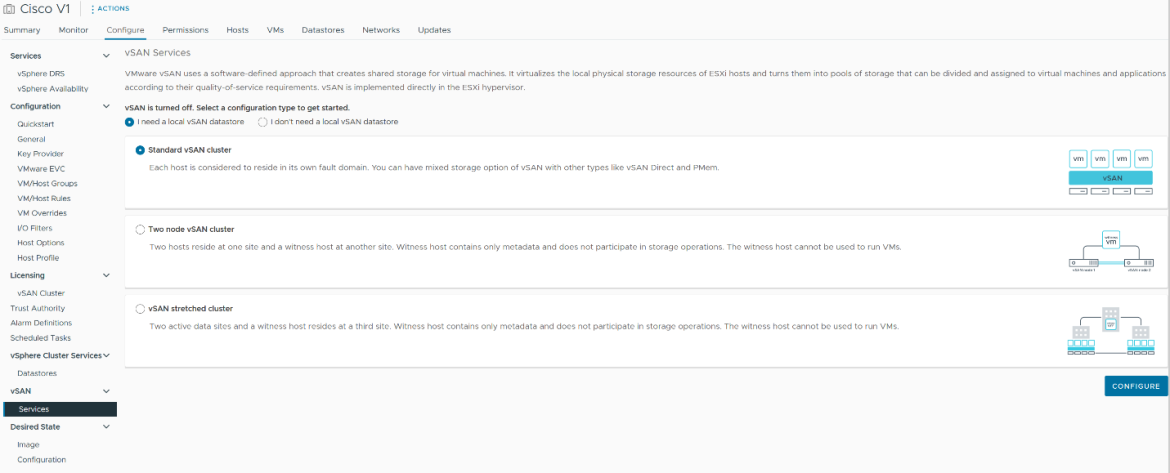

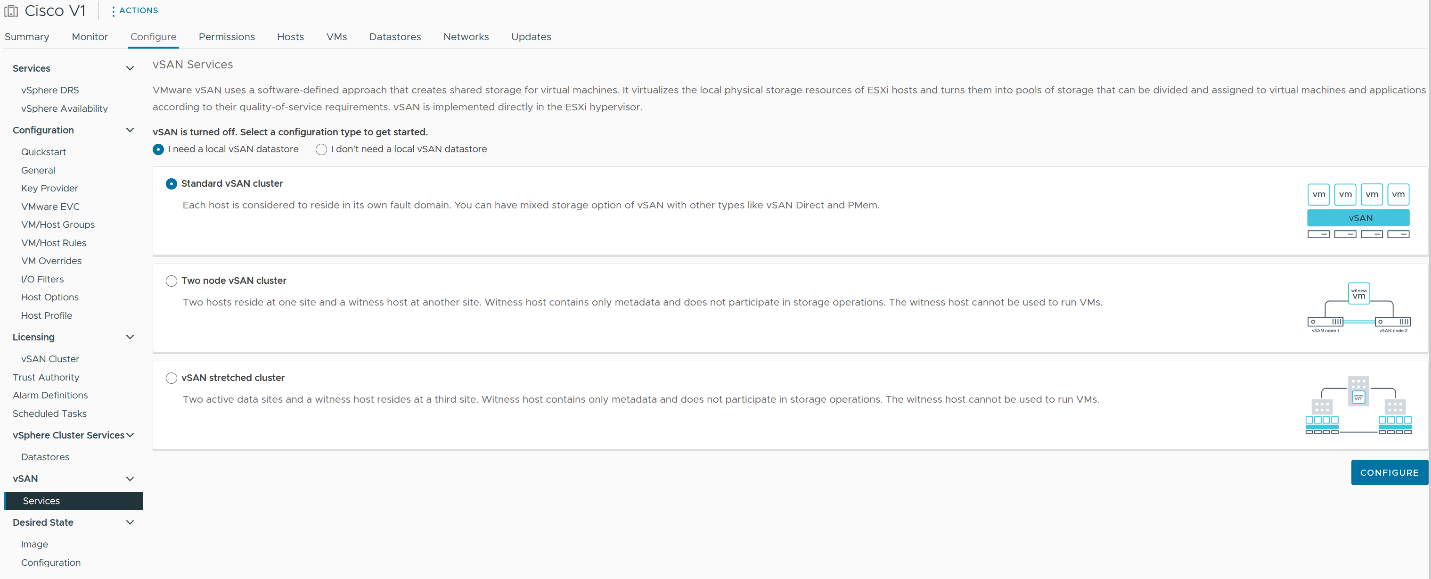

So naturally, the first thing I want to do is configure the vSAN Express Storage Architecture (ESA). https://core.vmware.com/vsan-esa

Since AI is on our minds, I thought I would ask it to give us an overview and do an intro. Of course, I used our favorite, ChatGPT (GPT-4).

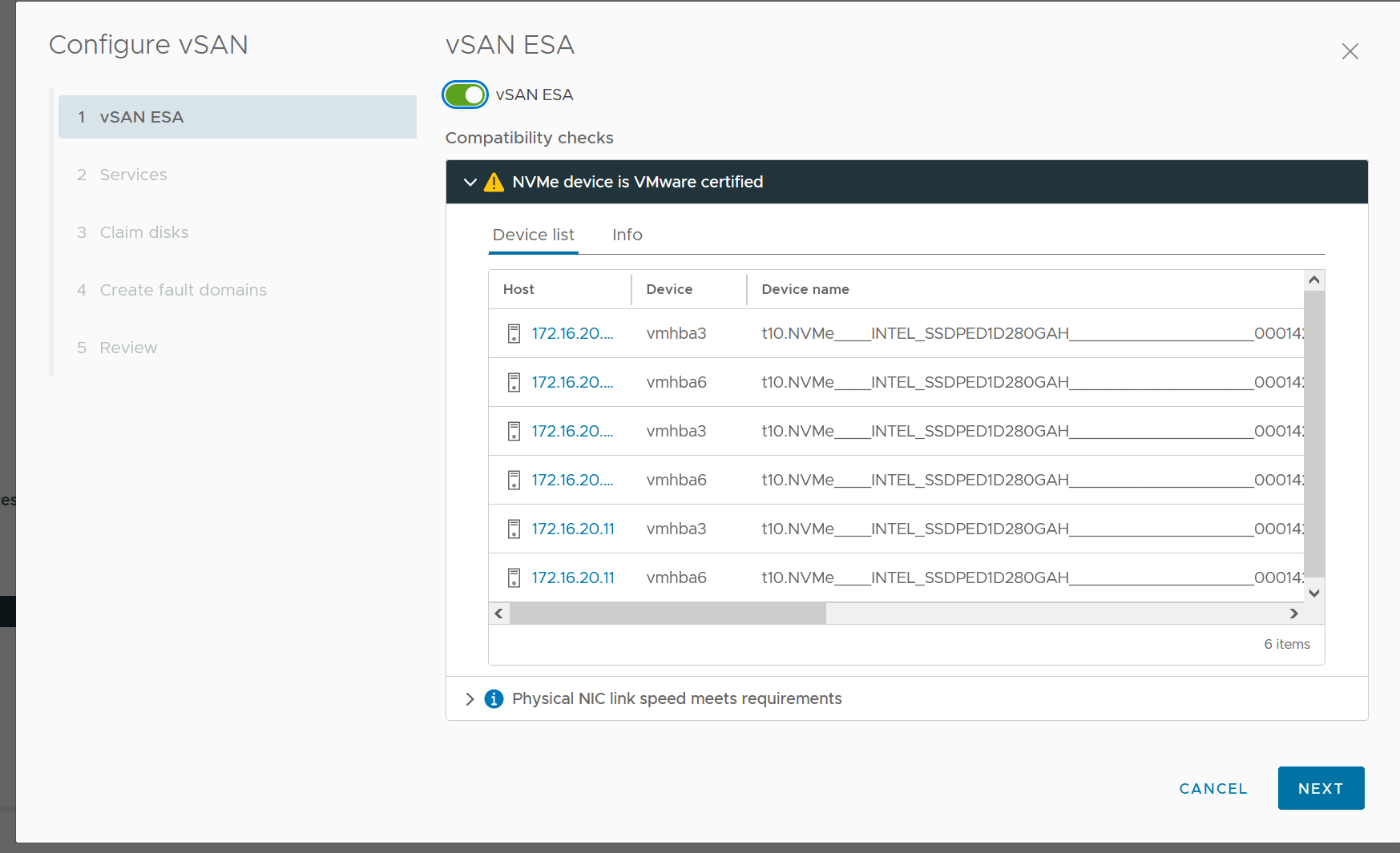

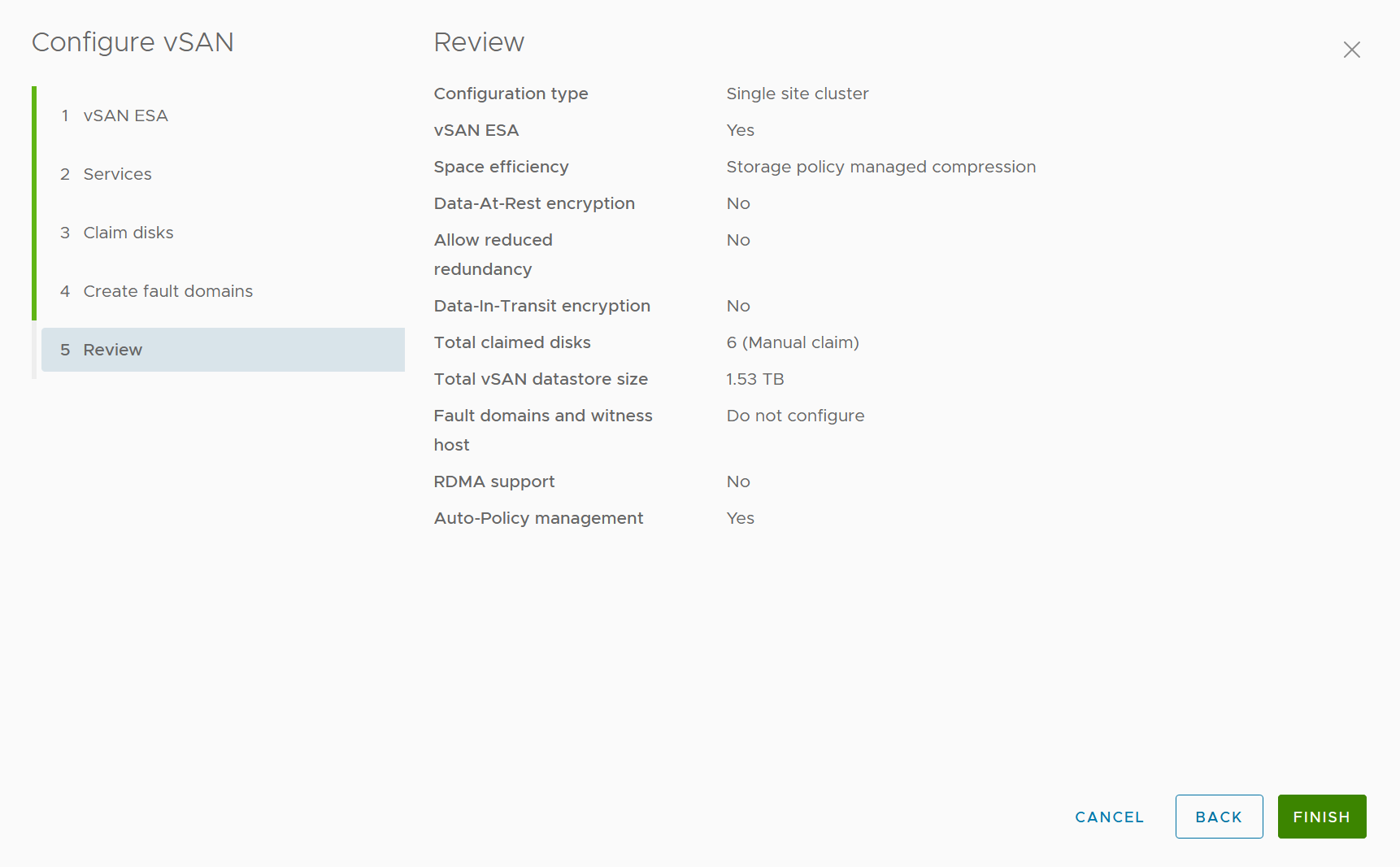

This was done with VMware vSphere 8 Update 1.

“

Greetings, dear readers! As an artificial intelligence developed by OpenAI, known as ChatGPT, I’m here to introduce you to a fascinating subject that’s changing the game in data storage and management: VMware’s Express Storage Architecture (ESA), especially in the context of the recently released vSAN 8 U1. This article has been commissioned to provide you with an understanding of ESA and to walk you through setting it up with Intel Optane PCIE 900P’s in Cisco UCS Hosts.

VMware’s vSAN 8 U1 marks a significant advancement in data storage with its introduction of ESA. This development enhances dis-aggregated storage, a robust and successful method for customers to share vSAN storage resources with other vSAN clusters or compute-only clusters. With the incorporation of ESA, users can now mount remote vSAN datastores housed in different vSAN clusters, leveraging an ESA cluster as an external storage resource for a vSphere cluster. All this while maintaining the ease of use that customers have come to appreciate from vSAN’s Original Storage Architecture (OSA).

Another salient feature of vSAN 8 U1 is the support for vSAN stretched clusters with disaggregation in vSAN, made possible by the OSA. This feature optimizes network paths for specific topologies, leading to improved performance capabilities in stretched cluster configurations.

Lastly, vSAN 8 U1 allows for dis-aggregation across clusters managed by different vCenter server instances, offering a level of scalability and flexibility previously unattainable.

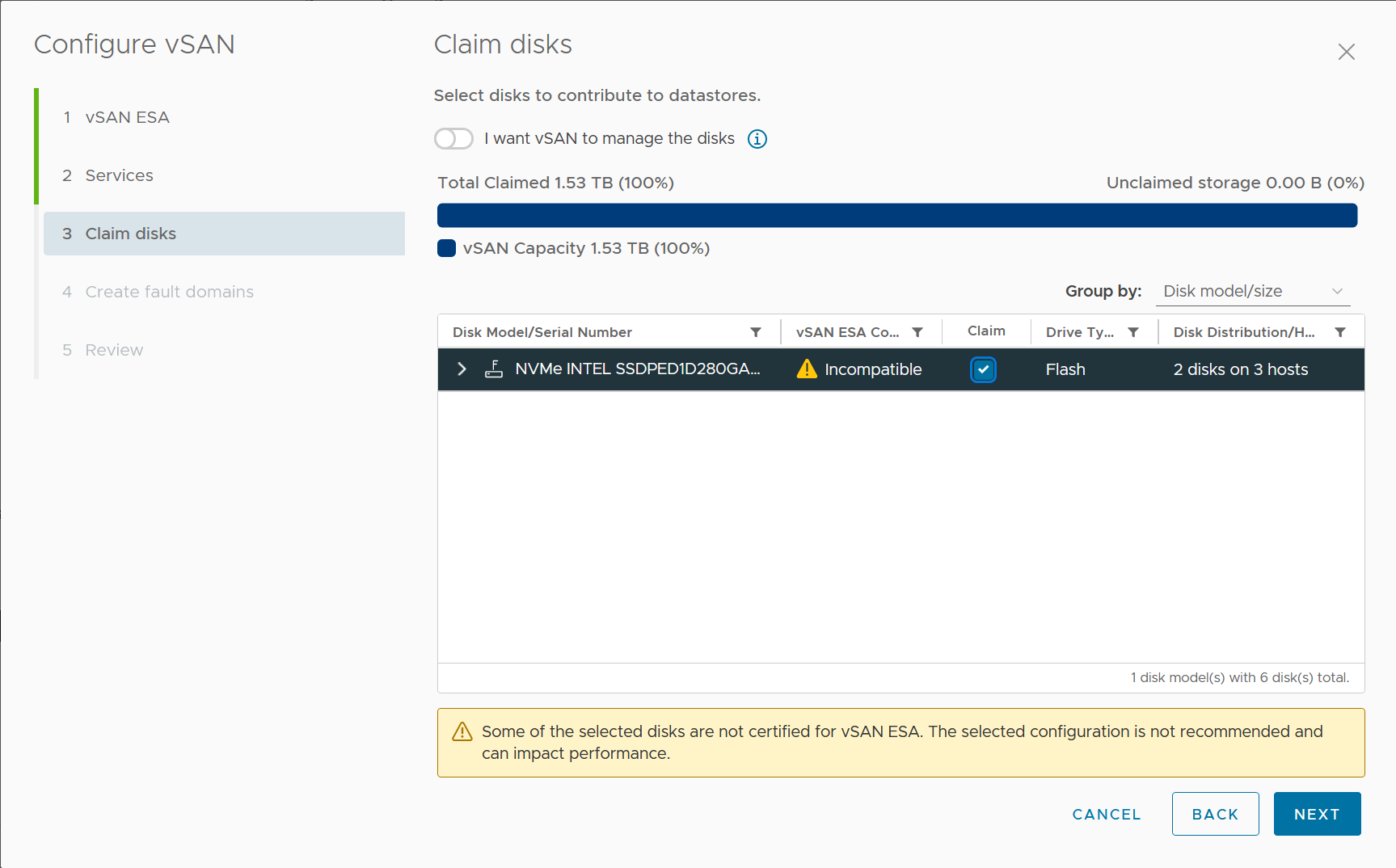

However, this high-level overview only scratches the surface of what VMware’s Express Storage Architecture truly brings to the table. The meat of our exploration lies in how we can set up ESA using Intel Optane PCIE 900P’s in Cisco UCS Hosts, and that’s what we’ll focus on in the upcoming parts of this article.

So stay tuned, and together we’ll deep dive into the nuts and bolts of this innovative storage solution, exploring the possibilities and benefits it can bring to your organization’s IT infrastructure.

“

I then asked the following.

“now give a overview of how this ESA behaves differently than vSAN in lets say vsphere 7.x in this scenario. 3 UCS C220’s with 2 Optane drives each.”

“

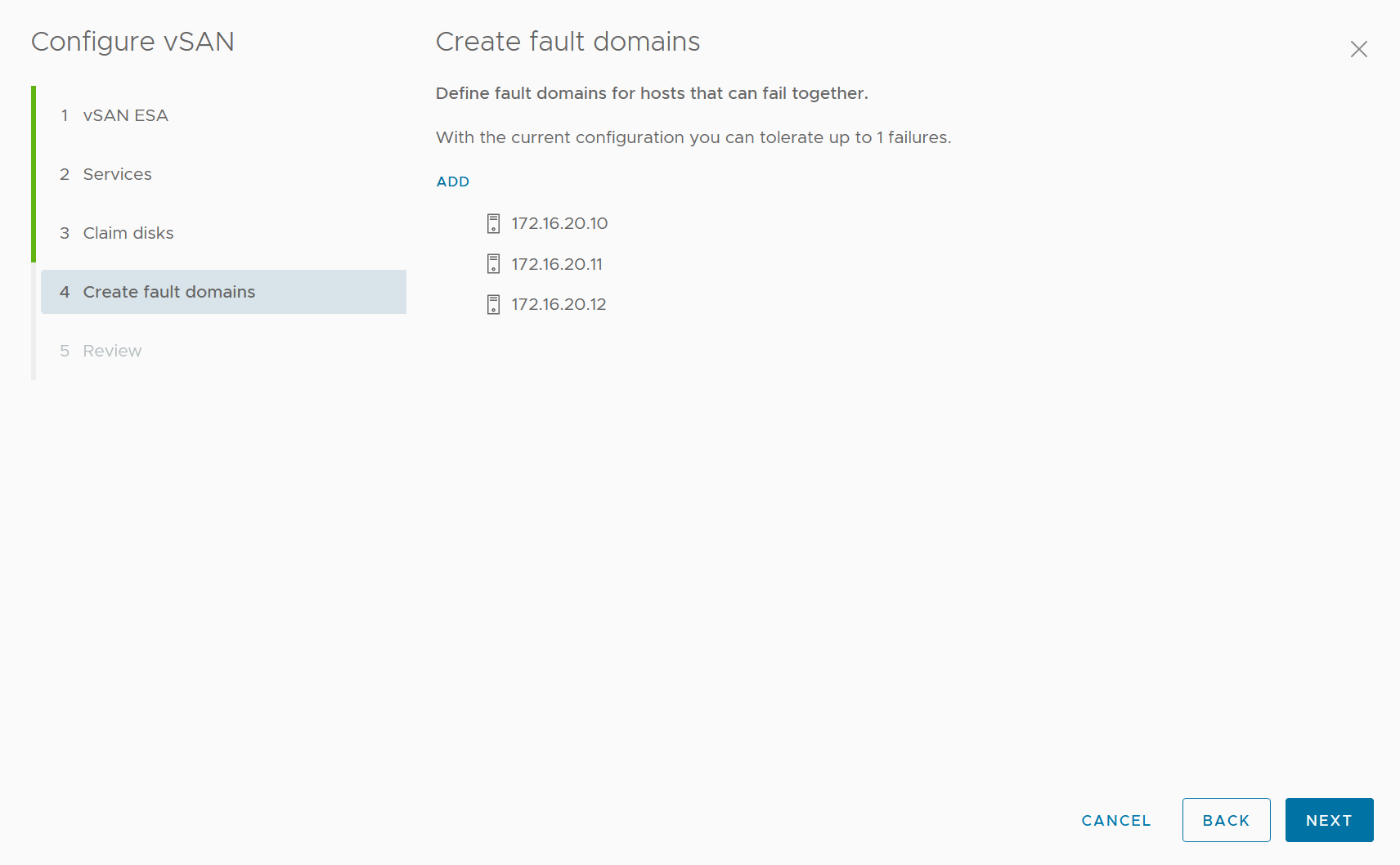

VMware’s Express Storage Architecture (ESA) introduced in vSAN 8 U1 brings notable changes in the way vSAN manages and operates storage, especially compared to previous vSAN versions like those integrated into vSphere 7.x. For the purposes of this overview, let’s consider a scenario where we have three Cisco UCS C220 hosts, each equipped with two Intel Optane drives.

In the traditional vSAN environment you might have encountered in vSphere 7.x, storage resources are pooled together across the cluster, with each host contributing storage to the vSAN datastore. vSAN then handles the distribution and management of this storage resource, providing the illusion of a single, unified datastore. This distributed nature of vSAN allows for high resilience, as data is typically mirrored or erasure-coded across multiple hosts. However, this traditional architecture does not readily support the sharing of vSAN storage resources with other vSAN clusters or compute-only clusters.

Enter VMware’s Express Storage Architecture in vSAN 8 U1. ESA introduces the capability of storage dis-aggregation, allowing the vSAN storage resources to be shared with other vSAN clusters or even with compute-only clusters. So in our scenario, if one of the UCS C220’s with its pair of Optane drives was part of a separate vSAN cluster (or was a compute-only cluster), it could still access and use the storage resources of the other two hosts via ESA. This results in enhanced flexibility and utilization of storage resources.

ESA also supports dis-aggregation in vSAN in both standard and stretched cluster configurations, although some limitations exist. While it brings added capabilities, there are features such as cross-vCenter Server capabilities, support for stretched clusters, and de-duplication that are not currently supported in ESA but are part of the traditional vSAN architecture.

The integration of Intel Optane drives plays a crucial role in both these architectures. In vSAN 7.x, Optane drives can be used as cache to dramatically improve the performance of the vSAN cluster. In vSAN 8 U1 with ESA, the same drives can still be used for caching, but they can also be used more flexibly because of the dis-aggregation feature. This means the performance benefits of the Optane drives can be enjoyed by all clusters with access to the dis-aggregated storage.

In conclusion, while the traditional vSAN as found in vSphere 7.x provides a solid and robust storage platform, the introduction of ESA in vSAN 8 U1 brings a level of flexibility and interoperability that was not previously possible, opening up new ways to maximize your infrastructure’s efficiency and performance.

“

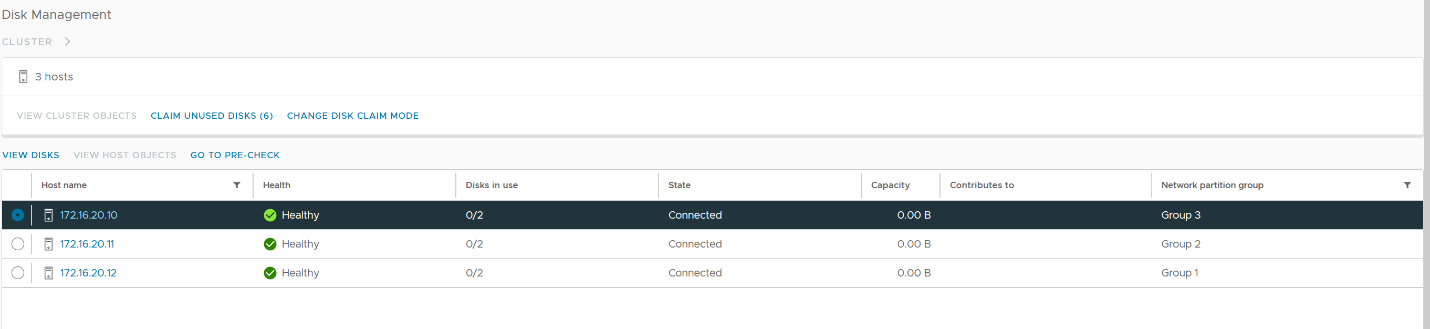

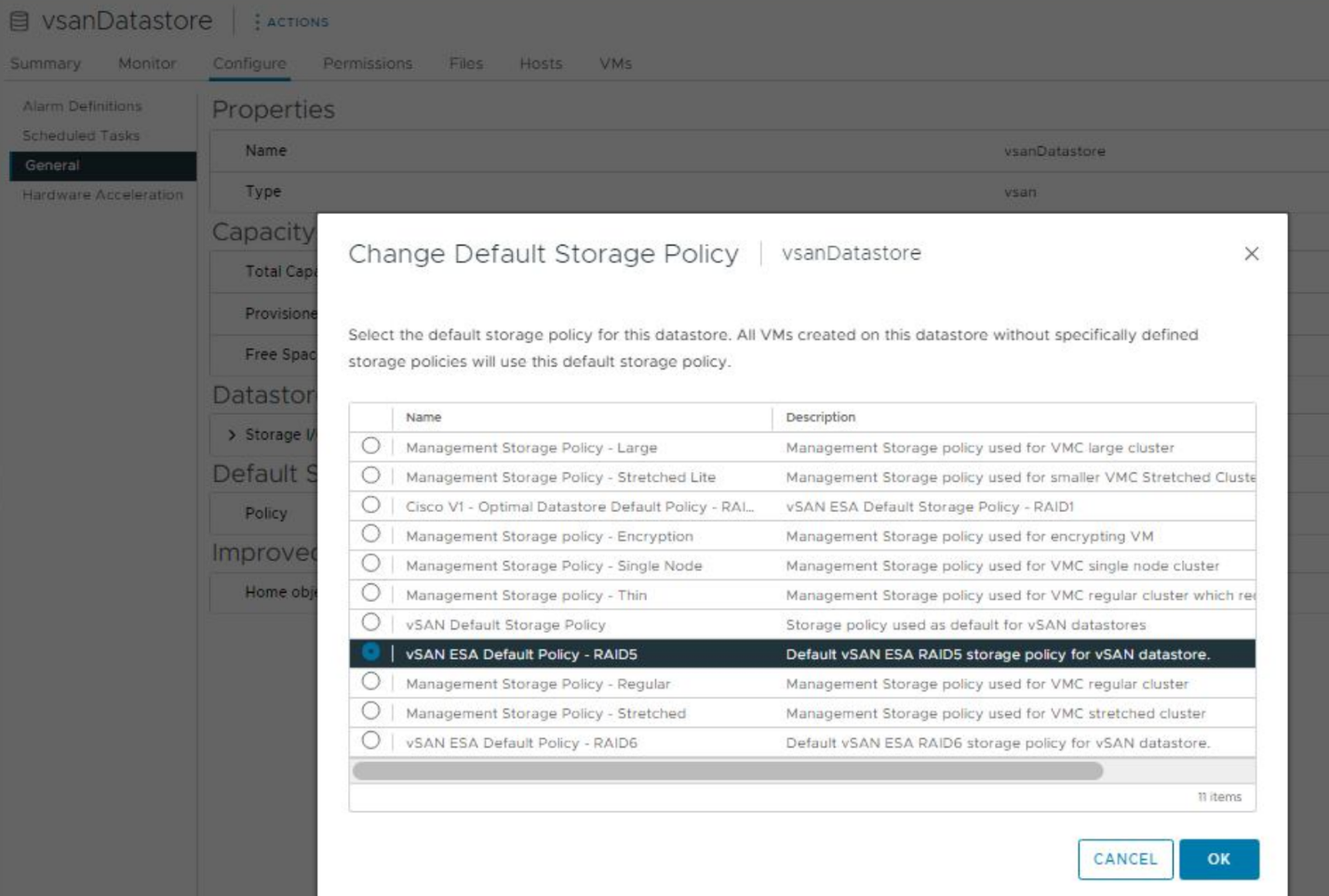

Next I go to the datastore and change the storage policy.

Why? Let’s see what ChatGPT says about it.

I asked “I set the storage policy to ESA Default Raid 5, explain how it differences from vSAN of old.”

“

The Express Storage Architecture (ESA) in VMware vSAN 8 has introduced significant changes to how RAID-5 is implemented and managed compared to previous versions of vSAN. This new architecture aims to provide improved performance, space efficiency, and adaptability. Here are the key differences when you set the storage policy to ESA Default Raid 5 compared to the traditional vSAN:

Performance and Space Efficiency: In previous versions of vSAN, using RAID-5 resulted in some performance trade-offs due to the computational complexity of erasure coding, despite its better space efficiency compared to RAID-1. With vSAN 8’s ESA, RAID-5 can now deliver the same level of performance as RAID-1, meaning you get RAID-1-like performance with the space efficiency of RAID-5. This is made possible by ESA’s log-structured file system and optimized data structure.

Erasure Coding Schemes: The traditional vSAN used a 3+1 RAID-5 erasure coding scheme, where data was written as a stripe consisting of 3 data bits and 1 parity bit, across at least 4 hosts. The ESA in vSAN 8 replaces this with two new RAID-5 erasure codes – a 4+1 scheme (4 data bits and 1 parity bit across at least 5 hosts) and a 2+1 scheme (2 data bits and 1 parity bit across at least 3 hosts). These new schemes offer better space efficiency, especially for smaller clusters.

Adaptive RAID-5: vSAN 8’s ESA introduces an adaptive RAID-5 feature that automatically adjusts the RAID-5 erasure code based on the host count in the cluster. This means the system will optimize the RAID-5 scheme for your current configuration without requiring manual intervention. This is a key improvement in terms of ease of management and ensuring optimal data resilience.

Data Resilience: The ESA Default Raid 5 provides better resilience in face of host failure. When a host fails, the system automatically adjusts to maintain data availability while also keeping the prescribed level of resilience.

Overall, these differences mean that when you set the storage policy to ESA Default Raid 5 in vSAN 8, you’re using a much more space-efficient, resilient, and performance-optimized version of RAID-5 compared to previous versions of vSAN. This all translates into a higher effective storage capacity, better performance, and simpler management for your vSAN clusters.

“
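To put numbers on the space-efficiency claims above, here is a minimal sketch of the raw-capacity arithmetic for each layout. The stripe widths (3+1, 2+1, 4+1) come from the answer above; the helper name `raw_per_usable` is my own and purely illustrative, and a two-copy mirror is assumed for the RAID-1 comparison.

```python
from fractions import Fraction


def raw_per_usable(data: int, parity: int) -> Fraction:
    """Raw capacity consumed per unit of usable data for a data+parity stripe."""
    return Fraction(data + parity, data)


# Layouts mentioned in the text, plus a two-copy mirror for comparison (assumption).
schemes = {
    "RAID-1 mirror, 2 copies": Fraction(2, 1),
    "RAID-5 3+1 (OSA)": raw_per_usable(3, 1),
    "RAID-5 2+1 (ESA)": raw_per_usable(2, 1),
    "RAID-5 4+1 (ESA)": raw_per_usable(4, 1),
}

for name, factor in schemes.items():
    # Example: a factor of 1.50 means 100 GB of VM data consumes 150 GB raw.
    print(f"{name}: {float(factor):.2f}x raw per usable")
```

By this arithmetic, 2+1 costs 1.5x raw capacity, which is more than the old 3+1 layout at about 1.33x but well under the 2x of a mirror, and it fits a three-host cluster like this one. The 4+1 layout drops to 1.25x on larger clusters.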

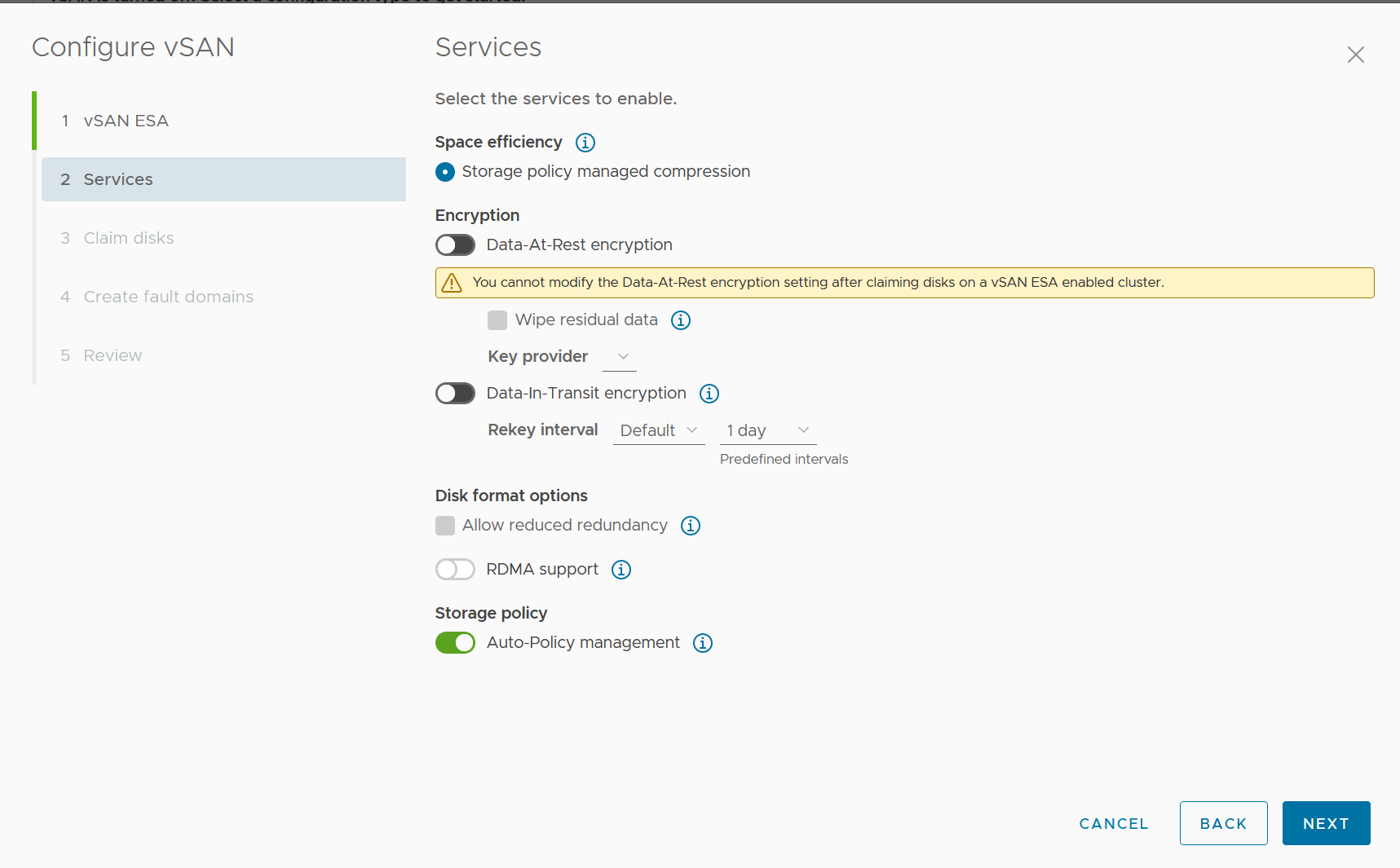

Let’s go ahead and set it!

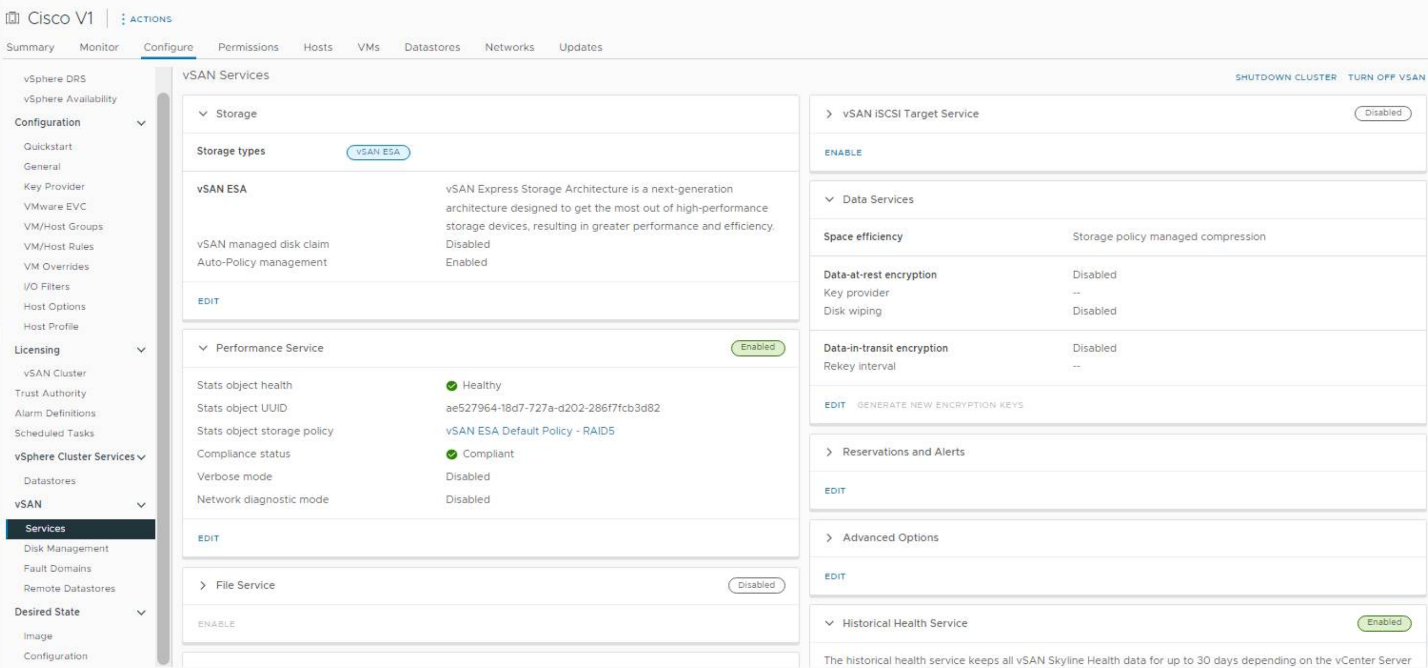

If we go to the vSAN services, we can see it now has the policy.

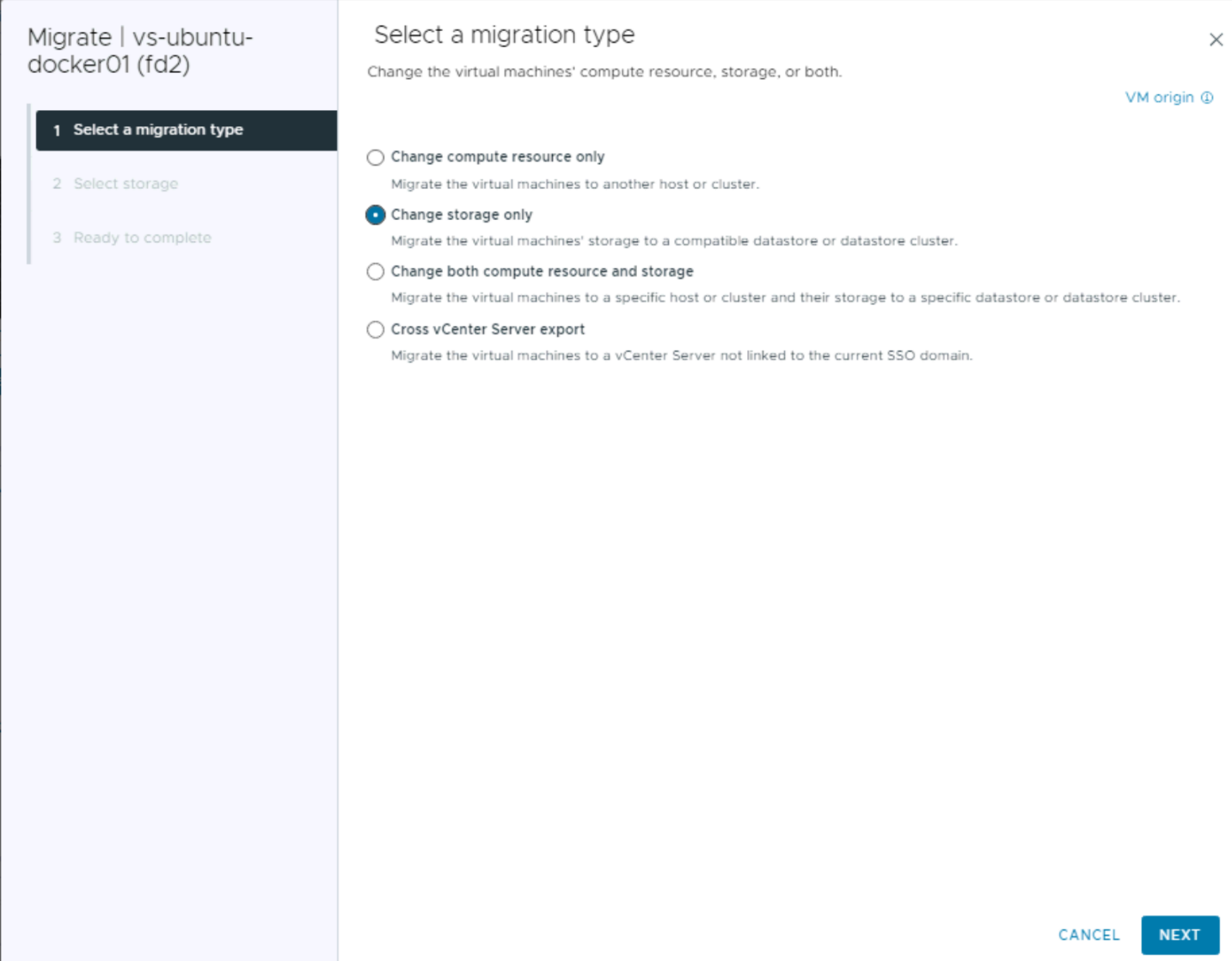

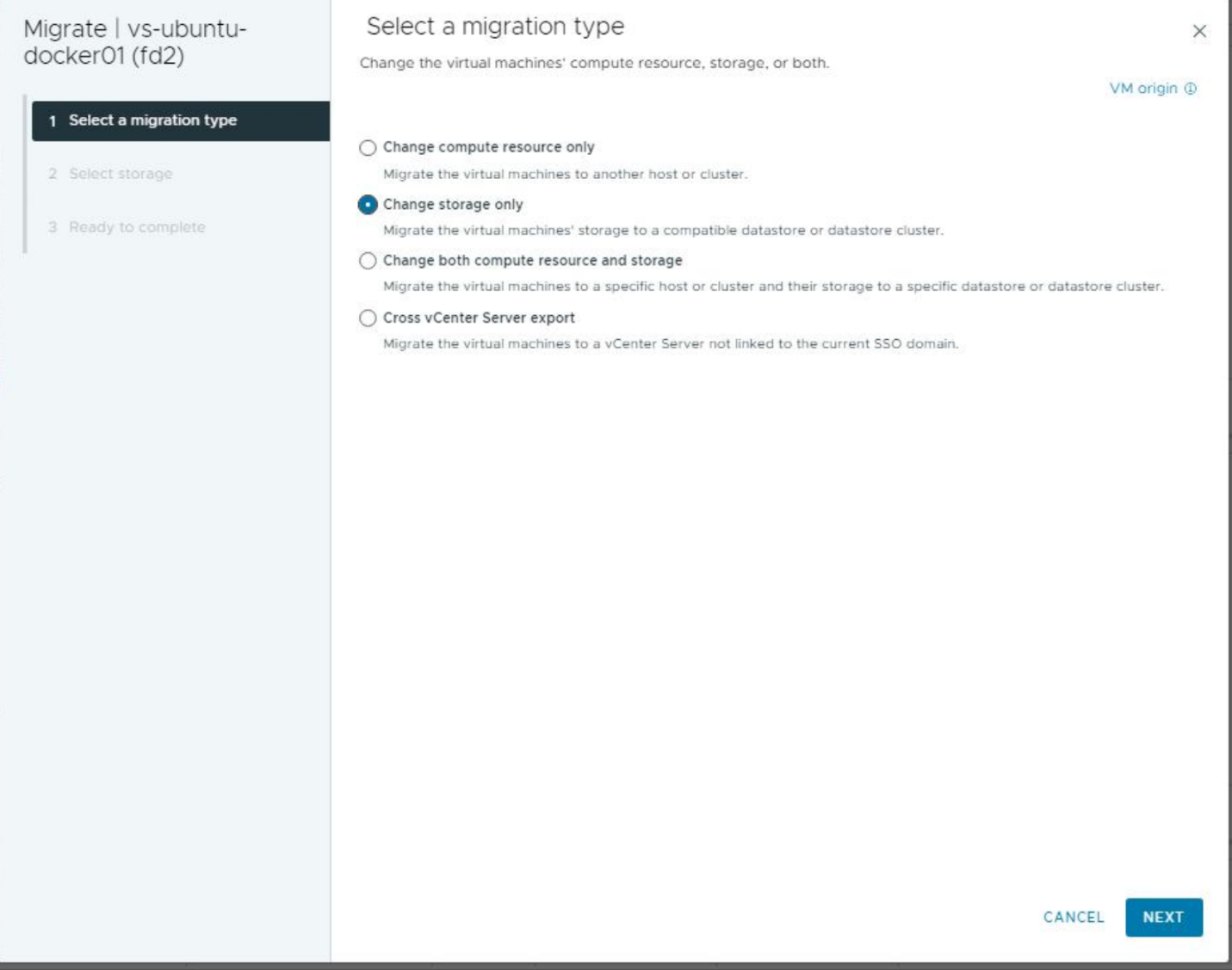

Next we are going to migrate a VM to the new datastore.

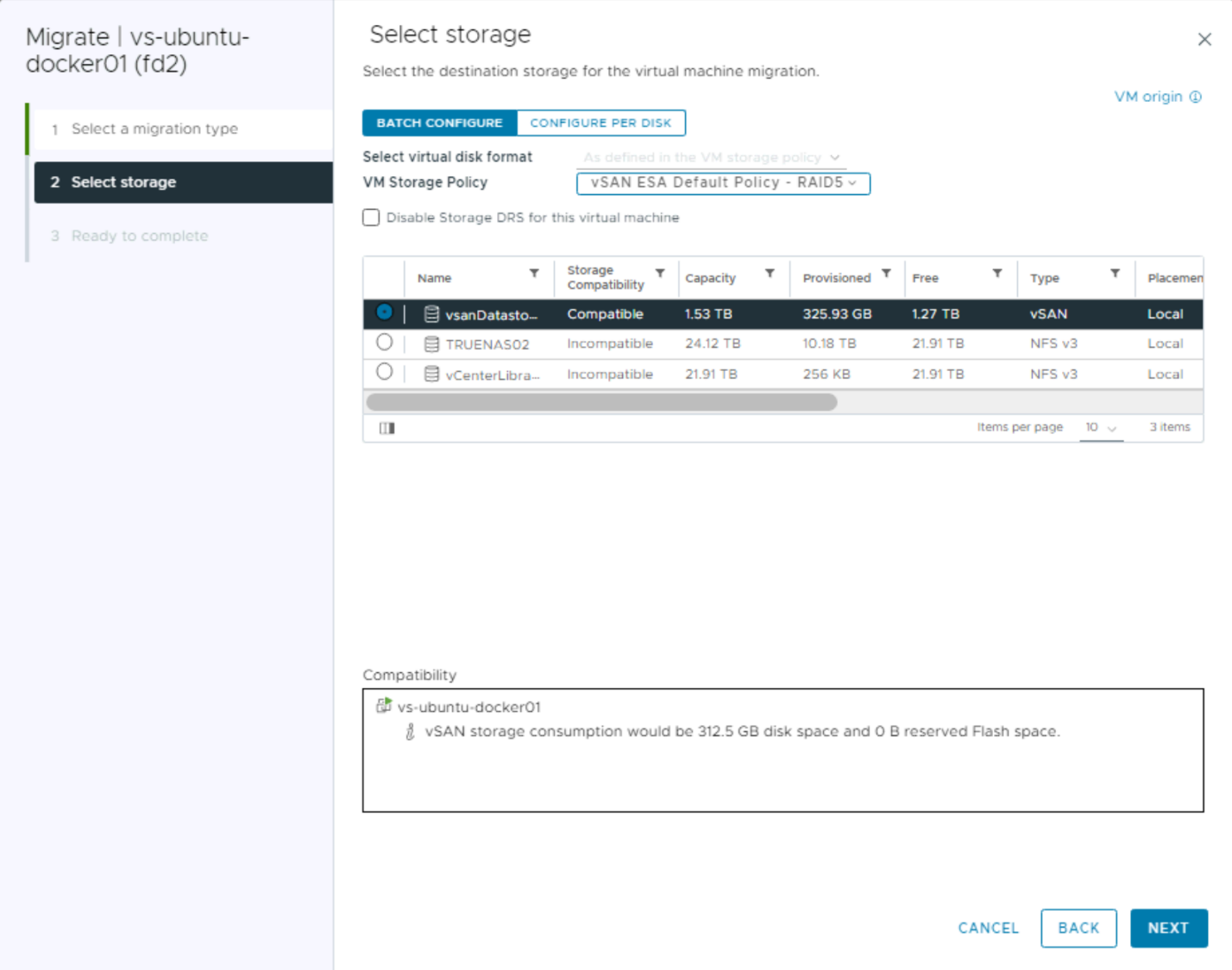

Under VM Storage Policy, select the policy and the vsanDatastore.

Click Next.

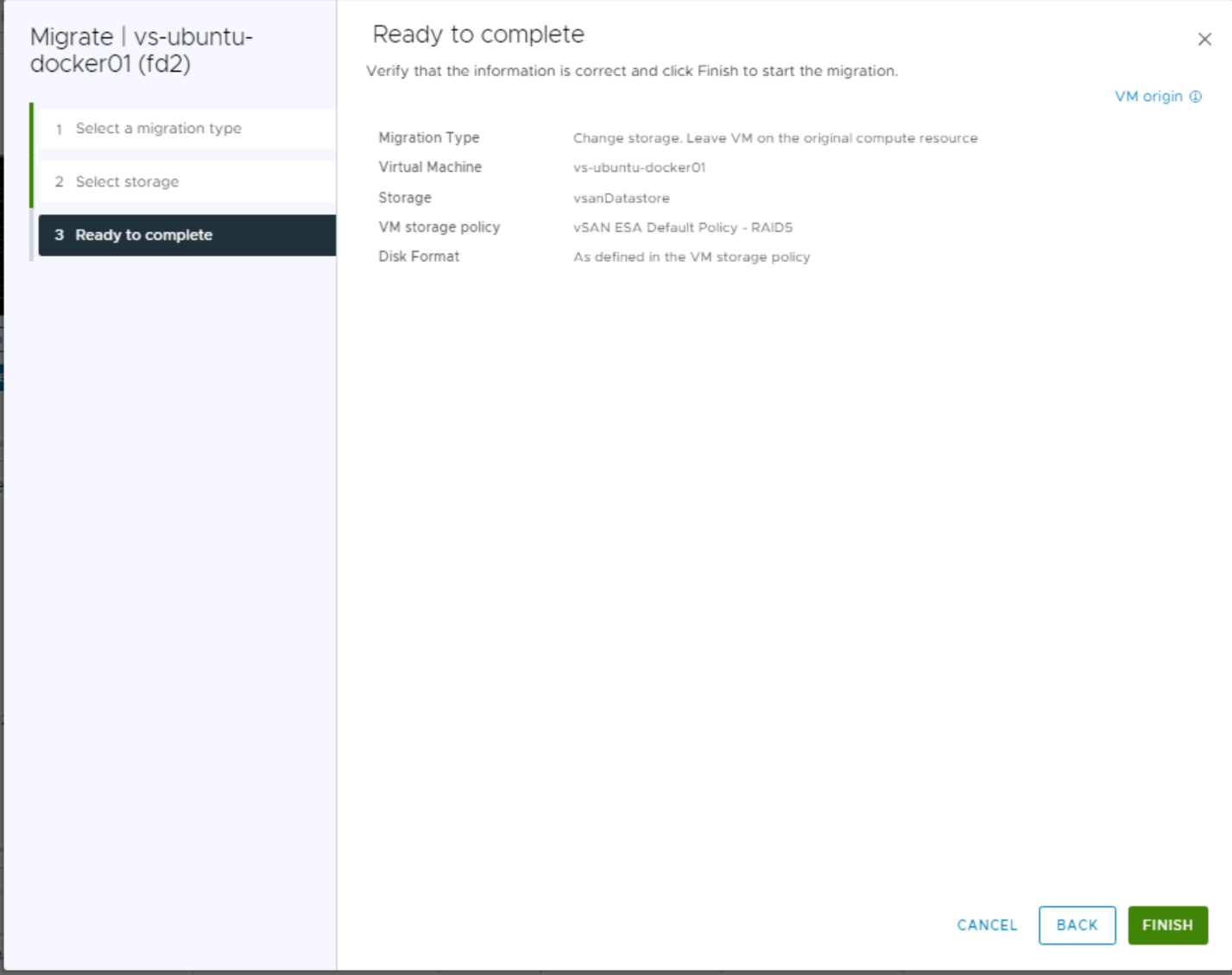

Click Finish.

Let’s go ahead and migrate a VM to it.

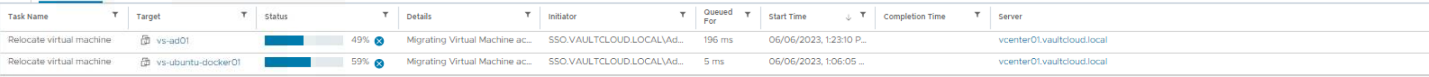

To show more movement, I queued another VM to migrate.

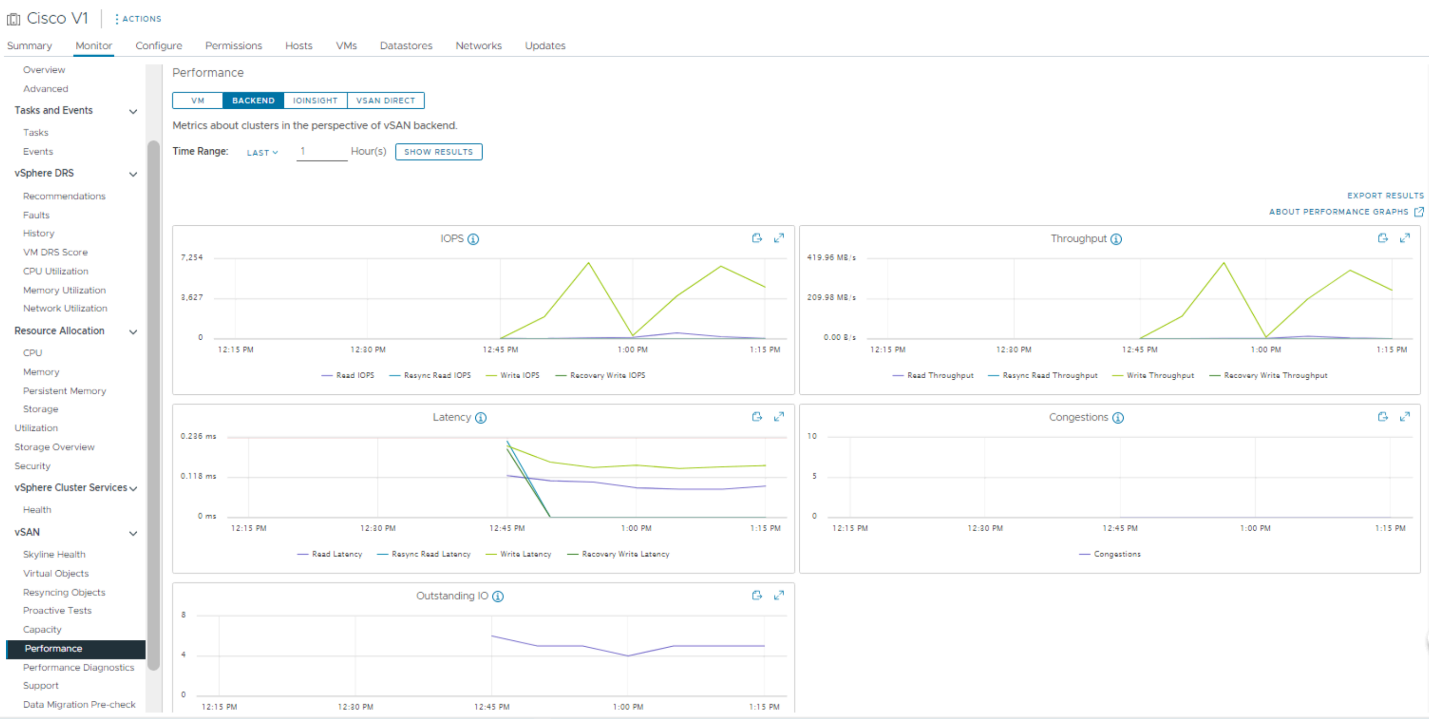

OK, let’s click on the cluster, then Monitor, vSAN, Performance, and click Backend.

We can see some traffic.

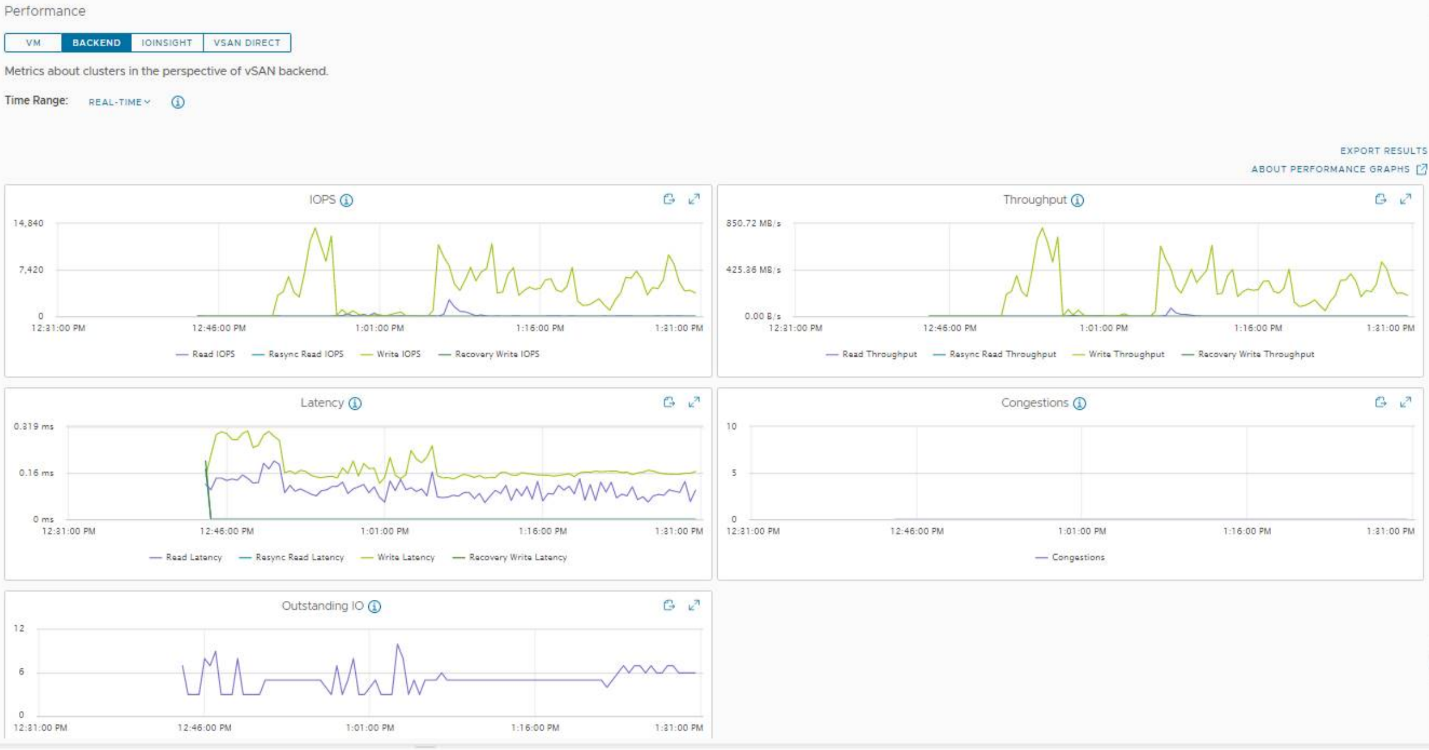

Let’s change to real time, under the drop-down.

To note, I only have a single 10GbE link per host until I can run some more cabling, and I am migrating from a hybrid TrueNAS array.
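For a rough sense of what a single 10 Gb/s link means for migration time, here is a back-of-the-envelope sketch. The 100 GB VM size and the `efficiency` factor are hypothetical values for illustration, not measurements from this lab, and the source array may well be the real bottleneck rather than the network.

```python
def transfer_seconds(size_gb: float, link_gbps: float = 10.0, efficiency: float = 1.0) -> float:
    """Seconds to move size_gb gigabytes over a link of link_gbps gigabits per second.

    efficiency is an assumed fraction of line rate actually achieved (1.0 = ideal).
    """
    bytes_per_second = link_gbps * 1e9 / 8 * efficiency
    return size_gb * 1e9 / bytes_per_second


# Hypothetical 100 GB VM at ideal line rate, then at an assumed 50% of line rate.
print(f"ideal:  {transfer_seconds(100):.0f} s")
print(f"at 50%: {transfer_seconds(100, efficiency=0.5):.0f} s")
```

At line rate that is 1.25 GB/s, so the ideal figure is a floor; real migrations will take longer.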

More to come on this front for sure!