DobiMigrate Ensures Data Security and Integrity Objectives Met During Migration; Cuts Months Off Project Timeline

Datadobi, the global leader in unstructured data management software, today announced that the CBX agency has migrated its entire distributed data storage infrastructure with DobiMigrate enterprise-class migration software for network-attached storage (NAS) and object data. In doing so, CBX now enjoys expanded features and functionality, including greatly enhanced data security and support for cloud tiering.

Recently, the NetApp data storage platform and associated software on which CBX had long relied reached end-of-life. This meant that no additional firmware, patches, or upgrades would be made available. In addition, warranty support would soon come to an end. CBX decided that its best option would be to remain with NetApp and upgrade to the vendor’s newest platform. In doing so, it would not only remain with a brand it trusted but would also benefit from increased capabilities and heightened security. With this decision made, the next critical consideration was how best to migrate the NAS data from its existing data storage environments to the new one. The data migration would involve the entirety of CBX’s client and internal business production data housed across two geographically dispersed locations in Minneapolis and New York City.

CBX reached out to its technology vendor, SHI, for a recommendation on how best to proceed. SHI highly recommended Datadobi and its DobiMigrate enterprise-class migration software for NAS and object data.

“SHI assured us that DobiMigrate could be trusted to deliver in the most complex and demanding environments, while ensuring that we meet our data security and integrity objectives for migration to our new storage,” said Don Piraino, IT Manager, CBX. “The fact that Datadobi is also vendor-agnostic and has the ability to move data from any vendor’s platform to another heterogeneous platform on prem, remote, or in the cloud added to my comfort level. It is nice knowing that I am not locked into any one vendor. But more importantly for me, this spoke to Datadobi’s industry unique experience managing diverse migrations across many different platforms and environments.”

“We couldn’t allow even a moment of downtime or loss of data availability of any kind. The migration involved every single piece of CBX data – from client to CBX business data, spread across two geographically dispersed locations,” Piraino explained. “Typically, we use NetApp SnapMirror, but we knew it wasn’t the answer for this level of a migration. With DobiMigrate, we finished in weeks, rather than in months, with not even one hiccup.”

When asked what advice he would offer others contemplating an enterprise-class data migration, Piraino replied, “We had a Datadobi Implementation Specialist dedicated to ensuring the success of our migration initiative… Having that level of expertise was invaluable. Nothing happened without Datadobi making sure it was being done correctly. It was such a load off my mind.”

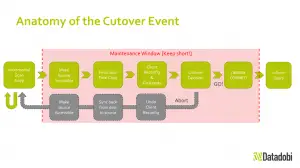

Piraino concluded, “The final cutover was really smooth – with absolutely zero impact on any of our team’s work. We completed it on a Friday evening. And on Saturday morning, everything was up and running. When you, your internal users, and your boss feel zero pain, that’s always a good thing.”

To read the CBX case study in its entirety, please visit: https://datadobi.com/case-studies/.


Datadobi Offers Ultimate Unstructured Data Migration Flexibility with S3-to-S3 Object Migration Support

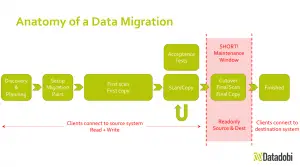

I was lucky enough to sit in on a product demonstration of DobiMigrate last week and was thoroughly impressed by the level of thought and attention to detail that goes into it: every migration is planned to the highest degree in order to minimise the service window, with rollback procedures in place.

Now, with added S3 support, Datadobi offers a truly cross-platform solution for data migration, which is easy to recommend if you want to know your data is safe.

Attached is their press release.

Datadobi Offers Ultimate Unstructured Data Migration Flexibility with S3-to-S3 Object Migration Support

DobiMigrate 5.9 provides the ability to migrate or reorganize file and object storage data to the optimal accessible storage service

LEUVEN, Belgium – June 2, 2020 – Datadobi, the global leader in unstructured data migration software, today announced DobiMigrate 5.9 with added support for S3-to-S3 object migrations. The addition of S3-to-S3 object migrations offers the most flexible and comprehensive software suite for migration of unstructured data. Customers can move data in any direction between on-prem systems of different vendors, cloud storage services, or a mixture of both. All objects and their metadata are synchronized from the source buckets to the target buckets.

Together with existing support for all NAS protocols, DobiMigrate customers can now benefit from the ultimate flexibility to migrate or reorganize their file and object storage data.
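As a rough illustration of what synchronizing objects and their metadata from source buckets to target buckets involves, here is a minimal sketch that compares two bucket listings held in memory. It is not DobiMigrate's implementation; the `ObjectInfo` type, the `plan_sync` function, and the sample keys are all hypothetical, and the `etag` field is simply assumed to change whenever an object's content changes.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectInfo:
    """Hypothetical listing entry for one object in a bucket."""
    etag: str                                     # assumed change marker for the content
    metadata: dict = field(default_factory=dict)  # user-defined metadata


def plan_sync(source: dict, target: dict) -> dict:
    """Compare two bucket listings and report what still needs to move.

    'copy'   -> keys present in the source but missing from the target
    'update' -> keys present in both whose etag or metadata differ
    """
    copy = sorted(k for k in source if k not in target)
    update = sorted(k for k in source if k in target and source[k] != target[k])
    return {"copy": copy, "update": update}


# Illustrative listings only; a real tool would obtain these from the storage APIs.
source = {
    "a.txt": ObjectInfo("e1", {"owner": "team"}),
    "b.txt": ObjectInfo("e2"),
    "c.txt": ObjectInfo("e3"),
}
target = {
    "a.txt": ObjectInfo("e1", {"owner": "old"}),  # same content, stale metadata
    "b.txt": ObjectInfo("e2"),                    # already in sync
}

plan = plan_sync(source, target)
print(plan)
```

In this sketch an object is re-synchronized when either its content marker or its metadata differs, which mirrors the press release's point that metadata travels with the objects.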

“We have always been a company that can manage our customer’s most difficult migration projects and have been involved in a number of different types of object migration projects over the years,” said Carl D’Halluin, Chief Technology Officer, Datadobi. “In this release, we are incorporating everything we have learned about S3 object migrations into our DobiMigrate product, making it available to everyone. We have tested it with the major S3 platforms including AWS to enable our customers’ journey to and from the cloud.”

The ability to move unstructured data whenever needed allows customers to continuously optimize their storage deployments and data layout. This keeps data on the optimal accessible medium needed at any moment, enables users to decommission ageing hardware, and offers the freedom to reconsider which data belongs on premises and which data better lives in cloud services.

With its unrivalled knowledge of cross-vendor storage compatibility, Datadobi has qualified migrations for an ever-expanding list of storage protocols, vendor products, and storage services, including S3 object storage, SMB and NFS NAS storage, and Content Addressed Storage (CAS) and is a partner with most of the major storage vendors.

“The exponential growth in the creation, storage, and use of unstructured data can create serious migration bottlenecks for many organizations,” commented Jed Scaramella, Program Director for IDC Infrastructure Services. “Migration services that leverage automation will mitigate risk, accelerate the process, and provide insurance that data transfers will proceed without interruptions.”

About Datadobi

Datadobi, a global leader in data management and storage software solutions, brings order to unstructured storage environments so that the enterprise can realize the value of their expanding universe of data. Their software allows customers to migrate and protect data while discovering insights and putting them to work for their business. Datadobi takes the pain and risk out of the data storage process, and does it ten times faster than other solutions at the best economic cost point. Founded in 2010, Datadobi is a privately held company headquartered in Leuven, Belgium, with subsidiaries in New York, Melbourne, Dusseldorf, and London.

For more information, visit www.datadobi.com, and follow us on Twitter and LinkedIn.

Repost: George Jon Selects Datadobi to Deliver Data Migration for its Leading eDiscovery Platform

George Jon Selects Datadobi to Deliver Data Migration for its Leading eDiscovery Platform

Datadobi Outperformed Alternative Solutions for Performance, Simplicity, and Reporting Capabilities

LEUVEN, Belgium–(BUSINESS WIRE)–Datadobi, the global leader in unstructured data migration software, has been selected by eDiscovery platform, process, and product specialist George Jon to power its data migration services for clients across the corporate, legal, and managed services sectors. George Jon builds and launches best-in-class, secure eDiscovery environments, from procurement through buildout, and requires a data migration solution that provides clients with fast and reliable file transfer capabilities. The company has managed projects for all of the ‘Big Four’ accounting firms and members of the Am Law 100, among many others.

Scale Computing Delivers Edge Computing to Goodwill Central Coast

Local nonprofit eliminated complexity, lowered costs and freed up management time across 20 stores with Scale Computing HC3

INDIANAPOLIS (PRWEB) APRIL 14, 2020

Scale Computing, the market leader in edge computing, virtualization and hyperconverged solutions, today announced Goodwill Central Coast, a regional chapter of the wider Goodwill nonprofit retail organization, has implemented Scale Computing’s HC3 Edge platform for its in-store IT infrastructure across its 20 locations. Scale Computing HC3 has provided Goodwill Central Coast with a simple, affordable, self-healing and easy-to-use hyperconverged virtual solution capable of remote management.

Spanning three counties in southern California, Goodwill Central Coast serves Monterey, Santa Cruz and San Luis Obispo. Goodwill Central Coast’s mission is to provide employment for individuals who face barriers to employment, such as homelessness, military service, single parenting, incarceration, addiction and job displacement. To fund its mission, Goodwill Central Coast is also a retail organization, which provides job training and funds programs to assist individuals in reclaiming their financial and personal independence.

As a nonprofit with a small IT staff of three people and 20 locations to service, Goodwill Central Coast was in need of an affordable virtual hyperconverged infrastructure solution capable of managing the region’s file systems and databases out of a central database in Salinas, CA. Since implementation of Scale Computing, Goodwill Central Coast has upgraded its point of sale system, increased drive space, saved resources, and saved 15% on the initial IT budget.

“The cumbersome nature of managing our previous technology led me to look into other solutions, and I’m so glad I found Scale Computing,” said Kevin Waddy, IT Director, Goodwill Central Coast. “Migration from our previous system was so quick and easy! I migrated 24 file servers to the new Scale Computing solution during the day with little to no downtime, spending 20 minutes on some servers. The training and support during the migration was comprehensive and I’ve had zero issues with the system in the six months I’ve had it in place. Scale Computing HC3 is a single pane of glass that’s easy to manage, and it has saved us so much time on projects.”

Before turning to Scale Computing, Goodwill Central Coast was using an outdated legacy virtualization system that required physical reboots every other month. The nonprofit was looking to save management time by replacing their IT infrastructure with a virtual server system capable of simply and efficiently managing remote servers. Waddy was also interested in looking for a hyperconverged system for its ability to migrate from physical servers to virtual, ultimately turning to Scale Computing for its affordability and ability to maximize uptime.

“When an organization is providing a service to its community, the last thing they should worry about is stretching their resources,” said Jeff Ready, CEO and Co-Founder, Scale Computing. “For a nonprofit like Goodwill Central Coast, saving time and money is critical to the work they do. With Scale Computing HC3, Goodwill Central Coast now has a simple, reliable and affordable edge computing solution that is easy to manage with a fully integrated, highly available virtualization appliance architecture that can be managed locally and remotely.”

With Scale Computing HC3, virtualization, edge computing, servers, storage and backup/disaster recovery have been brought into a single, easy-to-use platform. All of the components are built in, including the hypervisor, without the need for any third-party components or licensing. HC3 includes rapid deployment, automated management capabilities, and a single-pane-of-management, helping to streamline and simplify daily tasks, saving time and money.

For more information on Scale Computing HC3, visit: https://www.scalecomputing.com/hc3-virtualization-platform

About Scale Computing

Scale Computing is a leader in edge computing, virtualization, and hyperconverged solutions. Scale Computing HC3 software eliminates the need for traditional virtualization software, disaster recovery software, servers, and shared storage, replacing these with a fully integrated, highly available system for running applications. Using patented HyperCore™ technology, the HC3 self-healing platform automatically identifies, mitigates, and corrects infrastructure problems in real-time, enabling applications to achieve maximum uptime. When ease-of-use, high availability, and TCO matter, Scale Computing HC3 is the ideal infrastructure platform. Read what our customers have to say on Gartner Peer Insights, Spiceworks, TechValidate, and TrustRadius.

Scale Computing Offers Acronis Cloud Storage, Expands Storage Options for Enhanced Data Backup, Disaster Recovery, and Ransomware Mitigation


By offering Acronis Cloud Storage, Scale Computing customers can now easily and securely store their Acronis backups locally or in the cloud

INDIANAPOLIS, March 24, 2020 – Scale Computing, a market leader in edge computing, virtualization and hyperconverged solutions, today announced it is offering Acronis Cloud Storage, adding a further dimension to the existing OEM partnership with the global leader in cyber protection. The easy-to-use cloud subscription from Acronis gives Scale Computing HC3 customers a powerful, highly available, hybrid redundant backup storage solution delivering disaster recovery protection and cyberthreat mitigation.

By offering Acronis Cloud Storage, Scale Computing provides customers with a safe, secure and easy place to back up data. By integrating the Scale Computing HC3 platform with Acronis Cyber Backup, users can benefit from a wide range of advanced backup and protection features, including granular object-level recovery, variable-length deduplication for backups, and active ransomware protection.

The new end-to-end storage and cloud backup solution can protect entire virtual machines (VMs), providing bare-metal restore capabilities, and can restore to dissimilar hardware or platforms if required. Available to purchase in one- or three-year terms, Scale Computing will be offering Acronis Cloud Storage in increments ranging from 250GB to 5TB.

Acronis backups are stored on reliable Acronis Cyber Infrastructure (a secure software-defined compute and storage solution) with outstanding redundancy and error correction. The data center infrastructure is designed around high availability and redundancy to minimize associated risks and eliminate single points of failure. In addition, physical security is ensured through several protective measures such as security barriers, video surveillance, and highly monitored access. Data can also be encrypted at source with government-approved AES-256 strong encryption before sending it to a secure data center.

“We are seeing considerable demand from today’s businesses for data protection, backup and disaster recovery. Integrating the Scale Computing HC3 platform with Acronis will help customers meet these priorities in a simple and cost-effective way,” explained Jeff Ready, CEO and co-founder, Scale Computing. “Scale Computing customers can now easily and securely store their Acronis backups locally or in the cloud, with a scalable solution that is designed for agile businesses.”

“The ever-rising threat of ransomware and other cyberthreats along with changing IT requirements have organizations facing ongoing challenges associated with protecting their infrastructure. Due to this, more organizations are demanding cyber protection solutions that are easy, efficient and secure for backing up their data,” said Patrick Hurley, Vice President and General Manager, Americas at Acronis. “With Acronis Cloud Storage on Scale Computing HC3, organizations gain a high availability data protection cloud solution that also ensures safe, secure and scalable offsite backup for any data or any system — anytime, anywhere.”

Availability:

Acronis Cloud from Scale Computing is available now, worldwide. For more information about Acronis Cloud Storage on Scale Computing HC3, visit: https://www.scalecomputing.com/acronis

About Acronis

Acronis sets the standard for cyber protection through its innovative backup, anti-ransomware, disaster recovery, storage, and enterprise file sync and share solutions. Enhanced by its award-winning AI-based active protection technology, blockchain-based data authentication and unique hybrid-cloud architecture, Acronis protects all data in any environment – including physical, virtual, cloud, mobile workloads and applications – all at a low and predictable cost.

Founded in Singapore in 2003 and incorporated in Switzerland in 2008, Acronis now has more than 1,500 employees in 33 locations in 18 countries. Its solutions are trusted by more than 5.5 million consumers and 500,000 businesses, including 100% of the Fortune 1000 companies. Acronis’ products are available through 50,000 partners and service providers in over 150 countries in more than 30 languages.

Reposting a blog post from the Backblaze CEO.

Blog Post Title: An Announcement With 18 Zeros

Author: Gleb Budman, CEO and Co-Founder

Since the beginning of Backblaze—back in 2007, when five of us were working out of Brian Wilson’s apartment in Palo Alto—watching the business grow has always been profoundly exciting for me. And I’m not talking about financial growth (that’s nice too, of course).

Our team has grown. From five way back in the Palo Alto days, to 145. Our customer base has grown. Today, we have customers in over 160 countries… it doesn’t seem that long ago when I was excited about having our 160th customer.

More than anything else, the data we manage for our customers has grown.

In 2008, not long after our launch, we had 750 customers and thought ten terabytes was a lot of data. But things progressed quickly, and just two years later we reached 10 petabytes of customer data stored (1000x more). (Good thing we designed for zettabyte-scale cloud architecture!)

By 2014, we were storing 100 petabytes – the equivalent of 11,415 years of HD video.

Years passed, our team grew, the number of customers grew, and—especially after we launched B2 Cloud Storage in 2015—the data grew. At some scale it got harder to contextualize what hundreds and hundreds of petabytes really meant. I like to remember that each byte is part of some individual’s beloved family photos or some organization’s critical data that they’ve entrusted us to protect.

This is a belief that we’ve included in every Backblaze team member’s job description, to remind us of why we do what we do:

“Our customers use our services so they can pursue dreams like curing cancer (genome mapping is data-intensive), archive the work of some of the greatest artists on the planet (learn more about how Austin City Limits uses B2), or simply sleep well at night (anyone that’s spilled a cup of coffee on a laptop knows the relief that comes with complete, secure backups).”

It’s critically important for us that we achieved this growth by staying the same in the most important ways: being open & transparent, building a sustainable business, and caring about being good to our customers, partners, community, and team. Which is why I’m excited to announce a huge milestone today. Our biggest growth number yet.

We’ve reached 1.

Or, by another measurement, we’ve reached 1,000,000,000,000,000,000.

Yes, today, we’re announcing that we are storing 1 exabyte of customer data.

What does it all mean? Well. If you ask our engineers, not much. They’ve already rocketed past this number mentally and are considering how long it will take to get to a zettabyte (1,000,000,000,000,000,000,000 bytes).
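For readers who want to check the zeros, here is a small sketch of the unit arithmetic behind the numbers in this post. It assumes decimal (SI) units, where each step up is a factor of 1,000; the constant names and the `zeros` helper are illustrative only.

```python
# Decimal (SI) storage units, expressed in bytes.
TERABYTE = 10**12
PETABYTE = 10**15
EXABYTE = 10**18
ZETTABYTE = 10**21


def zeros(power_of_ten: int) -> int:
    """Count the zeros that follow the leading 1 in a power of ten."""
    return len(str(power_of_ten)) - 1


# One exabyte written out in full, and its number of zeros.
print(f"{EXABYTE:,}")
print(zeros(EXABYTE))

# Growth from ten terabytes to ten petabytes.
print((10 * PETABYTE) // (10 * TERABYTE))

# How many exabytes fit in a zettabyte.
print(ZETTABYTE // EXABYTE)
```

The same helper confirms that a zettabyte carries 21 zeros, three more than an exabyte.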

But, while it’s great to keep our eyes on the future, it’s also important to celebrate what milestones mean. Yes, crossing an exabyte of data is another validation of our technology and our sustainably independent business model. But I think it really means that we’re providing value and earning the trust of our customers.

Thank you for putting your trust in us by keeping some of your bytes with us. We have a couple more announcements in the next few weeks that I’m looking forward to sharing with you. But for today, here’s to our biggest 1 yet. And many more to come.

Introducing vSphere 7: Essential Services for the Modern Hybrid Cloud :repost

Exciting news from VMware this week.

https://blogs.vmware.com/vsphere/2020/03/vsphere-7.html

“Our customers who are most successful in meeting the changing needs of their customers simultaneously work on two initiatives: modernize their approach to applications, and modernize the infrastructure that those applications run on, to meet the needs of their developers and IT teams.

As part of these initiatives, customers want to gain the benefits of a cloud operating model, which means having rapid, self-service access to infrastructure, simple lifecycle management, security, performance, and scalability.

This is exactly what vSphere now provides. vSphere 7 is the biggest release of vSphere in over a decade and delivers these innovations and the rearchitecting of vSphere with native Kubernetes that we introduced at VMworld 2019 as Project Pacific.

The headline news is that vSphere now has native support for Kubernetes, so you can run containers and virtual machines on the same platform, with a simple upgrade of the system that you’ve currently standardized on and adopting VMware Cloud Foundation. In addition, this release is chock-full of new capabilities focused on significantly improving developer and operator productivity, regardless of whether you are running containers.”

New BEAST Elite Models Bring Major Performance & Connectivity Improvements for BEAST Platform

StorCentric’s Nexsan Announces Major Performance & Connectivity Improvements for BEAST Platform with New BEAST Elite Models

BEAST Elite & Elite F with QLC Flash Deliver Industry-Leading BEAST Reliability with Enhanced Performance

Nexsan, a StorCentric company and a global leader in unified storage solutions, has announced significant enhancements for its high-density BEAST storage platform, including major improvements in performance and connectivity. The new BEAST Elite increases throughput and IOPS by 25% while maintaining the architecture’s price/performance leadership. Connectivity has been tripled, providing 12 high-speed Fibre Channel (FC) or iSCSI host ports, reducing the need for network switches.

BEAST Elite: Industry-Leading Reliability, Enhanced Performance and Scale

The BEAST Elite has been architected for the most diverse and demanding storage environments, including media and entertainment, surveillance, government, health care, financial and backup. It offers ultra-reliable, high-performance storage that seamlessly fits into existing storage environments. In addition to a 25% increase in IOPS and throughput, BEAST Elite provides an all-in enterprise storage software feature set with encryption, snapshots, robust connectivity with FC and iSCSI, and support for asynchronous replication.

Designed for easy setup and streamlined management processes for administrators, BEAST Elite is highly reliable and was built to withstand demanding storage environments, including ships, subway stations and storage closets that have less than ideal temperatures and vibration. The platform now supports 14TB and 16TB HDDs with density of up to 2.88PB in a 12U system.

BEAST Elite F: QLC Flash Delivers Unrivalled Price/Performance Metrics

The BEAST Elite F storage solution supports QLC NAND technology designed to accelerate access to extremely large datasets at unrivalled price/performance metrics for high-capacity, performance-sensitive workloads. It is ideally suited to environments where performance is critical, but the cost of SSDs has historically been too high.

The BEAST Elite and Elite F both support the new E-Flex Architecture, which allows users to select up to two of any of the three expansion systems available. This gives organizations the flexibility to size the systems exactly as required within their rack space requirements.

“The new BEAST Elite storage platform with QLC flash provides a significant performance improvement over HDDs with industry-leading price/performance. This storage platform is ideal for customers who want extremely dense, highly resilient block storage data lakes, analytics and ML/AI workloads,” said Surya Varanasi, CTO of StorCentric, parent company of Nexsan.

“With a reputation for reliability stretching back over two decades, our BEAST platform has become a trusted solution for a huge range of organizations and use cases,” commented Mihir Shah, CEO of StorCentric, parent company of Nexsan. “BEAST Elite and Elite F retain those levels of superior-build quality and robust design while adding significantly to performance, scale and connectivity capabilities. We will continue to enhance and introduce new products as a testament to our unrelenting commitment to customer centricity.”

For further information, please visit https://www.nexsan.com/beast-elite/.

Lemongrass Secures $10 Million Series C Financing Round Led by Blue Lagoon Capital

Global SAP® on AWS® services firm announces infusion of experienced board members and leadership team to bring renewed commitment to strategic vision and accelerate sales, marketing and execution of aggressive product roadmap.

ATLANTA, GA — Lemongrass Consulting, the leading professional and managed service provider of SAP enterprise applications running on AWS hyperscale cloud infrastructure, today announced it has completed a $10 million Series C round of financing. Blue Lagoon Capital led the investment round with participation from existing investor Columbia Capital. The investment brings Lemongrass’s total funding since launching its services to $29.3 million and will enable the company to build out its senior leadership team, broaden and accelerate product development, and aggressively expand its sales and marketing efforts.

Lemongrass, founded in 2008 by Eamonn O’Neill and Walter Beek, is recognized as the leading professional and managed service provider focused solely on the complex task of running SAP on AWS. The company provides end to end support for the entire client lifecycle spanning discovery, landing zone build out, workload migration and implementation at scale, and ongoing operational services. Additionally, the company has developed a cloud management platform, MiCloud, widely recognized as the most sophisticated set of purpose-built tools to facilitate the migration of SAP to the AWS cloud, and to support the on-going operation of the resulting SAP on AWS environments. Automation includes a full suite of migration, build and steady-state run services, self-service deployments and self-healing capabilities.

Lemongrass works with leading enterprises, across multiple verticals in the Americas, EMEA and APAC. The company has been working with AWS since 2010, is a Premier APN Consulting Partner, was the second company globally to achieve the SAP on AWS capability and was just recently awarded the coveted AWS Migration Competency award, in addition to the AWS Managed Services Competency award, in recognition of the thousands of SAP systems that they have migrated with a 100% success rate.

“Lemongrass is an exceptional company. The magnitude of their vision and the quality of their solutions positions them well to dominate the SAP on AWS growth market,” said Rodney Rogers, Co-founder and General Partner, Blue Lagoon Capital. “We could not be more delighted about this investment.”

In addition to the investment, Rogers will join Lemongrass as Chairman of the Board. Rogers is an expert technologist with more than 30 years of success in the technology services industry, recognized most recently for his leadership as Virtustream’s co-founder and CEO, a technology startup that achieved a billion-dollar+ valuation through its acquisition by EMC in 2015. Also, new to the Board is Kevin Reid, co-founder and CTO of Virtustream and co-founder and General Partner at Blue Lagoon Capital. Kevin is a technology entrepreneur with over 30 years of experience creating software-enabled solutions that deliver efficiency, enable innovation and drive superior financial results for enterprises worldwide.

The company also announced new appointments to round out the global management team and bring aboard the additional experience needed to support Lemongrass’s next era of growth and maturity.

Mike Rosenbloom joins the company as the Group CEO. Mike has over 25 years in IT Leadership and comes to Lemongrass from Accenture where he was Managing Director for the company’s Intelligent Cloud & Infrastructure business.

Walter Beek, previous Group CEO of Lemongrass, will continue with the company in the role of Co-founder & Chief Innovation Officer and will focus on his passion for driving the innovation agenda for the company’s services and the MiCloud platform.

“These are exciting times for Lemongrass and the momentum we have in our business is evident,” said Beek. “Our business has ambitious goals to pursue and achieving them will require immense skill, rapid growth and scale, and collaborative leadership. The addition of Rodney, Kevin, Mike and the rest of the new executives to our current accomplished team marks an important milestone in Lemongrass’s evolution. I’m confident we will grow faster, innovate as never before, and truly differentiate ourselves in this market.”

Other new leaders joining Lemongrass’s executive leadership team at this time are:

Mike Provenzano, Chief Financial Officer

- Mike has over 25 years of high-tech and software executive experience providing strategic, financial and operational leadership to both public and private companies at various stages of their development. Prior to Lemongrass, Mike was CFO of Virtustream, a cloud services and software provider.

Lisa Desmond, Chief Marketing Officer

- Lisa brings 20+ years of experience in global IT marketing creating and operationalizing marketing visions for entrepreneurial tech businesses. Lisa was most recently CMO for Beep, an autonomous mobility solutions company, and before that, she was the CMO of Virtustream.

“The most exciting days for Lemongrass are ahead. This is a remarkable team and I am honored to be a part of this journey,” said Mike Rosenbloom, Lemongrass’s new CEO. “This latest funding and the strategic addition of these key leaders will drive exponential growth, enable strategic investments ‘ahead of the curve’, increase operational discipline, and inspire our team to be the absolute best as we execute on our commitments to our customers, partners, and investors.”

About Lemongrass

Lemongrass Consulting, headquartered in Reading with operations in all global geographies, was established in 2008 as a specialist SAP Technology consultancy. Lemongrass specializes in the implementation, migration, operation, innovation and automation of SAP on AWS, covering both the SAP Business Suite and Business One applications. The company is an AWS Premier partner, an Advanced APN Consulting Partner, an AWS accredited Managed Service Provider and was the second company globally to achieve the SAP on AWS capability.

To learn more about Lemongrass, visit https://lemongrassconsulting.com/

Nexsan announces Assureon Archive storage system version 8.3

Thousand Oaks, CA – Jan. 15, 2020 – Nexsan announced the newest release of its Assureon Archive storage system. Version 8.3 includes Private Blockchain and end-to-end RDMA (Remote Direct Memory Access) over Converged Ethernet (RoCE), both of which add a whole new level of data security and protection.

Assureon is ideal for organizations that need to implement regulatory and corporate compliance, the long-term archiving of unstructured data, or storage optimization.

The Private Blockchain feature means that customers can now protect their data by:

Storing it in an immutable data structure

Utilizing cryptography to secure transactions

Relying on an automated integrity audit at the redundant sites to maintain data integrity and transparency.

Full press release:

Nexsan Adds RoCE and Private Blockchain Technology to Award Winning Assureon® Solution

Nexsan Assureon 8.3 includes Private Blockchain to protect and secure digital assets and RDMA over Converged Ethernet (RoCE) to enable over a 2x performance improvement for data retrieval

Thousand Oaks, CA – Jan. 15, 2020 – Nexsan, a global leader in unified storage solutions and part of the StorCentric family, today announced the newest release of its Nexsan Assureon active data vault storage solution. Version 8.3 includes Private Blockchain and end-to-end RDMA (Remote Direct Memory Access) over Converged Ethernet (RoCE). The latest version is also ideal for organizations that need to implement regulatory and corporate compliance, the long-term archiving of unstructured data, or storage optimization.

Assureon Private Blockchain enables organizations to protect and secure digital assets by storing data in an immutable data structure, utilizing cryptography to secure transactions, and relying on an automated integrity audit at the redundant sites to maintain data integrity and transparency. Combined with Assureon’s unique file fingerprinting and asset serialization process, metadata authentication, and a robust consensus algorithm, Assureon Private Blockchain allows secure archiving of digital assets for long-term data protection, retention and compliance adherence.
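As a rough illustration of the general idea described above (file fingerprinting, serialized records linked in an append-only structure, and an automated integrity audit), the following Python sketch builds a simple hash chain. It is not Nexsan's implementation; the function names `fingerprint`, `append_record` and `audit`, the record fields, and the choice of SHA-256 are all assumptions made for this example.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder for the first record's predecessor


def fingerprint(data: bytes) -> str:
    """Return a SHA-256 fingerprint of a file's contents."""
    return hashlib.sha256(data).hexdigest()


def _hash_body(body: dict) -> str:
    """Hash a record body in a stable (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(chain: list, name: str, data: bytes) -> dict:
    """Append a serialized record that is linked to the previous record's hash."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS_HASH
    body = {
        "serial": len(chain),            # hypothetical serial number for the asset
        "name": name,
        "fingerprint": fingerprint(data),
        "prev_hash": prev_hash,
    }
    record = {**body, "record_hash": _hash_body(body)}
    chain.append(record)
    return record


def audit(chain: list) -> bool:
    """Recompute every hash and link; return False if anything was altered."""
    prev_hash = GENESIS_HASH
    for index, record in enumerate(chain):
        body = {k: record[k] for k in ("serial", "name", "fingerprint", "prev_hash")}
        if (
            record["serial"] != index
            or record["prev_hash"] != prev_hash
            or record["record_hash"] != _hash_body(body)
        ):
            return False
        prev_hash = record["record_hash"]
    return True
```

In this sketch, changing any stored fingerprint or reordering records causes `audit` to return `False`, because each record's hash depends on its own contents and on the hash of the record before it. A production system would also need replication across sites and a consensus step, which this example leaves out.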

Nexsan has adopted high-performance, low-latency RoCE (RDMA over Converged Ethernet) to eliminate the performance-robbing layers of the network stack. With RoCE, Nexsan ensures that all customers have ultra-low latency and are regulatory compliant, and that the solution is ready for ever-increasing data demands.

“Nexsan has a strong track record of creating products and services that capitalize on the advances in data archiving, security, protection and retrieval,” commented Surya Varanasi, CTO of StorCentric, Parent Company of Nexsan. “With the release of Assureon 8.3, we have implemented RoCE to provide over a 2x performance improvement and private blockchain technology for a secure, immutable data structure. Users are now able to quickly and efficiently retrieve data with a 40G RDMA Converged Ethernet connection between the Assureon server and Assureon Edge servers, and thereby accelerate access to archived data storage securely.”

Assureon 8.3 offers a number of benefits to Nexsan customers, including:

- Virtual shortcuts that require zero disk space and reside purely in memory as reference points to physical files in the Assureon archive.

- A 40Gb/s Ethernet connection that provides blazing fast data retrieval from the Assureon server.

- Data is retrieved directly from user-space with minimal involvement of the operating system and CPU.

- In addition, zero-copy applications can retrieve data from the Assureon archive without involving the network stack.

- Security, traceability, immutability, and visibility of data with Assureon Private Blockchain technology.

About Nexsan

Nexsan® is a global enterprise storage leader, enabling customers to securely store, protect and manage critical business data. Established in 1999, Nexsan has built a strong reputation for delivering highly reliable and cost-effective storage while remaining agile to deliver purpose-built storage. Its unique and patented technology addresses evolving, complex enterprise requirements with a comprehensive portfolio of unified storage, block storage, and secure archiving. Nexsan is transforming the storage industry by turning data into a business advantage with unmatched security and compliance standards. Its solutions are ideal for a variety of use cases including Government, Healthcare, Education, Life Sciences, Media & Entertainment, and Call Centers. Nexsan is part of the StorCentric family of brands. For further information, please visit: www.nexsan.com

About StorCentric

StorCentric provides world-class and award-winning storage solutions. Between its Drobo, Nexsan, Retrospect and Vexata divisions, the company has shipped over 1M storage solutions and has won over 100 awards for technology innovation and service excellence. StorCentric innovation is centered around customers and their specific data requirements, and delivers quality solutions with unprecedented flexibility, data protection, performance and expandability. For further information, please visit: www.storcentric.com