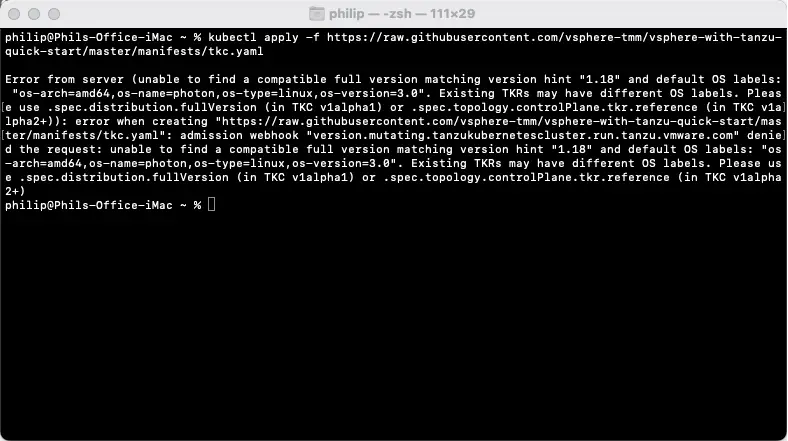

Error deploying VMware’s demo Tanzu Kubernetes Cluster on vCenter 8

written by Philip Sellers

Philip Sellers

Phil is a Solutions Architect at XenTegra, based in Charlotte, NC, with over 20 years of industry experience. He loves solving complex technology problems by breaking down the impossible into tangible achievements, and by thinking two or three steps ahead of where we are today. He has spent most of his career as an infrastructure technologist in hands-on roles, even while serving as a leader. His perspective is one of servant leadership, working with his team to build something greater collectively. Having been lucky to have many opportunities and doorways opened during his career, Phil has a diverse background as a programmer, a writer, and an analyst, all while staying grounded in infrastructure technology.