Cloud-native. Containers. Scale and velocity. There is still a lot of confusion, and many questions, about which cloud-native technologies should be adopted and how. I recently attended Cloud Field Day 16 in Santa Clara, where I heard from solo.io, Forward Networks and Fortinet about their cloud-native solutions. Traveling to and from the event gave me some time to think about cloud-native. Many know that I have been advocating the move to cloud-native for many years. Conceptually, I have always felt it makes a lot of sense. I’m a huge fan of automation. At my prior employer, my mandate to my operations team was to automate everything that could be automated.

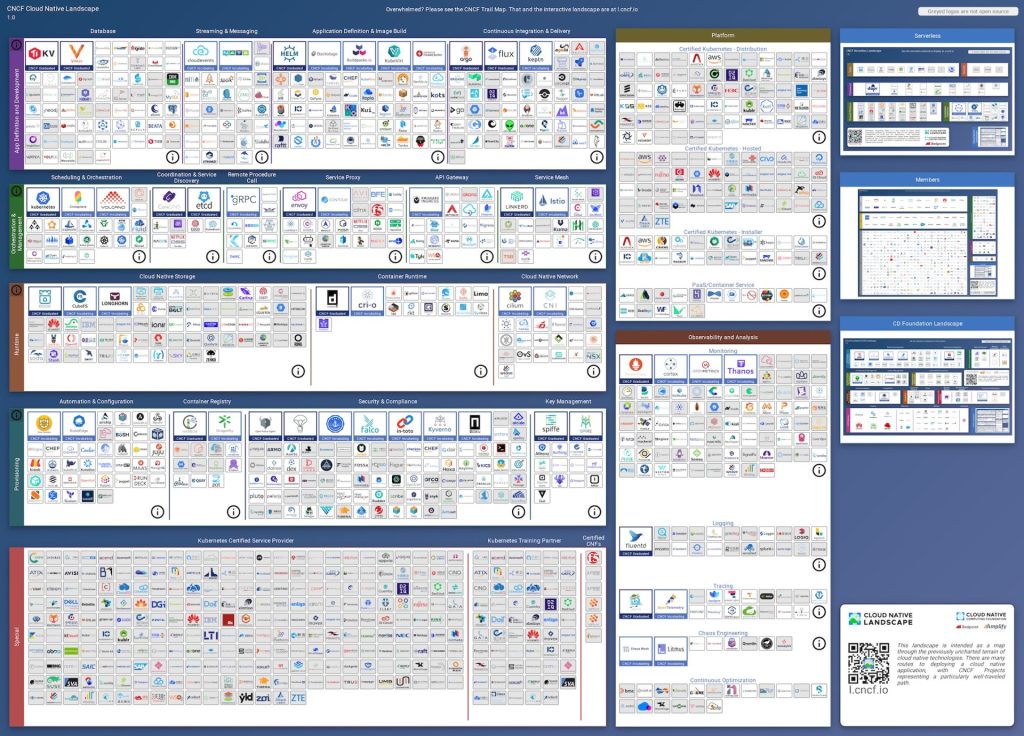

But cloud native is not without complexity, and it demands a large amount of training and retooling. The Cloud Native Computing Foundation has a slide showing all of the solutions in the cloud native landscape. Frankly, it’s daunting for any newcomer to the cloud-native space. There are so many projects and competing technologies in the landscape.

What are we chasing?

So, what do I think of as the Nirvana state? What is the ultimate goal of cloud native?

Automated continuous innovation: continuous development pipelines that deploy your applications without interrupting users, enabling true 24 x 7 x 365 operation of your application while preserving the ability to perform necessary maintenance and upgrades on the underlying infrastructure.

What makes this Nirvana? (aka What are the benefits?)

- Your workloads are consistently deployed every time in a known and tested configuration

- Manual deployment steps are eliminated and replaced by highly automated and repeatable workflows that enable scalability

- Desired state is defined and enforced by the infrastructure automation software

- Intelligent network services gracefully move users and traffic between running instances of the software

- Security and instrumentation are defined and enforced as part of the automated workflows

Who’s who in the Zoo? Containers vs. Kubernetes vs. Istio

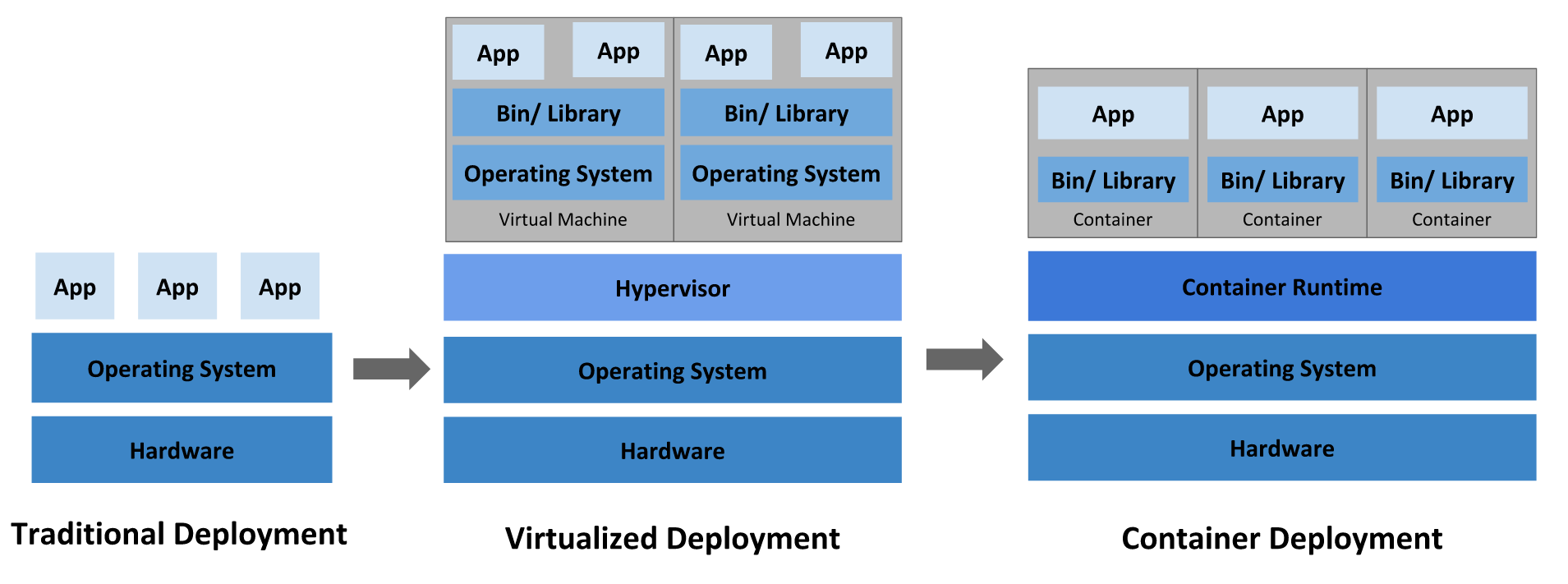

Containers solve the problem of consistently deploying code into dev, test and production by packing the code into an immutable format that includes only the required elements for the application to work, eliminating the bloat of a full operating system instance. Independently available layers of software are combined to create a container image and that image does not change.
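To make the idea of immutable, layered images a little more concrete, here is a minimal Python sketch. It is not how any real container tooling is implemented; the manifest format and the `build_image` helper are my own simplifications for illustration. The point it tries to show is that layers are identified by a hash of their content, so two versions of an app can share unchanged layers, and changing any layer produces a new image rather than modifying the old one.

```python
import hashlib
import json


def layer_digest(content: bytes) -> str:
    """Content-address some bytes: the same bytes always give the same digest."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


def build_image(layers: list[bytes]) -> dict:
    """Combine layers, in order, into a simplified (hypothetical) image record."""
    digests = [layer_digest(layer) for layer in layers]
    manifest = {"layers": digests}
    # The image ID is derived from the manifest, so changing any layer
    # yields a different image instead of altering an existing one.
    image_id = layer_digest(json.dumps(manifest, sort_keys=True).encode())
    return {"id": image_id, "layers": digests}


# Placeholder layer contents, standing in for real filesystem layers.
base = b"minimal runtime libraries"
deps = b"application dependencies"
app_v1 = b"application code v1"
app_v2 = b"application code v2"

image_v1 = build_image([base, deps, app_v1])
image_v2 = build_image([base, deps, app_v2])

# The two versions share the unchanged base and dependency layers.
shared_layers = set(image_v1["layers"]) & set(image_v2["layers"])
print("shared layers:", len(shared_layers))
print("same image id:", image_v1["id"] == image_v2["id"])
```

Rebuilding from the same layers gives the same ID every time, which is the property that lets dev, test and production all run exactly the same artifact.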

Kubernetes solves the problem of orchestrating your containers. It exists and scales within a datacenter. Kubernetes monitors the desired scale and state of the containers and their workloads, ensuring high availability and accessibility within the cluster. Kubernetes acts as a policy engine and relies on declarative definition files that say how the application or service should run. It pulls container images from registries at runtime and injects configuration and policy into the running containers.
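The "declare the desired state and let the platform enforce it" idea can be sketched as a small reconciliation loop. This is a toy model in Python, not Kubernetes code: the `Deployment` and `Cluster` classes, the `reconcile` function and the `registry.example.com` image name are all hypothetical names I made up for the illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Desired state: which image to run and how many copies of it."""
    name: str
    image: str
    replicas: int


@dataclass
class Cluster:
    """Observed state: the images currently running for each deployment."""
    running: dict[str, list[str]] = field(default_factory=dict)


def reconcile(desired: Deployment, cluster: Cluster) -> list[str]:
    """Compare desired and observed state and take actions to close the gap."""
    actions = []
    pods = cluster.running.setdefault(desired.name, [])
    # Replace any pod that is running the wrong image.
    for i, image in enumerate(pods):
        if image != desired.image:
            pods[i] = desired.image
            actions.append(f"replace pod {i} with {desired.image}")
    # Scale up if there are too few pods.
    while len(pods) < desired.replicas:
        pods.append(desired.image)
        actions.append(f"start pod with {desired.image}")
    # Scale down if there are too many.
    while len(pods) > desired.replicas:
        pods.pop()
        actions.append("stop pod")
    return actions


desired = Deployment(name="web", image="registry.example.com/web:1.0", replicas=3)
cluster = Cluster()

first_pass = reconcile(desired, cluster)    # nothing running yet: start three pods
cluster.running["web"].pop()                # simulate one pod crashing
second_pass = reconcile(desired, cluster)   # the loop notices and starts a replacement
third_pass = reconcile(desired, cluster)    # state already matches: nothing to do
print(first_pass, second_pass, third_pass, sep="\n")
```

The useful property is that the operator never says "start a pod"; they only say "there should be three", and the loop works out what to do each time it runs.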

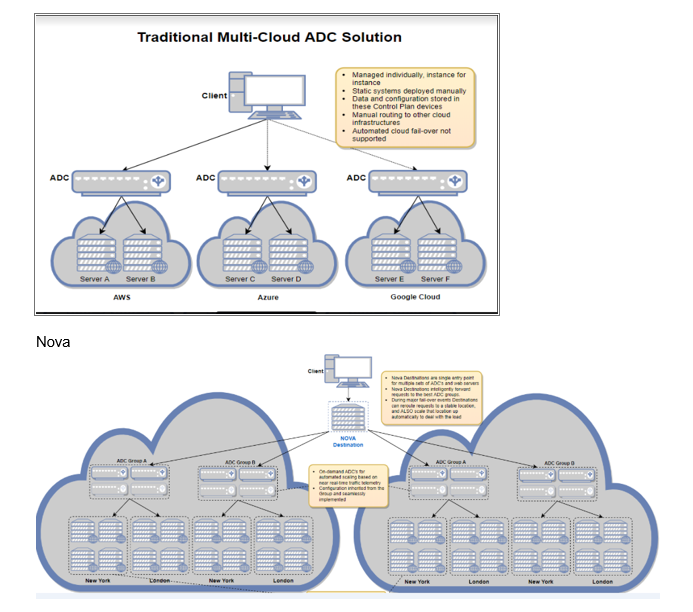

Istio exists to orchestrate and solve problems at a higher level than Kubernetes, with strong integrations into the container orchestration platform. While Kubernetes focuses on orchestrating the container runtime, Istio focuses on offloading certain desired or required services – such as encryption of traffic, load balancing and failover – potentially across many pods or even many Kubernetes clusters.

Istio solves:

- Encryption of traffic between services and applications, often the first reason companies adopt Istio

- Visibility within the application, container and Kubernetes cluster

- Global load balancing and network policies that dictate failover and resiliency, in addition to locality
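The locality and failover point in the list above can be illustrated with a short Python sketch. To be clear, this is not Istio's API; in Istio this behavior is configured declaratively rather than coded by hand. The `Endpoint` class, the `pick_endpoints` function, the region names and the addresses are assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    """One instance of a service, with the region it runs in."""
    address: str
    region: str
    healthy: bool = True


def pick_endpoints(endpoints, client_region, failover_order):
    """Prefer healthy endpoints in the client's region, then fail over in order."""
    healthy = [e for e in endpoints if e.healthy]
    for region in [client_region, *failover_order]:
        candidates = [e for e in healthy if e.region == region]
        if candidates:
            return candidates
    # Last resort: any healthy endpoint at all (may be an empty list).
    return healthy


endpoints = [
    Endpoint("10.0.0.1", "us-west"),
    Endpoint("10.0.0.2", "us-west"),
    Endpoint("10.1.0.1", "us-east"),
]

# Normal operation: traffic stays local.
local = pick_endpoints(endpoints, "us-west", ["us-east"])

# Simulate an outage of every us-west instance.
for e in endpoints:
    if e.region == "us-west":
        e.healthy = False

# Traffic now fails over to the next region in the policy.
failed_over = pick_endpoints(endpoints, "us-west", ["us-east"])
print([e.address for e in local], [e.address for e in failed_over])
```

The application itself never sees any of this; the appeal of a service mesh is that this routing decision is made in the network layer on the app's behalf.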

First 3 Steps

I believe that these three components represent the first three steps on a journey to cloud native. There are many packaged workloads sitting ready to run in container registries that can be consumed and deployed onto a Kubernetes cluster.

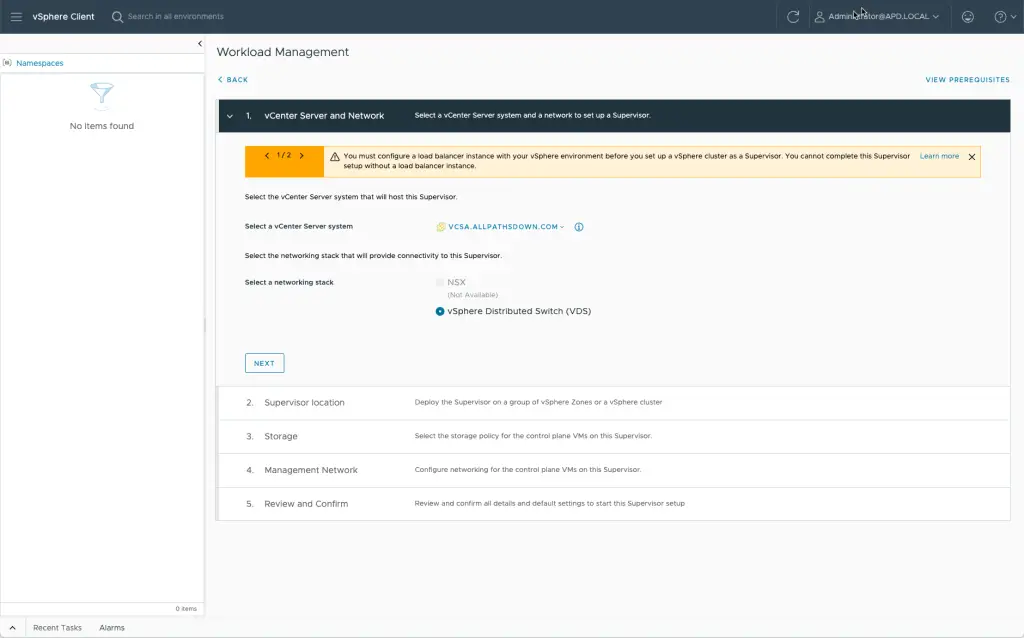

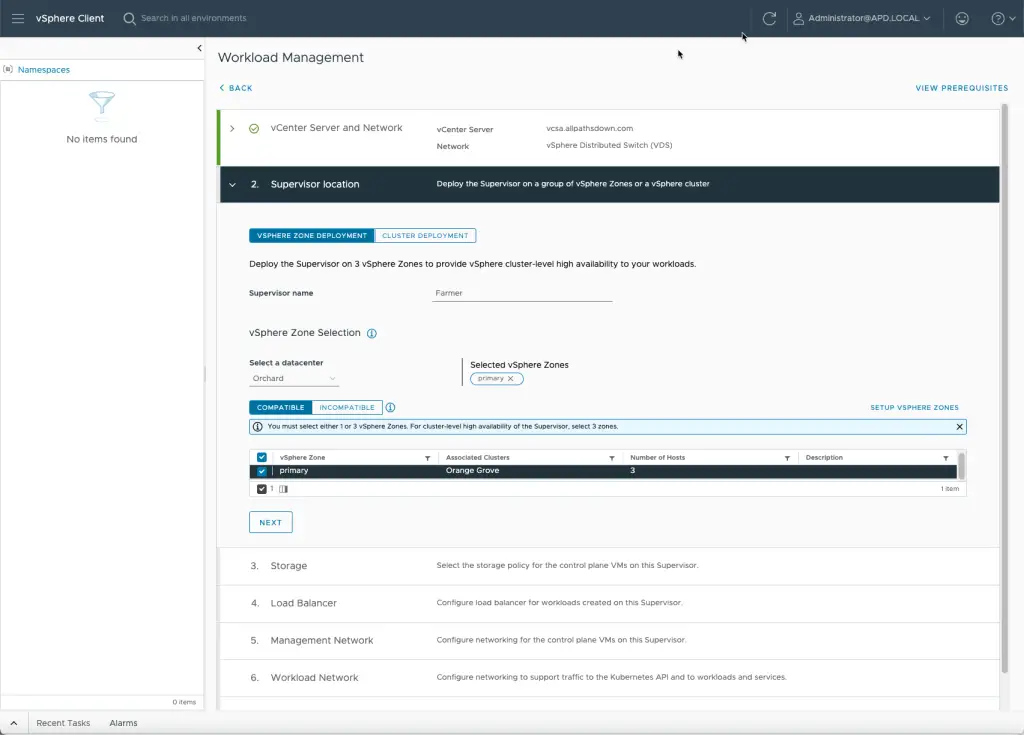

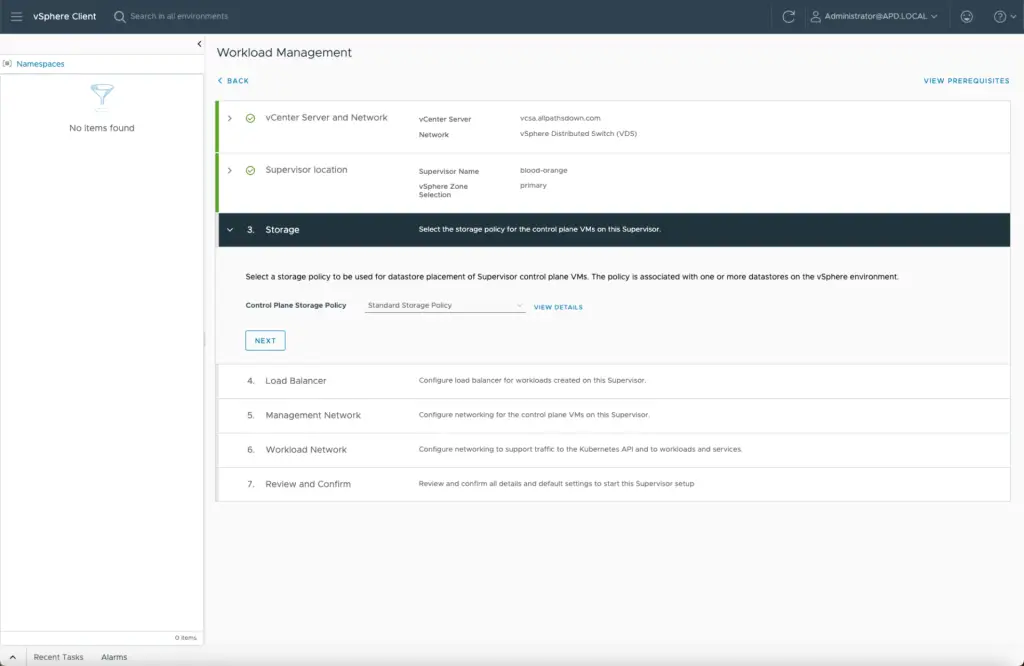

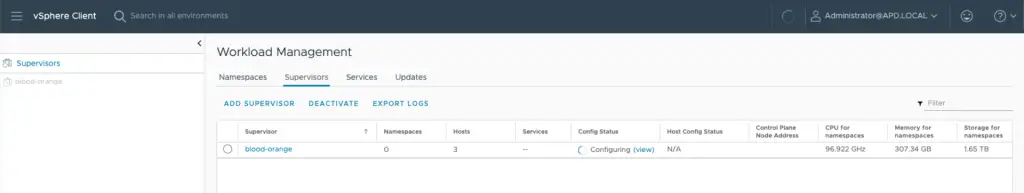

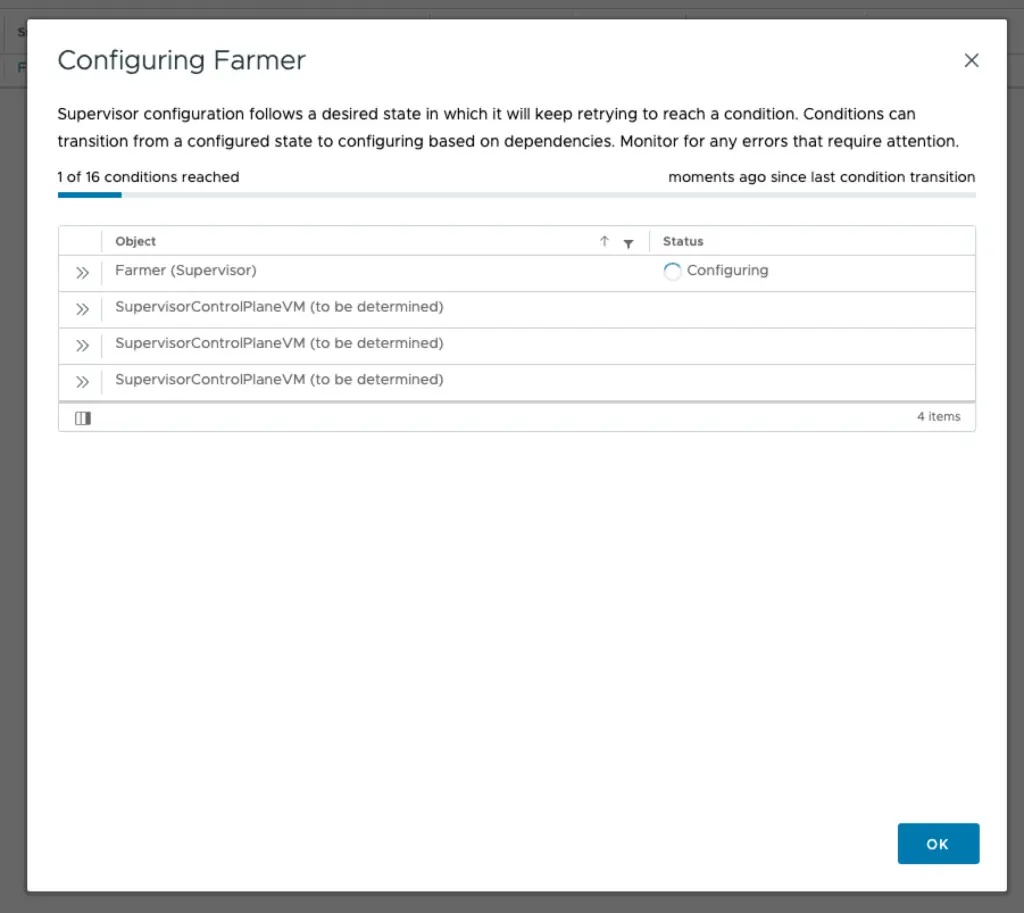

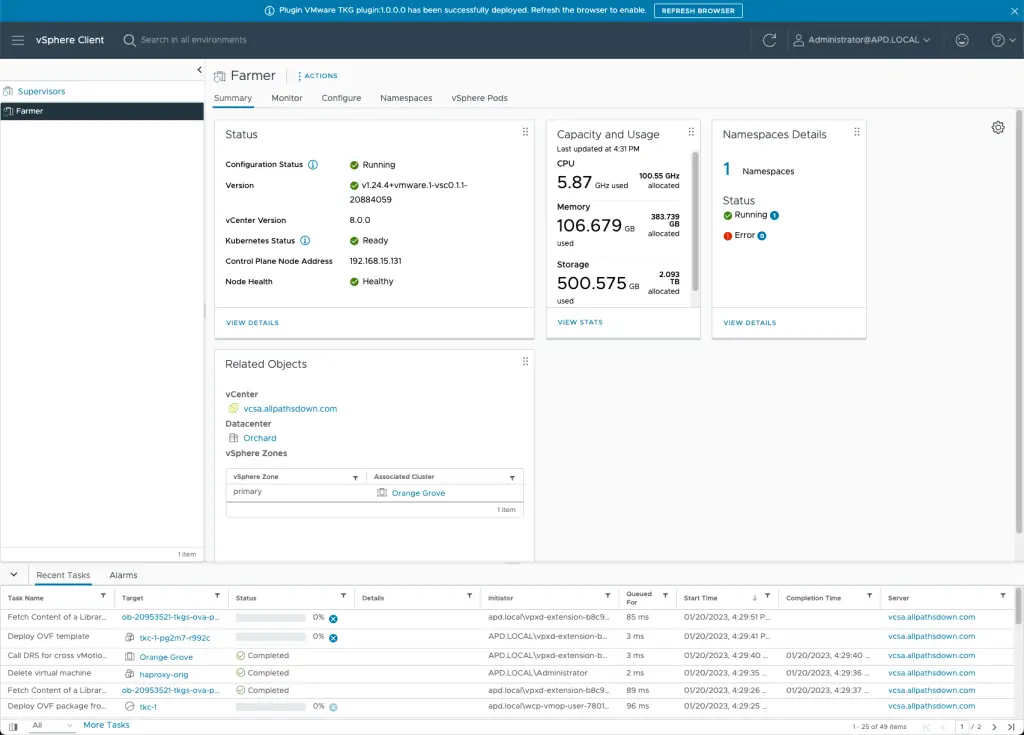

Step 1 – Deploy Kubernetes

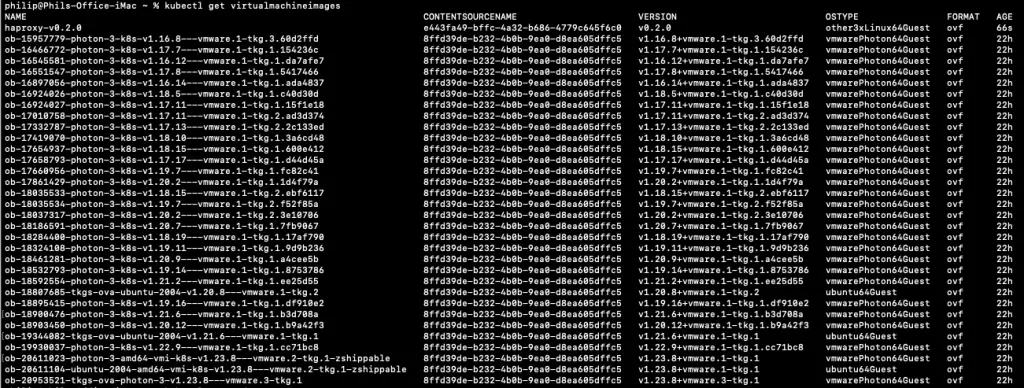

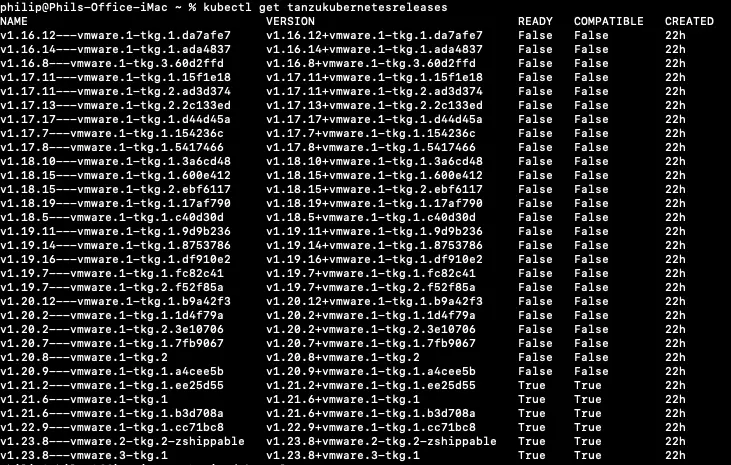

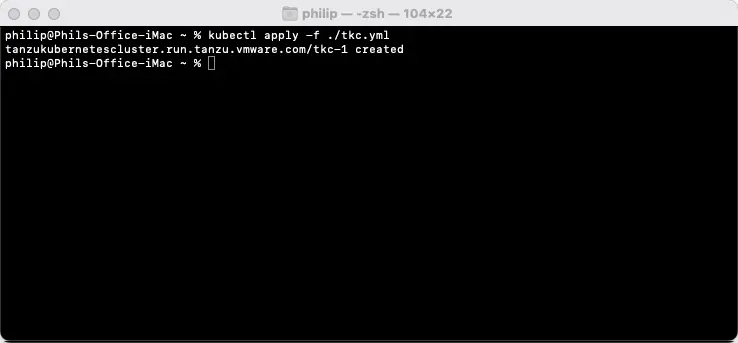

Whether this is Ubuntu, Nutanix or VMware Tanzu – find a Kubernetes deployment that works for you.

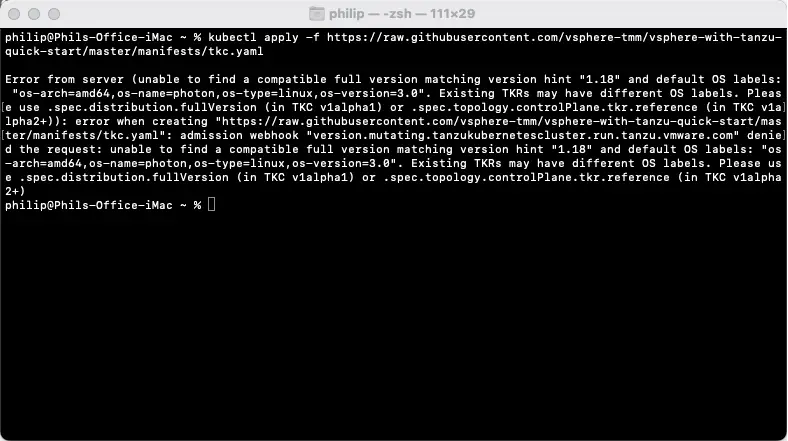

Step 2 – Deploy your First Containerized Applications on Kubernetes

A tool like Helm will be a huge help in deploying your first workloads.
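Conceptually, a packaging tool in this space takes a templated manifest plus a set of values and renders a concrete definition for each environment. The Python sketch below imitates that idea with the standard library's `string.Template`. It is not Helm's actual template engine or chart format, and the `render` function, the default values and the `registry.example.com` image are hypothetical.

```python
from string import Template

# A parameterized Deployment manifest; $placeholders are filled in per environment.
MANIFEST_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image:$tag
""")

# Defaults that apply unless an environment overrides them.
DEFAULTS = {"replicas": 1, "tag": "latest"}


def render(values: dict) -> str:
    """Merge environment-specific values over the defaults and render the manifest."""
    merged = {**DEFAULTS, **values}
    return MANIFEST_TEMPLATE.substitute(merged)


dev_manifest = render({"name": "web", "image": "registry.example.com/web"})
prod_manifest = render(
    {"name": "web", "image": "registry.example.com/web", "tag": "1.0", "replicas": 3}
)
print(prod_manifest)
```

The same template produces a single-replica dev deployment and a three-replica production one, which is the repeatability argument from earlier in this post applied to packaging.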

Step 3 – Deploy Istio and learn to pair sidecars with apps and scale deployments into multiple clusters and regions

More on this in an upcoming post…

Disclaimer: Tech Field Day, an independent organization, paid for my travel and accommodations to attend Cloud Field Day 16 where these cloud-native vendors presented. The views and opinions represented in this blog are my own and were not dictated or reviewed by the vendors.