Converging networks and condensing server footprints have been major trends in computing for the last several years. Examples range from protocols like Fibre Channel over Ethernet (FCoE) to blade centers to interconnect technologies like HP’s Virtual Connect. In the blade world, shared interconnects have changed how connectivity is delivered to a blade chassis, but until VMworld, I had not seen a similar solution for rack mount hardware.

A couple of months ago, I sent out a cryptic tweet: “It's like Christmas in September. I’m spending some time this evening with a new product. More details to come soon on the blog…” "Soon" is a relative term: I didn't expect almost three months to pass before I revealed the device and my experiences with it. But I am excited to finally talk about my experiences with the NextIO vNet I/O Maestro, officially released in mid-October.

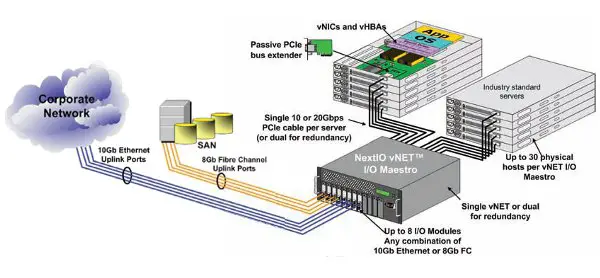

The NextIO vNet is a converged PCI interconnect solution that takes standard PCIe network and Fibre Channel adapters and provisions them, in a shared way, to individual rack mount servers. The vNet extends PCI outside the physical server through a special (albeit simple) PCI riser card and then via cables to the vNet. The provisioned resources can be reassigned to different physical hardware if required, offering a level of portability for systems and disaster recovery. In addition, the vNet does not require any specialized drivers or software on the hosts; each host simply sees a PCI device presented to it by the vNet. The nControl management software creates the virtualized WWIDs and MAC addresses that are assigned to the individual rack mount servers through an intuitive interface.
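nControl's internals are not documented here, so the following is only a minimal Python sketch of the general idea behind virtualized addressing: a pool hands out a stable virtual MAC address and WWN per server connection, independent of the physical adapters. The `AddressPool` class, the locally administered `02:00:00` MAC prefix, and the NAA 5 style WWN layout with a zero vendor field are all illustrative assumptions, not NextIO's actual scheme.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


def format_mac(n: int) -> str:
    """Locally administered unicast MAC (first octet 0x02) built from a 24-bit counter.

    The 02:00:00 prefix is a hypothetical choice for illustration.
    """
    octets = [0x02, 0x00, 0x00, (n >> 16) & 0xFF, (n >> 8) & 0xFF, n & 0xFF]
    return ":".join(f"{o:02x}" for o in octets)


def format_wwn(n: int) -> str:
    """64-bit WWN whose top nibble is 5 (NAA 5 style); vendor field left as zero (assumption)."""
    value = (0x5 << 60) | n
    # Emit the 8 bytes from most significant to least significant.
    return ":".join(f"{(value >> shift) & 0xFF:02x}" for shift in range(56, -8, -8))


@dataclass
class AddressPool:
    """Hypothetical pool: one (MAC, WWN) pair per server connection, remembered once issued."""
    next_id: int = 1
    assignments: Dict[int, Tuple[str, str]] = field(default_factory=dict)

    def assign(self, slot: int) -> Tuple[str, str]:
        if slot in self.assignments:  # stable: same connection, same addresses
            return self.assignments[slot]
        pair = (format_mac(self.next_id), format_wwn(self.next_id))
        self.next_id += 1
        self.assignments[slot] = pair
        return pair


pool = AddressPool()
print(pool.assign(3))  # ('02:00:00:00:00:01', '50:00:00:00:00:00:00:01')
print(pool.assign(7))  # ('02:00:00:00:00:02', '50:00:00:00:00:00:00:02')
```

Calling `pool.assign(3)` a second time returns the same pair, which is the property that lets an address follow a connection rather than a specific physical adapter.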

Configuration & Testing

Installation of the vNet was very straightforward. The unit is 4U in size and installs into any rack. To bring the unit online, we connected power and a single network cable for management. The unit then retrieved an address by DHCP, after which we were free to configure a static address (as most enterprises would likely do).

The unit required very little configuration on our part. It came with two Ethernet and two Fibre Channel cards installed in the interconnect slots. These dual-port cards were presented in the nControl management console along with all 30 server connections available on the back end. The administrator is free to create profiles on any of the available server connections, and the virtualized WWIDs and MAC addresses are portable between profiles. In the event of a failure of a critical system, the addresses could be reassigned to new hardware and the system brought back online, assuming the replacement hardware is similar enough that the OS will not complain and the OS disks can be moved to a compatible server model.
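Profile portability can be modeled in a few lines. The sketch below is hypothetical: `Profile`, `Fabric`, `bind`, and `fail_over` are invented names, not nControl commands, and the 30-connection limit is simply the count mentioned above. It shows a profile, carrying its virtual MAC and WWN, being moved from a failed server connection to a spare one.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Profile:
    """Hypothetical server profile: the virtual addresses travel with it."""
    name: str
    mac: str
    wwn: str
    slot: Optional[int] = None


class Fabric:
    """Toy model of a shared I/O unit with numbered server connections."""

    def __init__(self, slot_count: int = 30):
        self.slot_count = slot_count
        self.bound: Dict[int, Profile] = {}  # connection number -> profile

    def bind(self, profile: Profile, slot: int) -> None:
        if not 1 <= slot <= self.slot_count:
            raise ValueError(f"connection {slot} does not exist")
        if profile.slot == slot:
            return  # already bound here, nothing to do
        if slot in self.bound:
            raise ValueError(f"connection {slot} already holds {self.bound[slot].name}")
        if profile.slot is not None:
            del self.bound[profile.slot]  # release the old connection first
        profile.slot = slot
        self.bound[slot] = profile

    def fail_over(self, failed_slot: int, spare_slot: int) -> Profile:
        """Move the profile on a failed server's connection to a spare server."""
        profile = self.bound[failed_slot]
        self.bind(profile, spare_slot)
        return profile


fabric = Fabric()
sql = Profile("sql01", mac="02:00:00:00:00:01", wwn="50:00:00:00:00:00:00:01")
fabric.bind(sql, 4)
moved = fabric.fail_over(failed_slot=4, spare_slot=12)
print(moved.slot, moved.mac)  # 12 02:00:00:00:00:01
```

Because the addresses live in the profile rather than on the server, SAN zoning or switch configuration keyed to those addresses would not need to change after the move.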

On the rack mount server side, we only needed to install a small PCI pass-through card and connect the cable from it to the vNet; the server was then ready to be powered on. After this, all configuration is done in nControl. Drivers for the Fibre Channel and network adapters are installed in the operating system as normal. The cards in my demo unit were newer than the drivers shipped with ESX 4.1, so we had to add an OEM vendor-supplied driver to ESX, and the same would be true for Windows. In many cases, though, the OS vendor will bundle appropriate drivers and the solution will just work.

The management interface is easy to use, with drag and drop, and the vNet also supports command line configuration through SSH. The command line interface reminded me of configuring our HP or Cisco managed switches. Commands were easy to understand, and I found no limitations on what could be configured and viewed from the command line, which I know will make some Unix administrators happy.

I have always believed that a picture is worth a thousand words, so below is a logical view of how a vNet connects to a set of rack mount servers.

For my internal testing, I had two ESX hosts set up on the vNet, and I was able to test network throughput between virtual machines on both boxes at amazing speeds. Due to limitations of my test hardware, I was never able to connect the device to a SAN to properly test advanced features such as vMotion and disk I/O. The vNet requires PCIe card slots, so users should know going in that they will not be repurposing a lot of older equipment onto a vNet, although anything produced in the last three years should be compatible. I ran into hardware availability issues during my abbreviated testing since my systems were older ProLiant DL380 G4s.

I did not see boot from SAN as an option, since the Fibre Channel card did not appear during POST on the test rack systems. I believe this may be an option depending on the Fibre Channel card, but I cannot recall the exact discussion I had with NextIO about this. Boot from SAN would greatly improve the portability of OS profiles between rack servers, since you would no longer have to manually swap OS disk drives. It could also mean that a remote administrator could perform a hardware failover while offsite.

Advantages

The advantages I see with the technology are very similar to the benefits I initially saw with HP Virtual Connect, although a little different since this solution uses more industry-standard hardware. Some of the advantages include:

- Reduced need for Fibre Channel and network ports, which is really useful when few applications can consume the capacity of an 8Gb Fibre Channel port or a 10Gb Ethernet port. Just as the first phase of virtualization sought to increase processor and memory utilization in hosts, this technology can be used to increase the utilization of under-utilized connectivity.

- Less costly upgrades to the newest technology: adding a single PCI card of a new technology shares its features with all of the back-end rack mount systems.

- Server-to-server traffic that does not interact with systems outside the vNet’s domain never needs to leave the vNet device and can travel between systems at native PCIe speeds.

- A very intuitive, easy-to-learn interface for provisioning connections to back-end nodes.

- The special PCI riser cards are pass-through modules with no firmware or intelligence to patch and maintain.

- Interconnect cards are industry standard PCI cards – nothing special or proprietary.

- PCI traffic passes through the vNet unit even without the management module in service, meaning that a firmware upgrade or other outage in the module would not cause downtime.
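The port-consolidation argument in the first advantage is simple arithmetic. This Python sketch sizes shared ports from aggregate demand; the per-server averages and the 70% headroom factor are hypothetical figures chosen for illustration, not measurements from my testing, and sizing on averages ignores bursts, which is why the headroom factor is there.

```python
import math
from typing import Sequence


def ports_needed(per_server_gbps: Sequence[float], port_gbps: float,
                 headroom: float = 0.7) -> int:
    """Shared ports required if aggregate average demand may fill only
    `headroom` (a fraction, assumed 0.7 by default) of each port's line rate.
    Always returns at least one port."""
    total = sum(per_server_gbps)
    usable_per_port = port_gbps * headroom
    return max(1, math.ceil(total / usable_per_port))


# Hypothetical rack: 30 servers averaging 0.5 Gb/s of Ethernet each.
print(ports_needed([0.5] * 30, port_gbps=10.0))  # 3
```

In that hypothetical rack, 15 Gb/s of total demand fits in 3 shared 10Gb ports, compared with one dedicated port per server, or 30 in total.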

Disadvantages

To be fair, I can see some downsides to this approach, and they are the same downsides I have found with the HP Virtual Connect solutions.

- Converged solutions sometimes introduce new maintenance complexities, such as patching firmware on the PCI interconnect cards, and due to their shared nature, this could be difficult to coordinate. This is a consideration when deciding what to run on a vNet, and in my mind it makes cluster nodes and virtual hosts good candidates, since you can fail workloads over to nodes on a different vNet during maintenance periods.

- The solution could introduce a single point of failure, but this is easily overcome by using two vNet devices, although that doubles the cost of both the interconnect cards and the vNet units.

- In some ways, it introduces a new black box into the environment whose traffic cannot be inspected, which is a downside from a security standpoint.
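The maintenance concern above suggests a simple placement rule: every cluster should keep at least one node on a second vNet, so any single unit can be taken down for firmware work. A hypothetical Python check for that rule follows; the function name and the node, cluster, and unit names are made up, and the mappings would have to come from your own inventory.

```python
from collections import defaultdict
from typing import Dict, List, Set


def stranded_clusters(vnet: str,
                      node_to_vnet: Dict[str, str],
                      node_to_cluster: Dict[str, str]) -> List[str]:
    """Clusters that would lose every node if `vnet` were taken offline."""
    units: Dict[str, Set[str]] = defaultdict(set)
    for node, cluster in node_to_cluster.items():
        units[cluster].add(node_to_vnet[node])  # which vNet units this cluster uses
    # A cluster is stranded when its only unit is the one going down.
    return sorted(c for c, used in units.items() if used == {vnet})


# Hypothetical inventory: three ESX hosts spread over two vNet units.
node_to_vnet = {"esx1": "vnet-a", "esx2": "vnet-a", "esx3": "vnet-b"}
node_to_cluster = {"esx1": "prod", "esx2": "dev", "esx3": "prod"}

print(stranded_clusters("vnet-a", node_to_vnet, node_to_cluster))  # ['dev']
print(stranded_clusters("vnet-b", node_to_vnet, node_to_cluster))  # []
```

An empty result means the unit can be serviced after workloads are failed over to the surviving nodes.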

For a number of reasons, I think this solution could have a good use case with virtualization. Particularly with a customer who is just adopting virtualization, this solution could give them the ability to repurpose fairly new servers and connect them to SAN and 10Gb Ethernet with ease.

In addition, I think that a customer who needs to limit its investment in SAN and 10Gb ports would find the technology beneficial.