Simplify. Eliminate duplication of effort. Reduce costs. Play to your core competencies. Standardize. All of these are themes I have heard in my own company as we have looked at ways to improve our IT operations. Like many companies, we try to form a plan of where our IT operations should move, motivated by making IT highly available, redundant and cost efficient.

Converge. That theme is a tougher sell in my employer’s environment. There is resistance to converging, whether it is running IP telephony on our data network or replacing our Fibre Channel with Fibre Channel over Ethernet on the same core Ethernet network. The same would go for iSCSI, if we had it. We tend to keep things separate for simplicity, but there are certainly cost savings in convergence.

Why converge?

Convergence is a major trend in IT today, although it goes by many names. But like most trends and buzzwords (think Cloud), your mileage will vary among vendors and interpretations of the buzz. HP’s approach to convergence is largely centered on standardized x86 hardware for both server and storage platforms. In addition, HP’s converged storage platforms are about scale-out, with multiple controllers to handle unpredictable and unruly disk I/O with ease.

Before moving into a discussion of converged storage, though, it is worth taking a moment to talk about how things have been done in the past. For the past 20 years, storage has largely been built around a monolithic model. This model consisted of dual controllers and shelves of JBODs for capacity. The entire workload and orchestration of the array was entrusted to the controllers. With traditional workloads, the controllers performed well. In the old world, capacity was the limitation on data arrays.

Today, the demands of virtualization and cloud architectures have considerably changed the workloads that storage must handle. The I/O is unpredictable and arrives at the arrays in large bursts. This is not what our traditional arrays were designed for, and the controllers are paying the price. In a large number of cases, including at my company, the controllers become oversubscribed before the capacity of the disks is exhausted, so you never realize your full investment. Monolithic arrays also come with a high up-front price tag. When one is “full”, it is a big hassle and cost to bring in a new array and migrate. But these have been the workhorses of our IT operations. They are trusted.

Hitting the wall

Within the past couple of years, I have found the limitation of the controllers to be a significant problem within our environment. Even after significant upgrades to high-end HP EVA arrays within our company, we still see times when the disk I/O overwhelms our controllers to the point that disk latency increases and response slows.

The controller pain points are one of the driving forces behind converged storage. Converged storage is the “ability to provide a single infrastructure, that provides with server storage and networking and rack that in a common management framework,” says Lee Johns, HP’s Director of Converged Storage. “It enables a much simpler delivery of IT.”

What is different with converged storage?

Across the board, convergence leverages standardized, commodity hardware to lower costs and improve the ability to scale out. Converged storage is about taking those same x86 servers and creating a SAN that can adapt to the demands of today’s cloud and virtualization workloads. Instead of the limitations of a single set of controllers, intelligently clustered server nodes enable each server in the array to serve as a controller.

Through distribution of control, the cluster can handle bursts of I/O across all of its members more easily than a monolithic array controller can. The software becomes the major player in the array’s operations, and it really is a paradigm shift for storage administrators. No longer is storage a basic building block; it is just another application running on x86 hardware.
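To put rough numbers on that idea, here is a back-of-the-envelope sketch in Python. It is only an illustration: the burst size and per-controller limit are made-up figures, the function names are my own, and it assumes the burst divides evenly across controllers, which a real cluster does not guarantee. It is not how any HP product measures or balances load.

```python
def per_controller_load(burst_iops: int, controllers: int) -> float:
    """Evenly divide a burst of I/O requests across the available controllers."""
    if controllers < 1:
        raise ValueError("need at least one controller")
    return burst_iops / controllers


def is_oversubscribed(burst_iops: int, controllers: int, iops_limit: int) -> bool:
    """True when each controller's share of the burst exceeds its limit."""
    return per_controller_load(burst_iops, controllers) > iops_limit


# Hypothetical numbers, chosen only for illustration.
BURST_IOPS = 60_000
LIMIT_PER_CONTROLLER = 20_000

# A monolithic array with two controllers versus a cluster of eight nodes.
dual_controller = is_oversubscribed(BURST_IOPS, 2, LIMIT_PER_CONTROLLER)
eight_node_cluster = is_oversubscribed(BURST_IOPS, 8, LIMIT_PER_CONTROLLER)
print(dual_controller, eight_node_cluster)  # True False
```

With these assumed figures, the same burst that swamps a pair of controllers lands as a modest load on each of eight nodes. The point is the shape of the math, not the specific values.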

Diving deep into the HP P4800 G2 SAN solution

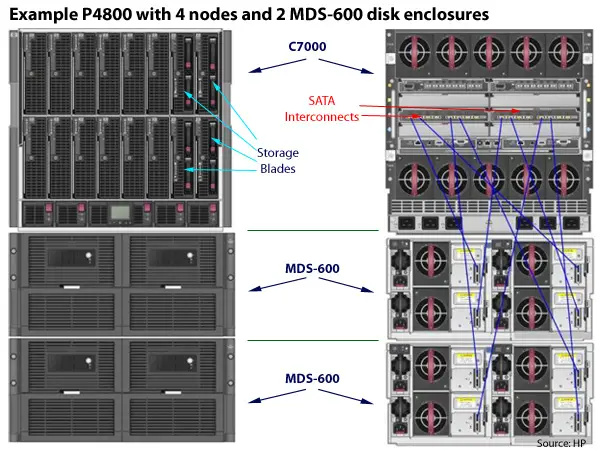

Perhaps the best way to understand converged storage is to look at a highly evolved converged data array. On Tuesday, Dale Degen, the worldwide product manager for the HP P4000 arrays, introduced our blogger crew to the P4800 G2, built on HP’s C7000 BladeSystem chassis.

The core of the LeftHand Networks arrays, now the P4000 series, is the SAN/iQ software. SAN/iQ takes individual storage blade servers and clusters them into an array of controllers. This clustering allows you to scale out as you need additional processing ability to handle the workload. Each of the storage blades is connected to its own MDS-600 disk enclosure via a SAS switch in the interconnect bays of the blade chassis. The individual nodes of the array mirror and spread the data over the entire environment. One of the best things about the SAN/iQ software is its ability to replicate to a different datacenter and handle seamless failover if one site is lost. (Today, in my Fibre Channel world, if I lose an array, it takes presentation changes to bring up my replica from another EVA, so this is a huge plus.)
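The “mirror and spread” idea can be sketched in a few lines of Python. To be clear, this is a toy model under my own assumptions, not SAN/iQ’s actual layout algorithm: the node names are hypothetical, and the placement rule (each block goes to two consecutive nodes, rotating the starting node) is simply one easy way to show mirroring combined with striping.

```python
def place_blocks(num_blocks: int, nodes: list[str], copies: int = 2) -> dict[int, list[str]]:
    """Assign each block to `copies` consecutive nodes, rotating the start node."""
    if copies > len(nodes):
        raise ValueError("cannot keep more copies than there are nodes")
    layout = {}
    for block in range(num_blocks):
        start = block % len(nodes)
        layout[block] = [nodes[(start + i) % len(nodes)] for i in range(copies)]
    return layout


def readable_after_failure(layout: dict[int, list[str]], failed: str) -> bool:
    """True if every block still has at least one copy on a surviving node."""
    return all(any(node != failed for node in holders) for holders in layout.values())


nodes = ["blade-1", "blade-2", "blade-3", "blade-4"]  # hypothetical node names
layout = place_blocks(8, nodes)

print(layout[0])  # ['blade-1', 'blade-2']
# Losing any single node still leaves a copy of every block somewhere else.
print(all(readable_after_failure(layout, n) for n in nodes))  # True
```

Because every block lives on two different nodes, and the blocks are spread across all of them, both the data and the work of serving it are shared by the whole cluster rather than by one pair of controllers.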

By building the P4800 G2 on the HP BladeSystem, you can also take advantage of the chassis’ native abilities, such as the shared 10Gb Ethernet interconnects and Flex-10. For blades in the same chassis as the P4800, the iSCSI traffic never has to leave the enclosure, and it can flow at speeds up to 10Gb (unless you have split your connection into multiple NICs).

From an administrative standpoint, the P4800 is managed just like any other blade server in the C7000 enclosure. These blades are standard servers, except that they include the SAS interface cards. They include standard features like iLO (Integrated Lights-Out) management, Virtual Connect for Ethernet network configuration, and the Onboard Administrator for overall blade health and management.

Within a single chassis, the P4800 can scale up to 8 storage blades (half of the chassis). The iSCSI SAN is not limited to presentation within the same C7000, or within BladeSystem at all. It is a standard iSCSI SAN that can be presented to any iSCSI-capable server in the datacenter.

The P4800 G2 is available in two ways. Customers new to the C7000 may purchase a factory-integrated P4800 G2 and C7000 chassis together. Existing customers with a C7000 and available blade slots can integrate the P4800 G2 by purchasing blades, SAS interconnects and one or more MDS-600 disk enclosures. Existing customers must also purchase the installation services for the P4800 G2.

The P4800 is also a scale-up technology. Customers do not need to migrate everything at one time. It provides a single infrastructure that you can move onto over time by adding storage blades and MDS-600 disk enclosures.

As a quick side note, this is the first entry for Thomas Jones’ Blogger Reality Show, sponsored by HP and Ivy Worldwide. I ask that readers be as engaged and responsive as possible during this contest. I would like to see the comments and conversations that these entries spark, and tweets and retweets if it interests you. I also request that you vote for this entry using the thumbs up/thumbs down at the top of this page. As I said earlier, our readers play a large part in scoring, so participate in my blog and all the others!