Over the past few months, I have had the opportunity to compare physical workloads directly against virtualized ones. As workloads have moved into vSphere in the environment I support, we have been able to compare them head to head, pinpoint bottlenecks, and remedy them. I have also spent a considerable amount of time researching how to run business critical applications inside vSphere.

Power Profiles Matter for Performance

In Elias Khnaser’s PluralSight course Best Practices for Running XenApp/XenDesktop on vSphere, he recommends checking the power management settings of your servers, particularly blade servers, since they may ship with a dynamic power profile based on processor performance states (P-states). P-states conserve energy by running the processor at different power levels. With a dynamic profile, the server may lower power to the CPU to save energy, at the expense of performance.

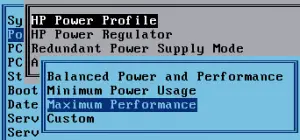

I checked this on my HP ProLiant blade servers, and they were set to the default “Balanced Power and Performance.” The label doesn’t sound all that bad, but on further research I found that this setting enables dynamic power management within the server. With it enabled, the CPUs appeared to power down and then take additional time to power back up when demand from vSphere increased.

Changing the Power Management setting to “Maximum Performance” keeps the CPU fully powered at all times, although it may draw more power to do so. In my initial testing, this improved performance in the business critical applications where we measure transaction times: transaction times dropped by nearly half.
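To reproduce this kind of before/after comparison, one approach is to time a representative transaction repeatedly under each BIOS power profile and compare the medians. The sketch below assumes the transaction can be wrapped in a Python callable; `median_ms` and `percent_reduction` are hypothetical helpers, and the 800 ms and 420 ms figures are illustrative only, not our measurements.

```python
import statistics
import time
from typing import Callable


def median_ms(transaction: Callable[[], None], runs: int = 20) -> float:
    """Run a transaction `runs` times and return the median wall-clock time in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        transaction()
        samples.append((time.perf_counter() - start) * 1000.0)
    # The median is less sensitive than the mean to a single slow outlier run.
    return statistics.median(samples)


def percent_reduction(before_ms: float, after_ms: float) -> float:
    """Percentage by which the transaction time dropped (positive means faster)."""
    if before_ms <= 0:
        raise ValueError("before_ms must be positive")
    return (before_ms - after_ms) / before_ms * 100.0


# Hypothetical figures: 800 ms under "Balanced Power and Performance",
# 420 ms after switching to "Maximum Performance".
print(f"transaction time reduced by {percent_reduction(800, 420):.1f}%")
# prints "transaction time reduced by 47.5%"
```

Measure both profiles with the same data set and the same number of runs; otherwise caching effects can swamp the difference you are looking for.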

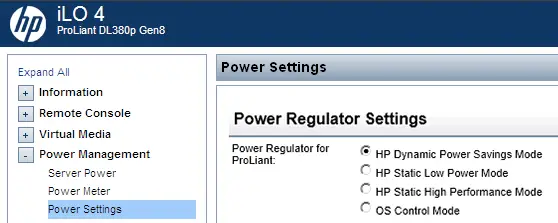

HP has a technical whitepaper outlining Power Regulation in ProLiant servers. I found two ways to adjust the power profile: by entering the BIOS during boot, or through iLO on ProLiant systems. On ProLiant G7 and Gen8 systems, a power profile change made through iLO takes effect immediately. On G6 and earlier systems with iLO 2, it appears to take effect on the next boot. I also found that vSphere presents different options at the hypervisor level depending on the hardware profile setting. When the server is in dynamic mode, vSphere can configure a profile from the host’s Configuration tab. When the server is set to “Maximum Performance”, the option to change or set a profile is not available in vSphere.

In addition, VMware knowledge base article 1018206 provides troubleshooting steps for poor performance caused by power settings. It covers Dell servers as well as HP ProLiant servers.

Finally, there is one more place to check: the power profile settings within your guest OS. Setting the guest to a high performance profile may also improve performance.
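As a minimal sketch of the guest-side change, the helper below builds the command that selects a high performance profile. It assumes a Windows guest (where `powercfg /setactive SCHEME_MIN` activates the built-in "High performance" plan) or a Linux guest with the `cpupower` utility installed and a CPU frequency driver exposed to the VM, which many virtual machines do not have. The function names are hypothetical, and the commands require administrator or root rights when actually run.

```python
import platform
import subprocess


def high_performance_command(os_name: str) -> list[str]:
    """Return the command that switches a guest OS to a high performance power profile."""
    if os_name == "Windows":
        # SCHEME_MIN is powercfg's alias for the built-in "High performance" plan.
        return ["powercfg", "/setactive", "SCHEME_MIN"]
    if os_name == "Linux":
        # Assumes the cpupower tool is installed and the guest exposes a cpufreq driver.
        return ["cpupower", "frequency-set", "-g", "performance"]
    raise ValueError(f"no power profile command known for {os_name!r}")


def apply_high_performance(dry_run: bool = True) -> list[str]:
    """Build the command for the current OS and, unless dry_run is set, execute it."""
    cmd = high_performance_command(platform.system())
    if not dry_run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmd
```

Leaving `dry_run=True` as the default lets you review the command before changing anything on a production guest.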

Training: Business Critical Apps on vSphere

VMware has made some great online training available to all customers, and the same courses are available (through Partner University) to partners who are seeking accreditation for virtualizing business critical apps. Each course is a 3-hour, online, self-paced class.

- Virtualizing Microsoft SQL Server with VMware

- Virtualizing Microsoft SQL Server 2012 with VMware

- Virtualizing Microsoft Exchange 2013 on VMware vSphere

- Virtualizing Oracle Database on VMware

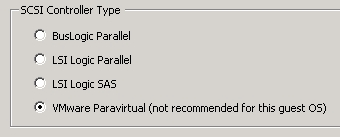

Paravirtualized SCSI driver

In all of the courses listed above, VMware recommends using Paravirtualized SCSI (PVSCSI) adapters in guest OSes when running business critical apps on vSphere. According to VMware KB article 1010398, Paravirtualized SCSI adapters are high-performance disk controllers that deliver better throughput and lower CPU utilization in guest OSes. The same KB article also gives step-by-step instructions for adding the controller and driver to guest OSes.
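To audit which VMs still use another controller type, one option is to inspect each VM's `.vmx` file, where a controller's type appears as a line such as `scsi0.virtualDev = "pvscsi"`. The sketch below assumes you already have the `.vmx` text in hand (for example, downloaded from the datastore); the helper names and the sample file are hypothetical.

```python
import re

# Matches lines such as: scsi0.virtualDev = "pvscsi"
VIRTUAL_DEV = re.compile(r'^\s*(scsi\d+)\.virtualDev\s*=\s*"([^"]*)"', re.IGNORECASE)


def scsi_controllers(vmx_text: str) -> dict[str, str]:
    """Map each SCSI controller named in a .vmx file to its virtual device type."""
    controllers = {}
    for line in vmx_text.splitlines():
        match = VIRTUAL_DEV.match(line)
        if match:
            controllers[match.group(1).lower()] = match.group(2).lower()
    return controllers


def non_pvscsi(vmx_text: str) -> list[str]:
    """Return the controllers that are not using the Paravirtualized SCSI adapter."""
    return sorted(name for name, dev in scsi_controllers(vmx_text).items() if dev != "pvscsi")


# Hypothetical excerpt from a .vmx file.
sample = '''
scsi0.present = "TRUE"
scsi0.virtualDev = "lsisas1068"
scsi1.present = "TRUE"
scsi1.virtualDev = "pvscsi"
'''
print(non_pvscsi(sample))  # prints ['scsi0']
```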

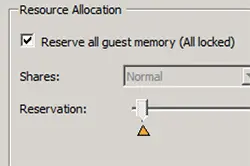

Reserve the Entire Memory Allotment

VMware recommends reserving the entire memory allotment for virtual machines running business critical apps, which ensures that these high-performance applications never contend for memory. vSphere 5 and higher, with virtual hardware version 8 or higher, provides a checkbox that reserves the entire vRAM allotment and keeps the reservation in step as the allocation changes.
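To spot-check whether a VM's reservation covers all of its memory, you can compare two `.vmx` settings: `memsize` (configured memory) and `sched.mem.min` (the memory reservation). The sketch below assumes both values are in MB and that a missing `sched.mem.min` means no reservation; it does not read the checkbox itself, and the helper names are hypothetical.

```python
import re

# Matches simple key = "value" lines in a .vmx file.
VMX_LINE = re.compile(r'^\s*([\w.:]+)\s*=\s*"([^"]*)"')


def parse_vmx(vmx_text: str) -> dict[str, str]:
    """Parse key = "value" lines from a .vmx file into a dict (keys lowercased)."""
    settings = {}
    for line in vmx_text.splitlines():
        match = VMX_LINE.match(line)
        if match:
            settings[match.group(1).lower()] = match.group(2)
    return settings


def memory_fully_reserved(vmx_text: str) -> bool:
    """True if the reservation (sched.mem.min, assumed MB) covers memsize (MB)."""
    settings = parse_vmx(vmx_text)
    memsize = int(settings.get("memsize", "0"))
    reserved = int(settings.get("sched.mem.min", "0"))
    return memsize > 0 and reserved >= memsize


print(memory_fully_reserved('memsize = "16384"\nsched.mem.min = "16384"\n'))  # prints True
```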

Avoid Thin on Thin Storage Provisioning

In my experience, vSphere thin provisioning works well on arrays that lack native thin provisioning. If the array does support thin provisioning, use it at the array level and use thick provisioning in vSphere instead; which thick provisioning option to use varies by array.

While moving an application onto vSphere in my environment, we had development set up on an array without thin provisioning capabilities and production on an array with thin provisioning. With thin on thin, the production environment took more than twice as long to perform the data build-out. Once we noticed this, our investigation showed that thin on thin was causing the performance degradation: once the blocks within the VMDK had been allocated, the degradation disappeared. Our theory is that the slowdown occurs while new blocks are being allocated for data.

Converting the production virtual machine to thick disks, by performing a Storage vMotion to a new LUN, let the build complete in less time than on the development VM backed by the array without thin capabilities. In my case, development was backed by an EVA without thin capabilities and production by a 3PAR with thin-provisioned LUNs. The recommendation for 3PAR is to use “Thick Eager Zero” disks, since the 3PAR recognizes and zeroes out the space of eager-zeroed disks. I have seen different recommendations for other arrays, many of which recommend “Thick Lazy Zero” disks.
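The rule of thumb from this section can be written down as a small decision function. This is only a sketch of the guidance above, not a vendor specification: `recommended_vmdk_format` is a hypothetical name, only the 3PAR case reflects a specific recommendation, and for any other thin-provisioning array you should confirm the format with the vendor.

```python
def recommended_vmdk_format(array_thin_provisions: bool, array_vendor: str = "") -> str:
    """Pick a vSphere disk format following this post's rule of thumb (not a vendor spec)."""
    if not array_thin_provisions:
        # The array cannot thin provision, so let vSphere do it.
        return "thin"
    if array_vendor.lower() == "3par":
        # Avoid thin on thin; 3PAR guidance favors eager-zeroed thick disks.
        return "thick eager zeroed"
    # Other thin-provisioning arrays: lazy zeroed is a common recommendation,
    # but check the array vendor's guidance.
    return "thick lazy zeroed"


print(recommended_vmdk_format(True, "3PAR"))  # prints thick eager zeroed
```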

Thin on thin also makes it harder to estimate and accommodate growth on an array over time. Any thin provisioning technology should be monitored for over-allocation to ensure there is adequate room for growth on the array. Thin on thin can obscure this view, so proceed with caution and expect to give up some performance.
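Monitoring for over-allocation comes down to two numbers per thin pool: how much capacity has been promised relative to what physically exists, and how much free space remains. The sketch below computes both. The `ThinPool` class, the 2.0x ratio limit and the 20% free-space floor are hypothetical values chosen for illustration, not vendor guidance; plug in your array's reported figures and your own thresholds.

```python
from dataclasses import dataclass


@dataclass
class ThinPool:
    physical_gb: float     # usable capacity on the array
    provisioned_gb: float  # total size of thin LUNs/VMDKs promised to hosts
    consumed_gb: float     # capacity actually written

    @property
    def overallocation_ratio(self) -> float:
        """Provisioned capacity as a multiple of physical capacity."""
        return self.provisioned_gb / self.physical_gb

    @property
    def free_pct(self) -> float:
        """Share of physical capacity not yet consumed, in percent."""
        return (self.physical_gb - self.consumed_gb) / self.physical_gb * 100.0


def needs_attention(pool: ThinPool, max_ratio: float = 2.0, min_free_pct: float = 20.0) -> bool:
    """Flag a pool that is over-allocated beyond max_ratio or running low on free space."""
    return pool.overallocation_ratio > max_ratio or pool.free_pct < min_free_pct


pool = ThinPool(physical_gb=10_000, provisioned_gb=18_000, consumed_gb=8_500)
print(f"{pool.overallocation_ratio:.1f}x allocated, {pool.free_pct:.0f}% free")
# prints "1.8x allocated, 15% free"
```

With thin on thin, remember that the array only sees blocks the VMDKs have actually written, so the provisioned figure should include the full size of the thin VMDKs, not just what the array reports as allocated.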