Last year at HP Discover, I was preparing for my first StoreVirtual VSA deployment and spoke with a couple of different folks from the StoreVirtual team. The main piece of advice I was given was to use raw disk mappings under the StoreVirtual VSA. At first, I questioned this, but there is good logic behind the recommendation. Note that my source is HP staff, but this isn’t in their official guidelines anywhere that I have found…

Scenario without RDMs

So, take the scenario where we do not use raw disk mappings with the StoreVirtual VSA. You would create local VMFS datastores on the local drives connected to the host. You would only need one VMFS datastore, and you would deploy the VSA onto it. Then you would create additional VMDK files for the data disks behind the StoreVirtual VSA on the same VMFS datastore. You could create multiple VMFS datastores and do the same thing, if you like.

Now, if you created your VMDK data disk to use the full VMFS capacity, vSphere would throw a disk space alarm for that VMFS datastore. The alarms cannot be customized to alarm on the VMFS datastores created on StoreVirtual VSA storage (which you will want later) while ignoring the local disk VMFS datastores. So, you would always have alarm conditions if you used the full size of the VMFS.

First point, to avoid alarms, you would need to consume 80% or less of the VMFS capacity. But, why would you ever want to leave capacity on the table, never to be used? You want to maximize the capacity you have in your local servers.
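To put a number on the capacity left unused, here is a small arithmetic sketch. The 4000 GB datastore size is a made-up figure for illustration; the 80% ceiling is the one mentioned above.

```shell
#!/bin/sh
# Hypothetical figures for illustration only; substitute your own datastore size.
datastore_gb=4000      # assumed usable VMFS capacity in GB (not from a real host)
max_used_pct=80        # usage ceiling mentioned in the text

# Integer arithmetic: capacity you can hand to the VSA, and what stays idle.
usable_gb=$(( datastore_gb * max_used_pct / 100 ))
stranded_gb=$(( datastore_gb - usable_gb ))

echo "usable=${usable_gb}GB stranded=${stranded_gb}GB"
```

With these assumed numbers, 800 GB of local disk would sit idle just to keep the alarm quiet.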

Second point, you are never going to snapshot your VSAs, so you don’t need any spare capacity for snapshot delta disks. With the VSA, you have multiple copies of the data on additional StoreVirtual nodes (VSA or physical), and that is your redundancy. In fact, snapshots at the VSA level are dangerous. You should avoid these at all costs.

Scenario with RDMs

My recommendation would be to create two local disks on the ESXi host that will run the VSA. The first disk should be small (possibly RAID1) and will be formatted as a VMFS datastore. The second, possibly RAID5 or RAID6, would be your storage for the VSA. It would not be formatted as VMFS and would instead be presented to the VSA as a raw disk mapping. This allows you to use 100% of the raw capacity, with no alarms and direct access to the underlying disk.

When you deploy the VSA, do not specify any data disks during the deployment. I used the Zero To VSA toolkit for my deployments and it allows this configuration to be deployed.

The complication with the RDM plan is that vSphere does not show local disks as available storage to present as a raw disk mapping. You will need to do some work with vmkfstools to create a VMDK mapping file on the primary VMFS, alongside the StoreVirtual VSA files, and point it at the physical device behind the second volume. Once you create the VMDK, you may attach it to the VSA and begin your configuration.

The first step is to identify the device ID of the unformatted data disk. To do this, open SSH or a remote shell to the ESXi host, navigate to /vmfs/devices/disks (also reachable as /dev/disks), and run ls -la to list the device names. Hopefully you only have two or three devices listed: possibly your boot disk, your VMFS disk and your data disk. You may identify your data disk because it will be the name without a symbolic link associated. The ID you are looking for will begin with “naa.”
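If the listing is long, a small filter can narrow it to whole-disk naa.* entries. This is only a sketch: the function name list_candidate_disks is my own, and it assumes the usual ESXi convention that partition entries carry a ":<number>" suffix. It does not decide which disk is the data disk for you; you still need to confirm that by hand.

```shell
#!/bin/sh
# list_candidate_disks DIR   (hypothetical helper, not part of ESXi)
# Prints whole-disk naa.* entries found in DIR and skips partition
# entries, which are assumed to look like naa.<id>:<partition number>.
list_candidate_disks() {
    dir=$1
    for path in "$dir"/naa.*; do
        [ -e "$path" ] || continue        # glob matched nothing
        name=${path##*/}                  # strip the directory part
        case $name in
            *:*) continue ;;              # partition, not the whole device
        esac
        echo "$name"
    done
}

# On an ESXi host the device directory would normally be /vmfs/devices/disks.
list_candidate_disks "${1:-/vmfs/devices/disks}"
```

Run it without arguments on the host, or pass another directory as the first argument.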

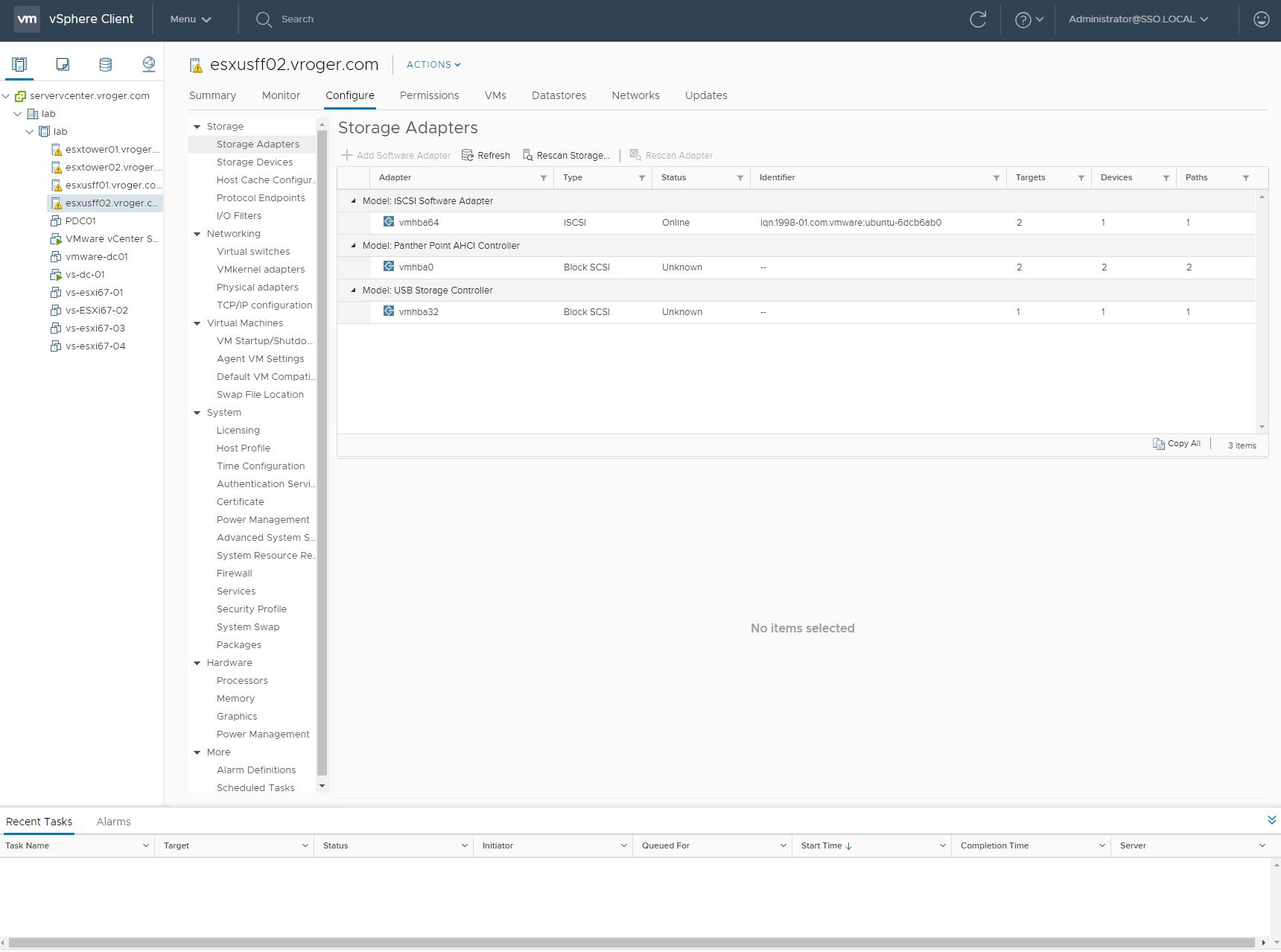


The vmkfstools command to create the VMDK raw disk mapping file is:

[code]vmkfstools -z /vmfs/devices/disks/ID /vmfs/volumes/VMFSDatastore/SVApplianceName/SVApplianceName_2.vmdk[/code]

Replace ID with the device ID you identified, change VMFSDatastore to the name you gave your VMFS datastore when you created it, and change SVApplianceName to the directory name of your appliance on this host. Lastly, specify a name for the VMDK file (SVApplianceName_2.vmdk in the example).
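Because there are several substitutions to make, it can help to assemble the command from variables and print it for review before running anything. The sketch below does only that. The function name build_rdm_command and all three example values are placeholders of mine, and it assumes the standard ESXi locations /vmfs/devices/disks and /vmfs/volumes.

```shell
#!/bin/sh
# build_rdm_command DEVICE_ID DATASTORE APPLIANCE [DISK_INDEX]   (hypothetical helper)
# Prints the vmkfstools invocation instead of running it, so it can be
# checked first. -z creates a physical compatibility (pass-through) mapping.
build_rdm_command() {
    device_id=$1
    datastore=$2
    appliance=$3
    index=${4:-2}                         # defaults to the _2.vmdk name used above
    echo "vmkfstools -z /vmfs/devices/disks/${device_id} /vmfs/volumes/${datastore}/${appliance}/${appliance}_${index}.vmdk"
}

# All three values below are placeholders, not real identifiers.
build_rdm_command "naa.EXAMPLE_DEVICE_ID" "LocalVMFS" "StoreVirtual1"
```

Once the printed command looks right, paste it into the ESXi shell. Afterwards, running vmkfstools -q against the new VMDK should report which device it maps to, which is a reasonable sanity check before attaching it.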

Now, edit the settings of the VSA virtual machine and attach the VMDK file you just created as an existing hard disk. Browse to the location you specified in vmkfstools and select the VMDK file. Repeat this for each virtual appliance.

Lastly, boot the VSA and use the StoreVirtual Centralized Management Console and its wizards to set up the VSA and cluster the individual VSAs into a software storage array.