Last week, I posted about HPE’s Synergy Platform – their vision of Composable Infrastructure. My post had a hard slant towards the hardware components of the solution because that is the world that I generally live in. I received some comments about the post and I always appreciate that kind of feedback. I don’t always get it right when I write, and this time it is less about being right or wrong than about not painting the complete picture. And in full disclosure, the two people I was talking with are not HPE employees – both were industry folks at other companies.

So, what did I miss in post one? Primarily, I shortchanged the software component of the solution and, as one friend pointed out, the hardware alone does not make this a composable or even software-defined solution. So, let’s dig a little deeper into what HPE has laid out during my briefings and see if I can’t paint a fuller picture.

Composable, driven by Software

The software that drives Synergy Platform is two-pronged: the Synergy Composer and the Synergy Image Streamer, both of which I covered to some degree in the first post. Each piece of software is installed as a physical hardware module. Each module has a specific job and a specific application running on it, but they also work together.

As a recap, Composer is the management interface. Composer is able to manage a large pool of Synergy Frames [up to 21] and their connected resources. It is based on HPE OneView – also a management software – but it has been customized and extended for the Synergy Platform. Utilizing OneView as the base for the system gives Synergy an API to build upon.

OneView is a strong management system built around server profiles. In OneView, you create a definition – a server profile – that includes all of the settings that get instantiated on the hardware. This metadata can include server settings down to the BIOS, the network definitions, the storage definition, and firmware/driver levels. Profiles can be cloned and, as of OneView 2.0, the idea of profile templates was added. Templates allow you to set definitions and then clone out individual profiles with different IP addresses and names, but the profiles retain their tie to the template. With templates, you may change a setting, like the networking configuration or firmware level, and then push out that change across all the profiles created from the template. During the next reboot, these settings are applied. This is great for firmware and driver patching or for adding a new network uplink to a cluster of ESXi hosts, for example. All of these features are great for IT operations working with traditional IT infrastructure.
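To make the template-to-profile relationship concrete, here is a rough sketch in Python. It is only a toy model of the behavior described above; every class, method and field name is made up for illustration, and none of it is OneView's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class ProfileTemplate:
    """Shared settings for a group of servers (illustrative names only)."""
    name: str
    settings: dict
    profiles: list = field(default_factory=list, repr=False, compare=False)

    def new_profile(self, hostname: str, ip: str) -> "ServerProfile":
        # Each profile gets its own name and IP but keeps a link to the template.
        profile = ServerProfile(hostname, ip, self, dict(self.settings))
        self.profiles.append(profile)
        return profile

    def update(self, **changes) -> None:
        # A template change is only staged; nodes pick it up on their next reboot.
        self.settings.update(changes)


@dataclass
class ServerProfile:
    hostname: str
    ip: str
    template: ProfileTemplate
    applied: dict  # settings currently in effect on the hardware

    @property
    def pending(self) -> dict:
        """Template settings that differ from what is applied right now."""
        return {k: v for k, v in self.template.settings.items()
                if self.applied.get(k) != v}

    def reboot(self) -> None:
        # Rebooting brings the node in line with its template.
        self.applied = dict(self.template.settings)


esxi = ProfileTemplate("esxi-cluster", {"firmware": "1.0", "uplinks": 2})
esx01 = esxi.new_profile("esx01", "10.0.0.11")
esx02 = esxi.new_profile("esx02", "10.0.0.12")
esxi.update(firmware="1.1")   # one change, staged for every linked profile
esx01.reboot()                # esx01 is now current; esx02 still has it pending
```

The point of the sketch is the single `update` call: the change is made once on the template and every linked profile sees it as pending until its next reboot.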

Synergy Platform, however, has goals beyond easing management of traditional IT. It is targeted at new-style IT or cloud-centric IT. So, building upon the base of server profiles, Synergy Composer takes the server profiles and adds some additional personality – and VMware administrators may see a lot of similarities between what Composer does and how Virtual Appliances work in vSphere. You can set IP and naming conventions for deploying systems en masse with Synergy – so Composer has to adopt these functions into its toolset – but it also adopts the ability to pass that configuration to the booting OS. So, what I’m rambling towards and trying to explain is that Synergy Composer encompasses the OS personality, in addition to the hardware personality. When I think of the OS personality, it is the ‘what makes each node different’ versus the hardware personality as the ‘what makes them the same.’
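As a small illustration of the two personalities, the Python sketch below stamps out per-node names and addresses from a convention and merges them with a shared hardware definition. The function name, the naming scheme and the settings keys are my own assumptions for the example, not anything Composer exposes.

```python
import ipaddress


def os_personalities(prefix: str, first_ip: str, count: int, start: int = 1):
    """Yield one (hostname, ip) pair per node from a simple convention."""
    base = ipaddress.ip_address(first_ip)
    for offset in range(count):
        # e.g. esx01 / 10.0.0.11, esx02 / 10.0.0.12, ...
        yield f"{prefix}{start + offset:02d}", str(base + offset)


# Hardware personality: what makes the nodes the same (illustrative keys).
hardware_personality = {"bios_profile": "virtualization", "nics": 2}

# OS personality: what makes each node different.
nodes = [
    {"hostname": host, "ip": ip, **hardware_personality}
    for host, ip in os_personalities("esx", "10.0.0.11", count=3)
]
```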

API & a different audience

One of HPE’s major goals with Synergy Platform was to expose everything available in the GUI management interface as an API, also. And beyond that, they wanted it to be as simple as possible to create and deploy an instance of compute. HPE’s focus here – I’ll admit – seems almost obsessive: allowing compute to be created or torn down with a single line of code. Another major goal was to allow developers to call and create compute instances in their own language – in code – where they feel most comfortable. It is an appeal to a very different audience than the IT operations staff that HPE normally caters to with its hardware solutions.

All of that said, the API makes it possible for forward-thinking administrators to automate and extend their processes into other systems. The API makes it possible to tie server creation into the ticketing system and automate it, regardless of which vendor created the ticketing system. It makes it possible to fold server creation into scripted jobs in enterprise batch solutions or anywhere else. It opens up a very wide set of possibilities.
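Here is a hedged sketch of what the ticketing tie-in could look like in Python. I have not worked with the Synergy API hands-on, so the request path, the payload fields and the ticket layout are all placeholders; the transport is passed in so the example runs without touching a real system.

```python
import json


def request_from_ticket(ticket: dict) -> dict:
    """Translate an approved ticket into a compute request (hypothetical schema)."""
    if ticket.get("status") != "approved":
        raise ValueError("ticket is not approved")
    body = {
        "template": ticket["template"],
        "count": ticket["count"],
        "ticket": ticket["id"],
    }
    return {
        "method": "POST",
        "path": "/example/compute",  # placeholder path, not a documented endpoint
        "body": json.dumps(body, sort_keys=True),
    }


def fulfil(ticket: dict, send):
    """Build the request and hand it to whatever transport the caller supplies."""
    return send(request_from_ticket(ticket))


# Usage with a stand-in transport that just records what would be sent.
outbox = []
fulfil(
    {"id": "CHG-1001", "status": "approved", "template": "esxi-cluster", "count": 2},
    outbox.append,
)
```

Keeping the ticket-to-request translation separate from the transport means the same function works whether the trigger is a ticketing webhook, a batch scheduler or a script.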

Streaming the OS

The OS personality is handled in conjunction with the Image Streamer. The streamer holds the golden master images of the OS that will run on the server profiles when they are deployed to compute nodes and powered on. The Image Streamer takes an OS image, presents it over iSCSI to the host, and then boots the system. While Image Streamer could do this for traditional OSes, it is not targeted there. The Image Streamer is intended to be used with stateless OS deployments – like ESXi hosts or container hosts. This means that the boot process needs to include a scripted process to fully configure the host. The settings for each instance are provided by Composer and the individual profile. vSphere offers a very similar stateless ESXi option with vSphere Auto Deploy. The difference here is that you’re using iSCSI to boot instead of PXE.
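The scripted step can be pictured as one shared script in the golden image that gets filled in with each node's settings at boot. The Python sketch below shows only that idea; the script commands are invented placeholders, not real ESXi or Image Streamer syntax, and the settings keys are assumptions.

```python
from string import Template

# Hypothetical first-boot script carried by the golden image.
FIRST_BOOT = Template(
    "set-hostname $hostname\n"
    "set-ip $ip/$prefix_len\n"
    "set-gateway $gateway\n"
)


def personalize(settings: dict) -> str:
    """Fill the shared script with one node's settings; raises KeyError if one is missing."""
    return FIRST_BOOT.substitute(settings)


script = personalize(
    {"hostname": "esx01", "ip": "10.0.0.11", "prefix_len": 24, "gateway": "10.0.0.1"}
)
```

Using `substitute` rather than `safe_substitute` is deliberate here: a stateless host with a half-filled configuration is worse than one that refuses to boot.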

Cattle versus Pets

Very similar to how you view applications deployed on Docker, to realize the maximum benefit of Synergy, your compute nodes should be viewed as cattle and not as pets. For anyone not familiar with this analogy, here’s how it goes. In traditional IT, you view your servers as pets – your cat or dog. You know your pet by name and when they get sick, you take them to the vet and pay for surgery to get them well again. But, if you were a cattle farmer with thousands of cows, you would treat each cow very differently from a pet. You don’t name them, though they might be assigned a number for tracking. And while you might give a cow medicine, you wouldn’t carry it to the vet and give it surgery to make it well again. You’d likely shoot a sick cow and move on. Harsh, I know, but that’s reality.

The same thing is true when you change from traditional virtual machines to containers. With our traditional virtual machines, each one may start as a template, but once it is deployed, it deviates from that template and there is no relationship to it. If you need patches, you install the same update on each VM. On the other hand, containers have a limited lifetime. They exist to do a single thing. If one gets sick, you kill the process and start a new one. If you need an upgrade, you don’t run those update steps against 1000 containers. You restart them from a new master image with the updates integrated once.
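In code, the cattle approach to an upgrade is a replacement rather than a patch. A minimal Python sketch of that idea follows, with made-up names and a plain list standing in for the fleet:

```python
from dataclasses import dataclass
from itertools import count

_ids = count(1)  # cattle get a tracking number, not a name


@dataclass(frozen=True)
class Instance:
    ident: int
    image: str  # version of the master image it was started from


def start(image: str) -> Instance:
    """Start a fresh instance from the given master image."""
    return Instance(next(_ids), image)


def roll_forward(fleet: list, new_image: str) -> list:
    """Replace every instance not on new_image; never patch one in place."""
    return [inst if inst.image == new_image else start(new_image)
            for inst in fleet]


fleet = [start("v1") for _ in range(3)]
fleet_v2 = roll_forward(fleet, "v2")  # three new instances, old ones discarded
```

The instances are frozen on purpose: there is no way to mutate one into the new version, so the only path to an upgrade is to build the update into the image once and start over from it.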

OS deployments on the Synergy Platform need to adopt the cattle mentality to realize the maximum benefit of the platform. The combination of the Composer and Image Streamer will allow IT departments to bring the same mentality and benefits of scale that exist with containers down to the physical hardware level: to container hosts, virtual hosts and individual OS deployments.

Composer and Image Streamer give IT the necessary workflow tools to achieve the vision of becoming a cattle farmer in IT operations.

Traditional IT, too

Even though the vision and maximum benefit of Synergy Platform require a change in how you view and operate your IT hardware, everyone in the industry realizes that traditional IT is not going away anytime soon. The trick is finding a flexible solution that can provide you infrastructure for both traditional IT and cloud-centric IT. According to HPE, Synergy Platform can do this for IT.

Even though a lot of what makes Synergy different and composable is the Synergy Composer and Synergy Image Streamer, these two components can continue to run traditional IT systems side-by-side with the new-style IT.

Companies that adopt Synergy Platform will need to be somewhere down the transformation pathway for the solution to make sense. If a company is still ingrained in traditional IT systems and operations, Synergy makes little sense for them. This solution is targeted at, and most beneficial to, companies that are attempting to run traditional IT and build new, cloud-scale applications at the same time. Cloud scale is about agility and the time required to react to business needs. It is also about handling the demands of a very uncertain or dynamic workload. It is about decoupling the applications into smaller and more manageable pieces. For IT operations, managing both of these is a challenge. Managing both of these types of infrastructure in a single solution is compelling.

Final thoughts

Does Synergy Platform solve all of the management headaches of deploying traditional and cloud-scale? No. There are management headaches above the physical layer, but having a consistent platform for both at the physical level could be beneficial. All IT shops are faced with resource constraints, and the more standardization, the better.

When moving to cloud-scale, one of the first conversations has to be ‘where do we have the most similarity and mass that it makes sense to invest in the workflow and automation that makes cloud-scale work?’ It makes sense to standardize and try to maximize the benefits. It is a large portion of the cloud-scale appeal and benefit.

Next week, I head to HPE Discover in Las Vegas. I fully expect to hear more about Synergy Platform and where it is in the release-to-market timeline. As an aside, I have not seen the interface and software actually running Synergy Platform. I saw some early concepts back in December at HPE Discover in London, but I really have not spent any time hands-on with the software. This is one reason I subconsciously avoided going into detail about it in the first post. I post what I know and what I’ve observed – not simply what I’m told by marketing folks [no offense to them]. I would really love to get that hands-on opportunity next week.

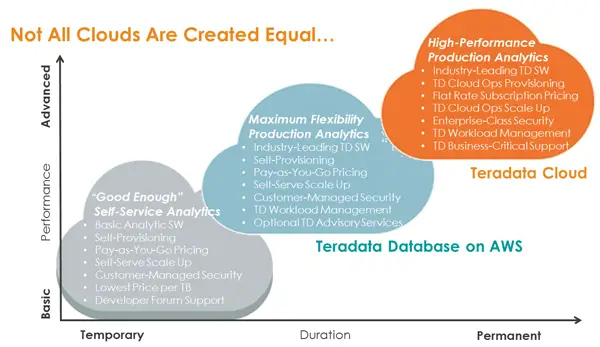
