DH2i Closes 2021 As Another Year of Record Sales Growth, Product Innovation, and Strategic Partnership Development

Strengthened Foothold Across Key Verticals and Transitioned to 100% Software as a Subscription Model

Today, HPE introduced Ezmeral, a new software portfolio and brand name, at its Discover Virtual Experience (DVE), with product offerings spanning container orchestration and management, AI/ML and data analytics, cost control, IT automation and AI-driven operations, and security. The new product line continues the company’s trend of creating software closely bundled to its hardware offerings.

Three years ago, HPE divested itself of its software division. Much of what went in the ‘spin-merge’, as it was called, was a portfolio of software components without a cohesive strategy. The software that remained in the company was closely tied to hardware and infrastructure – its core businesses. And like the software that powers its infrastructure, OneView and InfoSight, the new Ezmeral line is built around infrastructure automation, namely Kubernetes.

Infrastructure orchestration is the core competency of the Ezmeral suite of software – with the HPE Ezmeral Container Platform serving as the foundational building block for the rest of the portfolio. Introduced in November, HPE Container Platform is a pure Kubernetes distribution, certified by the Cloud Native Computing Foundation (CNCF) and supported by HPE. HPE touts it as an enterprise-ready distribution. HPE is not adding much value in the container layer itself, but it is working to help enterprises adopt Kubernetes and manage its lifecycle.

Kubernetes has emerged as the de-facto infrastructure orchestration software. Originally developed by Google, it allows container workloads to be defined and orchestrated in an automated way, including remediation and leveling. It provides an abstracted way to handle networking and storage – through the use of plug-ins developed by different providers.
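The automated remediation described above comes down to comparing a declared desired state with what is actually running and correcting the difference. The following is a minimal sketch of that idea in plain Python; the `Cluster` class and the `web-N` names are hypothetical stand-ins for illustration and are not part of any Kubernetes API.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a cluster; not a Kubernetes API object."""
    desired_replicas: int
    running: list[str] = field(default_factory=list)
    _next_id: int = 0

    def reconcile(self) -> None:
        """Start or stop containers until the running set matches the desired count."""
        while len(self.running) < self.desired_replicas:
            self.running.append(f"web-{self._next_id}")
            self._next_id += 1
        while len(self.running) > self.desired_replicas:
            self.running.pop()


cluster = Cluster(desired_replicas=3)
cluster.reconcile()               # initial rollout: three containers start
cluster.running.remove("web-1")   # simulate a crashed container
cluster.reconcile()               # remediation: a replacement is started
```

The operator only states how many replicas should exist; the loop decides what to start or stop, which is the sense in which the workload is defined rather than scripted.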

A single piece of software does not make a portfolio, however, so there is more. In addition to the Kubernetes underpinnings, the acquisition of BlueData by HPE provides the company with an app store and controller which assist with the lifecycle and upgrades of both the products running on the Container Platform and the platform itself. There is a lot of value to companies in these components.

In addition, the HPE Data Fabric is also a component of the portfolio allowing customers to pick and choose their stateful storage services and plugins within the Kubernetes platform.

The App Store focuses on analytics and big data, along with artificial intelligence and machine learning. The traditional big data software titles – Spark, Kafka, Hadoop, etc. – will continue to be big portions of the workloads intended to run on the HPE Ezmeral Container Platform.

The new announcement at the HPE Discover Virtual Experience centers around Machine Learning and ML Ops.

According to the press release, HPE Ezmeral ML Ops software leverages containerization to streamline the entire machine learning model lifecycle across on-premises, public cloud, hybrid cloud, and edge environments. The solution introduces a DevOps-like process to standardize machine learning workflows and accelerate AI deployments from months to days. Customers benefit by operationalizing AI / ML data science projects faster, eliminating data silos, seamlessly scaling from pilot to production, and avoiding the costs and risks of moving data. HPE Ezmeral ML Ops will also now be available through HPE GreenLake.

“The HPE Ezmeral software portfolio fuels data-driven digital transformation in the enterprise by modernizing applications, unlocking insights, and automating operations,” said Kumar Sreekanti, CTO and Head of Software for Hewlett Packard Enterprise. “Our software uniquely enables customers to eliminate lock-in and costly legacy licensing models, helping them to accelerate innovation and reduce costs, while ensuring enterprise-grade security. With over 8,300 software engineers in HPE continually innovating across our edge to cloud portfolio and signature customer wins in every vertical, HPE Ezmeral software and HPE GreenLake cloud services will disrupt the industry by delivering an open, flexible, cloud experience everywhere.”

HPE also reiterated its engagement with open-source projects and specifically to the Cloud Native Computing Foundation (CNCF), the sponsor of Kubernetes, with an upgraded membership from silver to gold status. The recent acquisition of Scytale, a founding member of the SPIFFE and SPIRE projects, underscores the commitment, representatives say.

“We are thrilled to increase our involvement in CNCF and double down on our contributions to this important open source community,” said Sreekanti. “With our CNCF-certified Kubernetes distribution and CNCF-certification for Kubernetes services, we are focused on helping enterprises deploy containerized environments at scale for both cloud native and non-cloud native applications. Our participation in the CNCF community is integral to building and providing leading-edge solutions like this for our clients.”

Disclaimer: HPE invited me to attend the HPE Discover Virtual Experience as a social influencer. Views and opinions are my own.

Datadobi Outperformed Alternative Solutions for Performance, Simplicity, and Reporting Capabilities

LEUVEN, Belgium–(BUSINESS WIRE)–Datadobi, the global leader in unstructured data migration software, has been selected by eDiscovery platform, process, and product specialist George Jon, to power its data migration services for clients across the corporate, legal, and managed services sectors. George Jon builds and launches best-in-class, secure eDiscovery environments, from procurement and buildout, and requires a data migration solution that provides clients with fast and reliable file transfer capabilities. They’ve managed projects for all of the ‘Big Four’ accounting firms and members of the Am Law 100, among many others.

INDIANAPOLIS (PRWEB) APRIL 14, 2020

Scale Computing, the market leader in edge computing, virtualization and hyperconverged solutions, today announced Goodwill Central Coast, a regional chapter of the wider Goodwill nonprofit retail organization, has implemented Scale Computing’s HC3 Edge platform for its in-store IT infrastructure across its 20 locations. Scale Computing HC3 has provided Goodwill Central Coast with a simple, affordable, self-healing and easy to use hyperconverged virtual solution capable of remote management.

Spanning three counties in southern California, Goodwill Central Coast serves Monterey, Santa Cruz and San Luis Obispo. Goodwill Central Coast’s mission is to provide employment for individuals who face barriers to employment, such as homelessness, military service, single parenting, incarceration, addiction and job displacement. To fund its mission, Goodwill Central Coast is also a retail organization, which provides job training and funds programs to assist individuals in reclaiming their financial and personal independence.

As a nonprofit with a small IT staff of three people and 20 locations to service, Goodwill Central Coast was in need of an affordable virtual hyperconverged infrastructure solution capable of managing the region’s file systems and databases out of a central database in Salinas, CA. Since implementation of Scale Computing, Goodwill Central Coast has upgraded its point of sale system, increased drive space, saved resources, and saved 15% on the initial IT budget.

“The cumbersome nature of managing our previous technology led me to look into other solutions, and I’m so glad I found Scale Computing,” said Kevin Waddy, IT Director, Goodwill Central Coast. “Migration from our previous system was so quick and easy! I migrated 24 file servers to the new Scale Computing solution during the day with little to no downtime, spending 20 minutes on some servers. The training and support during the migration was comprehensive and I’ve had zero issues with the system in the six months I’ve had it in place. Scale Computing HC3 is a single pane of glass that’s easy to manage, and it has saved us so much time on projects.”

Before turning to Scale Computing, Goodwill Central Coast was using an outdated legacy virtualization system that required physical reboots every other month. The nonprofit was looking to save management time by replacing their IT infrastructure with a virtual server system capable of simply and efficiently managing remote servers. Waddy was also interested in looking for a hyperconverged system for its ability to migrate from physical servers to virtual, ultimately turning to Scale Computing for its affordability and ability to maximize uptime.

“When an organization is providing a service to its community, the last thing they should worry about is stretching their resources,” said Jeff Ready, CEO and Co-Founder, Scale Computing. “For a nonprofit like Goodwill Central Coast, saving time and money is critical to the work they do. With Scale Computing HC3, Goodwill Central Coast now has a simple, reliable and affordable edge computing solution that is easy to manage with a fully integrated, highly available virtualization appliance architecture that can be managed locally and remotely.”

With Scale Computing HC3, virtualization, edge computing, servers, storage and backup/disaster recovery have been brought into a single, easy-to-use platform. All of the components are built in, including the hypervisor, without the need for any third-party components or licensing. HC3 includes rapid deployment, automated management capabilities, and a single-pane-of-management, helping to streamline and simplify daily tasks, saving time and money.

For more information on Scale Computing HC3, visit: https://www.scalecomputing.com/hc3-virtualization-platform

About Scale Computing

Scale Computing is a leader in edge computing, virtualization, and hyperconverged solutions. Scale Computing HC3 software eliminates the need for traditional virtualization software, disaster recovery software, servers, and shared storage, replacing these with a fully integrated, highly available system for running applications. Using patented HyperCore™ technology, the HC3 self-healing platform automatically identifies, mitigates, and corrects infrastructure problems in real-time, enabling applications to achieve maximum uptime. When ease-of-use, high availability, and TCO matter, Scale Computing HC3 is the ideal infrastructure platform. Read what our customers have to say on Gartner Peer Insights, Spiceworks, TechValidate, and TrustRadius.

Zerto Announces Partnership with Google Cloud and Deeper Integration with Azure, AWS and VMware

Boston, March 24, 2020 – Zerto, an industry leader for IT resilience, today announced the general availability of Zerto 8.0, expanding disaster recovery, data protection, and mobility for hybrid and multi-cloud environments with strategic partners. Zerto 8.0 introduces new integration with Google Cloud, deeper integrations with Azure, AWS public cloud platforms, and new innovations with VMware.

“With Zerto 8.0, our mission is to deliver IT resilience everywhere, by introducing a range of new and powerful features along with deeper integration with market-leading public cloud providers. We give customers the protection they want, wherever they need it,” explained Ziv Kedem, CEO of Zerto. “Zerto has been moving the dial on resilience for the past decade, and the ongoing development and wider integration of our platform shows the importance of technology leadership in an era where businesses can never stand still on data protection and recovery.”

Zerto 8.0 brings unrivalled data protection and mobility for hybrid, multi-cloud environments with a range of new, innovative features and capabilities:

New support for Google Cloud by bringing its leading Continuous Data Protection (CDP) technology to Google Cloud’s VMware-as-a-Service offering. Zerto 8.0 will support VMware on Google Cloud, enabling users to protect and migrate native VMware workloads in Google Cloud Platform (GCP) with Zerto’s leading RTOs, RPOs and workload mobility.

New cost savings, operational efficiencies and visibility with support for VMware vSphere Virtual Volumes (vVols).

Expanded VMware vCloud Director (vCD) connection with Zerto’s self-service recovery portal for Managed Service Providers (MSP).

Deeper integration with Microsoft Azure for increased simplicity and scalability of large deployments, which includes support for Microsoft Hyper-V Gen 2 VMs. Users leveraging UEFI for VMs running in their on-premises environments can now use Microsoft Azure as a target for disaster recovery, without a need to convert the VMs to legacy formats.

AWS Storage Gateway to be used as a target site for inexpensive and efficient cloud archive and data protection.

New data protection capabilities, extending the value of Virtual Protection Groups (VPGs) to data protection, delivering application consistency from seconds to years.

A single pane of glass for data protection reporting with status performance and capacity reporting of protected workloads.

Zerto’s failback functionality as part of the cost-effective incremental snapshots of Azure managed disks is now available across ALL regions.

New unified alert management with prioritized views of critical alerts and customization for users to receive the alerts exactly when needed for critical operations.

New impact analysis capability to mitigate risk to an organization’s protected and unprotected environment for on-premises or cloud.

New resource planning view of unprotected VMs for better insight into an organization’s unprotected VMs.

Additional features to automate and streamline the processes for failover and configuration in the public cloud with automated OS configuration, automatic failback configuration and more.

Additionally, Zerto 8.0 lays the foundation for the future of data protection, replacing traditional snapshot-based backup with low RPO journal-based “operational recovery,” enabling enterprises to perform day-to-day granular recovery quickly.

“We’re excited to partner with Zerto and to integrate its capabilities in disaster recovery and backup with Google Cloud,” said Manvinder Singh, Director, Partnerships at Google Cloud. “Zerto’s expertise in supporting and securing VMware workloads in the cloud will be a benefit to organizations that are increasingly running mission-critical workloads on Google Cloud.”

“Zerto support for VMware Virtual Volumes (vVols) helps our mutual customers protect their digital infrastructure,” said Lee Caswell, VP Marketing, HCI BU, VMware. “As an Advanced tier Technology Alliance Partner, Zerto is helping customers realize the unique VMware ability to offer consistent storage policy-based management across traditional storage and hyperconverged infrastructure.”

“At Coyote, the security and protection of our customers’ data is our top priority,” said Brian Work, Chief Technology Officer, Coyote. “We take every precaution to keep their information safe, and our work with Zerto is a perfect example of this. Our mutual focus on data protection allows us to offer our customers the innovative tech solutions they need with the security they require.”

Learn more about Zerto 8.0 here: https://www.zerto.com/zerto-8-0-general-availability/

About Zerto

Zerto helps customers accelerate IT transformation by reducing the risk and complexity of modernization and cloud adoption. By replacing multiple legacy solutions with a single IT Resilience Platform, Zerto is changing the way disaster recovery, data protection and cloud are managed. With enterprise scale, Zerto’s software platform delivers continuous availability for an always-on customer experience while simplifying workload mobility to protect, recover and move applications freely across hybrid and multi-clouds. Zerto is trusted globally by over 8,000 customers, works with more than 1,500 partners and is powering resiliency offerings for 450 managed services providers. Learn more at Zerto.com.

VMware, vSphere, VMware Cloud Director, and VMware Virtual Volumes are registered trademarks or trademarks of VMware, Inc. or its subsidiaries in the United States and other jurisdictions.

StorCentric’s Nexsan Announces Major Performance & Connectivity Improvements for BEAST Platform with New BEAST Elite Models

BEAST Elite & Elite F with QLC Flash Deliver Industry-Leading BEAST Reliability with Enhanced Performance

Nexsan, a StorCentric company and a global leader in unified storage solutions, has announced significant enhancements for its high-density BEAST storage platform, including major improvements in performance and connectivity. The new BEAST Elite increases throughput and IOPs by 25% while maintaining the architecture’s price/performance leadership. Connectivity has been tripled, providing 12 high-speed Fibre Channel (FC) or iSCSI host ports, reducing the need for network switches.

BEAST Elite: Industry-Leading Reliability, Enhanced Performance and Scale

The BEAST Elite has been architected for the most diverse and demanding storage environments, including media and entertainment, surveillance, government, health care, financial and backup. It offers ultra-reliable, high performance storage that seamlessly fits into existing storage environments. In addition to a 25% increase in IOPs and throughput, BEAST Elite provides an all-in enterprise storage software feature set with encryption, snapshots, robust connectivity with FC and iSCSI, and support for asynchronous replication.

Designed for easy setup and streamlined management processes for administrators, BEAST Elite is highly reliable and was built to withstand demanding storage environments, including ships, subway stations and storage closets that have less than ideal temperatures and vibration. The platform now supports 14TB and 16TB HDDs with density of up to 2.88PB in a 12U system.

BEAST Elite F: QLC Flash Delivers Unrivalled Price/Performance Metrics

The BEAST Elite F storage solution supports QLC NAND technology designed to accelerate access to extremely large datasets at unrivalled price/performance metrics for high-capacity, performance-sensitive workloads. It is ideally suited to environments where performance is critical, but the cost of SSDs has historically been too high.

The BEAST Elite and Elite F both support the new E-Flex Architecture, which allows users to select up to two of any of the three expansion systems available. This gives organizations the flexibility to size the systems exactly as required within their rack space requirements.

“The new BEAST Elite storage platform with QLC flash provides a significant performance improvement over HDDs with industry-leading price/performance. This storage platform is ideal for customers who want extremely dense, highly resilient block storage data lakes, analytics and ML/AI workloads,” said Surya Varanasi, CTO of StorCentric, parent company of Nexsan.

“With a reputation for reliability stretching back over two decades, our BEAST platform has become a trusted solution for a huge range of organizations and use cases,” commented Mihir Shah, CEO of StorCentric, parent company of Nexsan. “BEAST Elite and Elite F retain those levels of superior-build quality and robust design while adding significantly to performance, scale and connectivity capabilities. We will continue to enhance and introduce new products as a testament to our unrelenting commitment to customer centricity.”

For further information, please visit https://www.nexsan.com/beast-elite/.

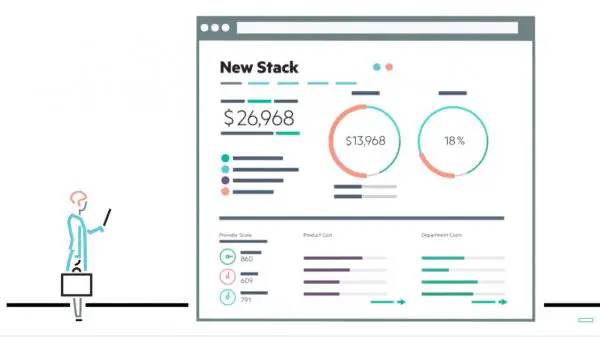

One of the most interesting announcements this week at HPE Discover was a project called New Stack. The project is a technology preview that HPE is ramping up with beta customers later this year, but the intentions of the project are to integrate the capabilities of all the “citizens” it manages to provide IT with cloud-like capabilities based on HPE platforms and marry that with the ability to orchestrate and service multiple-cloud offerings. That’s a mouthful, I know – so let’s dig in.

If you assess the current HPE landscape of its infrastructure offerings, it has been building a management layer utilizing its HPE OneView software layer and its native APIs to allow for a full software-definition of its hardware. Based on OneView, HPE has created hyperconverged offerings and its HPE Synergy offerings, building additional capabilities on top of OneView. With the converged offerings, the deployment times are reduced and the long term management has been improved with proprietary software.

With the HyperConverged offerings, HPE calls it VM Vending – meaning it’s a quick way to create VM workloads for traditional application stacks easily. It does not natively assist with cloud-native types of workloads. Certainly, you can deploy VM and VMware solutions to assist with OpenStack and Docker. Synergy improves upon the hyperconverged story by including the Image Streamer capability to allow native stateless system deployment and flexibility, in addition to the traditional workloads. Synergy is billed as a bimodal infrastructure solution that enables customers to do cloud-native and traditional workloads, side-by-side.

And then there is a gap. The gap is the required orchestration layers to enable cloud-like operations in on-premises IT solutions. HPE has a package called CloudSystem, which also existed as a productized converged solution, that includes this orchestration layer to enable cloud-like operations. The primary problem with CloudSystem is cost. It’s expensive – personal experience. It’s also cumbersome. CloudSystem pieced together multiple different software packages that already existed in HPE – including some old dial-up billing software that morphed into cloud billing. CloudSystem was clearly targeted towards service providers.

The reality today is that regular IT needs to transform and be able to operate with cloud methodologies. It needs to be able to offer a service catalog to its users – whether developers, practitioners, or business folks ordering services for their organization. Project New Stack is planned to allow this cloud operation and orchestration across on-premises and multiple clouds.

New Stack is entirely new development for the company, based around HPE’s Grommet technology and integrating technology from CloudCruiser, which it acquired in January of 2017. CloudCruiser provides cost analysis software for cloud, and that accounts for a big part of what has been shown for Project New Stack during Discover. CloudCruiser focused on public cloud, so HPE has had to do some development around costing for on-premises infrastructure. It attempts to give cost and utilization percentages across all your deployment endpoints.

Project New Stack taps into the orchestration capabilities of Synergy and its hyperconverged solutions to assist with pivoting resources into new use cases on-premises. It uses those resources to backend the services offered in a comprehensive service catalog. Those services are offered across a range of infrastructure, on-site and remote. Administrators should be able to pick and choose service offerings and map them from a cloud vendor to their users for consumption, but this is not the only method of consumption.

Talking with HPE folks on the show floor, they don’t see Project New Stack as the interface that everyone in an organization will always use. Developers can still interact with AWS and Azure from directly within their development tools, but Project New Stack should take any of those services spun up or consumed, report them, aggregate them and allow the business to manage them. It doesn’t hamper other avenues of consumption, which explains the focus on cost analysis and management for the new tool.

Orchestration is only part of the story. New Stack should give line of business owners the ability to get insights from their consumption and make better decisions about where to deploy and run their apps. Marrying it with a service catalog gives a new dimension of decision making ability, based around cost. As with all costing engines, the details will make or break the entire operation. Every customer gets varying discounts and pricing from Azure and AWS. How those price breaks translate into the cost models will really dictate whether or not the tool is useful.
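To make the point about discounts concrete, here is a minimal sketch of how customer-specific price breaks could change a placement decision. Everything in it is an assumption for illustration: the `Usage` type, the `effective_cost` function, the rates and the discount percentages are invented, and none of it reflects how Project New Stack or CloudCruiser actually models cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    provider: str      # e.g. "aws", "azure", "on_prem"
    hours: float       # instance-hours consumed
    list_rate: float   # published price per instance-hour


def effective_cost(usage: Usage, discounts: dict[str, float]) -> float:
    """Apply the customer's negotiated discount (0.0 to 1.0) to list price."""
    discount = discounts.get(usage.provider, 0.0)
    return usage.hours * usage.list_rate * (1.0 - discount)


# Illustrative numbers only: identical list prices, different negotiated discounts.
discounts = {"aws": 0.20, "azure": 0.10}
workload = [
    Usage("aws", hours=100, list_rate=0.50),
    Usage("azure", hours=100, list_rate=0.50),
    Usage("on_prem", hours=100, list_rate=0.50),
]
costs = {u.provider: round(effective_cost(u, discounts), 2) for u in workload}
cheapest = min(costs, key=costs.get)
```

With identical list prices, the ranking is decided entirely by the discount table, which is why a costing engine that ignores a customer's real price breaks would point to the wrong endpoint.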

The ability to present a full service catalog shouldn’t be diminished. It is a huge feature – enabling instantiation of services in the same way, no matter whether the backend is cloud or on-premises. On-premises is new territory for most enterprises with cloud services. Enterprise is slow to change, but if the cloud cliff is real, more companies will face making the decision and bringing those cloud-native services back on-premises for some use cases.

What I do not hear HPE talking about is migration of services from one cloud to another. While it may be included, this is typically the Achilles’ heel for most cloud discussions. This is easier to accomplish with micro-services and new stack development than with traditional workloads. Bandwidth constraints and incompatibilities between clouds still exist for traditional workloads. And I didn’t get far enough into the system on the show floor to see how an enterprise could leverage the system to deploy their own cloud-native code, or simply draw insights from the cloud-native code deployed.

Project New Stack was the most interesting thing that I saw at HPE Discover this week. While HPE has been developing software defined capabilities for its infrastructure, what has been lacking is an interface to integrate it all together. OneView is a great infrastructure-focused product made for defining and controlling the full on-premises infrastructure stack. It’s incredibly important to allowing cloud-like operations on-premises. In the early forms, it won’t tie everything up in a nice little package, but it is early and that’s the intention on HPE’s part: to get the product out and into the hands of customers and then refine.

Last week, I posted about HPE’s Synergy Platform – their vision of Composable Infrastructure. My post had a hard slant towards the hardware components of the solution because that is the world that I generally live in. I received some comments about the post and I always appreciate that kind of feedback. I don’t always get it correct when I write and this is less about being right or wrong than not painting the complete picture. And in full disclosure, the two people I was talking with are not HPE employees – both were industry folks at other companies.

So, what did I miss in post one? Primarily, I shortchanged the software component of the solution and, as one friend pointed out, the hardware alone does not make this a composable or even software-defined solution. So, let’s dig a little deeper into what HPE has laid out during my briefings and see if I can’t paint a fuller picture.

The software that drives Synergy Platform is two pronged. I covered the Synergy Composer and the Synergy Image Streamer to some degree in the first post. The software is installed as a physical hardware module. Each module has a specific job and a specific application running on it, but they also work together.

As a recap, Composer is the management interface. Composer is able to manage a large pool of Synergy Frames [up to 21] and their connected resources. It is based on HPE OneView – also a management software – but it has been customized and extended for the Synergy Platform. Utilizing OneView as the base for the system gives Synergy an API to build upon.

OneView is a strong management system built around server profiles. In OneView, you create a definition – a server profile – that includes all of the settings that get instantiated on the hardware. This metadata can include server settings down to the BIOS, the network definitions, the storage definition, and firmware/driver levels. Profiles can be cloned and, as of OneView 2.0, the idea of profile templates was added. Templates allow you to set definitions and then clone out individual profiles with different IPs and names, but they retain the tie to the template. With templates, you may change a setting, like the networking configuration or firmware level, and then push out that change across all the profiles created from it. During the next reboot, these settings are applied. This is great for firmware and driver patching or for adding a new network uplink to a cluster of ESXi hosts, for example. All of these features are great for IT operations working with traditional IT infrastructure.
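The relationship between a template and the profiles cloned from it can be sketched as a small data model. This is only an illustration of the idea described above: the class names, fields, firmware strings and addresses are hypothetical and do not correspond to the OneView API or its object schema.

```python
from dataclasses import dataclass, field


@dataclass
class ProfileTemplate:
    firmware: str
    networks: list[str]
    profiles: list["ServerProfile"] = field(default_factory=list)

    def create_profile(self, name: str, ip: str) -> "ServerProfile":
        """Clone out a profile that keeps its tie to this template."""
        profile = ServerProfile(name=name, ip=ip, template=self)
        self.profiles.append(profile)
        return profile

    def update(self, **changes) -> None:
        """Change shared settings; derived profiles pick them up at next reboot."""
        for key, value in changes.items():
            setattr(self, key, value)
        for profile in self.profiles:
            profile.pending_reboot = True


@dataclass
class ServerProfile:
    name: str                      # differs per node
    ip: str                        # differs per node
    template: ProfileTemplate = field(repr=False, compare=False)
    pending_reboot: bool = False

    @property
    def firmware(self) -> str:
        # Shared settings are read through the template, not copied.
        return self.template.firmware


esxi = ProfileTemplate(firmware="2.0", networks=["mgmt"])
hosts = [esxi.create_profile(f"esxi-{i}", f"10.0.0.{10 + i}") for i in range(3)]
esxi.update(firmware="2.1")        # one change, pushed to every derived profile
```

Because each profile reads shared settings through its template rather than holding a copy, a single update reaches every host, while the name and IP stay unique per node.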

Synergy Platform, however, has goals beyond easing management of traditional IT. It is targeted at new-style IT or cloud-centric IT. So, building upon the base of server profiles, Synergy Composer takes the server profiles and adds some additional personality – and VMware administrators may see a lot of similarities between what Composer does and how Virtual Appliances work in vSphere. You can set IP and naming conventions for deploying systems en masse with Synergy – so Composer has to adopt these functions into its toolset – but it also adopts the ability to pass that configuration to the booting OS. So, what I’m rambling on about and trying to explain is that Synergy Composer encompasses the OS personality, in addition to the hardware personality. When I think of the OS personality – it is the ‘what makes each node different’ versus the hardware personality as the ‘what makes them the same.’

One of HPE’s major goals with Synergy Platform was to expose everything available in the GUI management interface as an API, also. And beyond that, they wanted it to be as simple as possible to create and deploy an instance of compute. HPE’s focus here – I’ll admit – almost seems obsessive to allow compute to be created or torn down with a single line of code. Another major goal was to allow developers to call and create compute instances in their own language – in code – where they feel most comfortable. It is an appeal to a very different audience than the IT operations staff that HPE is normally catering to with their hardware solutions.

All of that said, the API makes it possible for forward-thinking administrators to automate and extend their processes into other systems. The API makes it possible to tie and automate server creation into the ticketing system, regardless of which vendor created the ticketing. It makes it possible to include these processes into scripted processes in enterprise batch solutions or anywhere else. It opens up an endless set of possibilities.

The OS personality is handled in conjunction with the Image Streamer. The streamer holds the golden master images of the OS that will run on the server profiles when they are deployed to compute nodes and powered on. The Image Streamer takes an OS image, presents it over iSCSI to the host, and then boots the system. While Image Streamer could do this for traditional OSes, it is not targeted there. The Image Streamer is intended to be used with stateless OS deployments – like ESXi hosts or container hosts. This means that the boot process needs to include a scripted process to fully configure the host. The settings for each instance are provided from Composer and the individual profile. vSphere offers very similar stateless ESXi with vSphere Auto Deploy. The difference here is that you’re using iSCSI to boot instead of PXE.

Very similar to how you view applications deployed on Docker, to realize the maximum benefit of Synergy, your compute nodes should be viewed as cattle and not as pets. For anyone not familiar with this analogy, here’s how it goes. In traditional IT, you view your servers as pets – your cat or dog. You know your pet by name and when they get sick, you take them to the vet and you’d have surgery done to get them well again. But, if you were a cattle farmer with thousands of cows, you treat each cow differently. You don’t name them, though they might be assigned a number for tracking. And while you might give them medicine, you wouldn’t carry a cow to the vet and give it surgery to make it well again. You’d likely shoot a sick cow and move on. Harsh, I know, but that’s reality.

The same thing is true when you change from traditional virtual machines to containers. With our traditional virtual machines, each one may start as a template, but once it is deployed, it deviates from that template and there is no relationship to it. If you need patches, you install the same update on each VM. On the other hand, containers have a limited lifetime. They exist to do a single thing. If one gets sick, you kill the process and start a new one. If you need an upgrade, you don’t do those update steps against 1000 containers. You restart them from a new master image with the updates integrated once.

OS deployments on the Synergy Platform need to adopt the cattle mentality to realize the maximum benefit of the platform. The combination of the Composer and Image Streamer will allow IT departments to apply the same mentality and benefits of scale that exist with containers at the physical hardware level, with container hosts, virtual hosts and individual OS deployments.

Composer and Image Streamer give IT the necessary workflow tools to achieve the vision of becoming a cattle farmer in IT operations.

Even though the vision and maximum benefit of Synergy Platform require a change in how you view and operate your IT hardware, everyone in the industry realizes that traditional IT is not going away anytime soon. The trick is finding a flexible solution that can provide you infrastructure for both traditional IT and cloud-centric IT. According to HPE, Synergy Platform can do this for IT.

Even though a lot of what makes Synergy different and composable is the Synergy Composer and Synergy Image Streamer, these two components can continue to run traditional IT systems side-by-side with the new-style IT.

Companies that adopt Synergy Platform will need to be somewhere down the transformation pathway for the solution to make sense. If a company is still ingrained in traditional IT systems and operation, Synergy makes no sense for it. This solution is targeted at, and most beneficial to, companies that are attempting to run traditional IT and build new, cloud-scale applications at the same time. Cloud scale is about agility and the time required to react to business needs. It is also about handling the demands of a very uncertain or dynamic workload. It is about decoupling the applications into smaller and more manageable pieces. For IT operations, managing both of these is a challenge. Managing both of these types of infrastructure in a single solution is compelling.

Does Synergy Platform solve all of the management headaches of deploying traditional and cloud-scale? No. There are management headaches above the physical layer, but having a consistent platform for both at the physical level could be beneficial. All IT shops are faced with resource constraints, and the more standardization, the better.

When moving to cloud-scale, one of the first conversations has to be ‘where do we have the most similarity and mass that it makes sense to invest in the workflow and automation that makes cloud-scale work?’ It makes sense to standardize and try to maximize the benefits. It is a large portion of the cloud-scale appeal and benefit.

Next week, I head to HPE Discover in Las Vegas. I fully expect to hear more about Synergy Platform and where it is in the release-to-market timeline. As an aside, I have not seen the interface and software actually running Synergy Platform. I saw some early concepts back in December at HPE Discover in London, but I really have not spent any time hands-on with the software. This is one reason I subconsciously avoided talking detail about it in the first post. I post what I know and what I’ve observed – not simply what I’m told by marketing folks [no offense to them]. I would really love that opportunity next week.

Last month, just before the flooding, I was onsite at HPE in Houston, TX, for a Tech Day focused on the HPE Hyper Converged and Composable Infrastructure portfolio. I have since had a chance to reflect on everything I had learned about HPE’s Composable strategy – and the larger industry direction of containers and orchestration and where it is all taking us. There are potential benefits and lots of hurdles in both of these major initiatives in the industry. The reason behind these concepts and solutions is delivering faster results and flexibility for IT organizations. And while at the Tech Day, I was able to dig in again, refresh what I’d heard and learn more detail around HPE’s Composable strategy.

I’ve had the opportunity to drill into HPE’s and the broader industry definition of Composable Infrastructure a couple of times in the past year. HPE released Synergy Platform last December at HPE Discover in London. This was the first purpose-built hardware platform for composability, but the people I talked with at HPE warned me that it would not be the only platform.

Composability is the concept of being able to take standard compute, storage and networking hardware and assemble them in a particular way using orchestration to meet a specific use case. When your use case is completed, you can decompose and recompose the same components in a different way to meet new needs. Beyond that point, it also includes the concept of being able to do this with hardware on demand as your usage and requirements change throughout the day. For peak workloads, you may need to compose infrastructure to run a billing cycle and then decompose and recompose the same hardware as web front-end servers for e-commerce during peak usage – like Black Friday. The key here is orchestration and with the orchestration, speed.
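To make the compose, decompose and recompose cycle concrete, here is a minimal Python sketch. It is only an illustration of the idea: the `ResourcePool` class, its methods and the capacity numbers are all made up for this example and have nothing to do with HPE’s actual software or API.

```python
class ResourcePool:
    """Hypothetical pool of physical resources (illustration only)."""

    def __init__(self, compute_nodes, storage_tb):
        self.free_compute = compute_nodes
        self.free_storage_tb = storage_tb
        self.systems = {}  # name -> (compute_nodes, storage_tb)

    def compose(self, name, compute_nodes, storage_tb):
        # Carve a system out of whatever is currently free in the pool.
        if compute_nodes > self.free_compute or storage_tb > self.free_storage_tb:
            raise ValueError("not enough free resources in the pool")
        self.free_compute -= compute_nodes
        self.free_storage_tb -= storage_tb
        self.systems[name] = (compute_nodes, storage_tb)

    def decompose(self, name):
        # Hand the hardware back to the pool so it can be reused.
        compute_nodes, storage_tb = self.systems.pop(name)
        self.free_compute += compute_nodes
        self.free_storage_tb += storage_tb


# Made-up capacity: 12 compute nodes and 40 TB of disk.
pool = ResourcePool(compute_nodes=12, storage_tb=40)

# Run the billing cycle, then give everything back.
pool.compose("billing", compute_nodes=10, storage_tb=30)
pool.decompose("billing")

# Recompose the same hardware as web front-ends for the peak.
pool.compose("web-frontend", compute_nodes=12, storage_tb=5)
```

The point of the sketch is that the same physical capacity serves two very different use cases in sequence, and the only thing that changes is what the orchestration layer asks for.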

In many ways, Composable Infrastructure provides a pool of resources similar to how virtualization provides access to a pool of resources that can be consumed in multiple ways. The critical difference is that these are physical resources being sliced and diced without a hypervisor layer providing the pooling.

HPE has a strict definition they are following for Composable Infrastructure. If any of these characteristics are missing, they will not classify a solution as Composable, no matter how closely it may resemble one.

The API is straightforward, along with the ability to use templates to define and automate system builds. Fluid resource pools also make sense if you’ve spent any time with a virtualization technology. The point that didn’t immediately make sense is the ‘frictionless operations.’

In terms of frictionless operations, what HPE is talking about today is automation and workflow tools within the management interface to streamline the upgrade processes required on the system. Those may include upgrades to the OS images as well as firmware and driver bundles, along with the management interface rollups.

Now, take this concept and definition a step forward and layer on monitoring and mitigation software. What becomes possible is a self-healing infrastructure, reacting to events and remediating them on its own. Self-healing is a compelling concept, but so far attempts have been far from exceptional and it’s really tough to achieve. There is a huge amount of work required in standardization and orchestration that just doesn’t fit with traditional IT software and OS concepts. Where I say traditional, HPE is using the word legacy or old-style. They’re talking client-server applications – the commercial, off-the-shelf software so many enterprises are running today.

But looking into the future, HPE can see that self-healing can be realized with cloud-native, scale-out software solutions. And it is betting that if it can build a physical infrastructure capable of programmatically assembling and disassembling systems on demand, it can power this self-healing future.

HPE Synergy Platform is the hardware HPE believes will power the future of on-premises, cloud-native IT along with the flexibility to host legacy applications in the same pool of resources.

While examining Synergy Platform at Discover, I first noticed that the hardware platform looks very similar to an HPE BladeSystem chassis. It is different in the number of compute nodes, the dimensions of those compute nodes and the networking. But physically, it looks very much like a BladeSystem. What quickly becomes apparent is that you have new capabilities, like adding a disk shelf that spans two compute bays and provides a pool of disk that can be shared throughout a Synergy Frame (not called a chassis anymore).

Blades become compute nodes, but the concept is much the same. The form factor does not change much – you have half-height and full-height options for compute nodes in Synergy. The dimensions are larger to accommodate a fuller range of hardware inside of the compute node. Starting with a half-height blade, HPE is offering the Synergy 480 Compute Node. It is a Gen9 server equipped similarly to the BL460c and meant for similar use cases.

Another change in Synergy: the slots for compute nodes no longer restrict you to a single unit – there is no metal separation between the compute bays. You can do double-width units in Synergy, in addition to full-height. HPE is making use of that with a double-width and full-height Synergy 680 Compute Node. The 680 is an impressive blade with up to 6TB of RAM across 96 DIMM slots and quad sockets. It is a beast of a blade. Other full-height options are the Synergy 620 and 660 Compute Nodes.

Orchestration is really what sets Synergy Platform apart from the c7000 blade frames from HP. The orchestration is achieved by management modules on the Synergy Platform.

First, the Synergy Composer is the brains and management of the operation and it is built on HPE OneView. From an architecture standpoint, a single Composer module can manage up to 21 interlinked frames. Each frame has two 10Gig management connections – on a Frame Link Module – that can link frames together. Each frame connects upstream to a frame and downstream to another and this forms a management ring. Using this management network, the Synergy Composer is able to manage all 21 frames of infrastructure. Although each frame has a slot for a Composer management module, only one is required and a second can be added in a different frame to establish high availability.
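The management ring is easy to picture as a small data structure. The Python sketch below only models the topology described here (each frame linked to one upstream and one downstream neighbor, with a ceiling of 21 frames); the function and frame names are invented for illustration and are not taken from OneView or any HPE tooling.

```python
MAX_FRAMES = 21  # limit quoted above for a single Composer


def management_ring(frame_names):
    """Return each frame's upstream and downstream neighbor in the ring."""
    count = len(frame_names)
    if not 1 <= count <= MAX_FRAMES:
        raise ValueError(f"a ring needs between 1 and {MAX_FRAMES} frames")
    ring = {}
    for index, name in enumerate(frame_names):
        ring[name] = {
            # Modulo arithmetic wraps the last frame back to the first,
            # which is what closes the chain into a ring.
            "upstream": frame_names[(index - 1) % count],
            "downstream": frame_names[(index + 1) % count],
        }
    return ring


ring = management_ring(["frame-1", "frame-2", "frame-3"])
for frame, links in ring.items():
    print(frame, "<-", links["upstream"], "->", links["downstream"])
```

Because every frame has two neighbors, a chain closed into a ring keeps every frame reachable even if one link is lost, which is the usual reason for choosing a ring over a simple daisy chain.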

Synergy Image Streamer is all about the boot images. You take a golden image, you create a clone-like copy (similar to VMware’s linked clones) and you boot it. When the image is rebooted, nothing is retained. Everything about the image must be sequenced and configured during boot. Stateless operation is very much a cloud-native concept – requiring additional services to be deployed in the environment – like centralized logging – to enable the long-term storage of data from the workloads. Composer also takes into account updates and patching by allowing the administrator to commit these to the golden master and then kick off a set of rolling reboots to bring all the running images up to date. Just like Composer, the Image Streamer also only needs a single module, or two for redundancy in two different frames.
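Here is a rough Python sketch of that patch-once, reboot-in-batches workflow. I have not seen how Composer actually implements it, so treat every name here (`GoldenImage`, `Node`, `rolling_reboot`, the batch size) as a hypothetical stand-in rather than anything from the product.

```python
from dataclasses import dataclass


@dataclass
class GoldenImage:
    """Hypothetical stand-in for a golden master image."""
    version: int = 1

    def commit_update(self):
        # The patch is applied once, to the master, not to each node.
        self.version += 1


@dataclass
class Node:
    """Hypothetical stateless compute node."""
    name: str
    running_version: int = 0

    def reboot(self, image):
        # Nothing is retained across a reboot, so the node simply
        # comes back up on whatever the master currently holds.
        self.running_version = image.version


def rolling_reboot(nodes, image, batch_size=2):
    """Reboot a few nodes at a time so the rest keep serving."""
    batches = []
    for start in range(0, len(nodes), batch_size):
        batch = nodes[start:start + batch_size]
        for node in batch:
            node.reboot(image)
        batches.append([node.name for node in batch])
    return batches


image = GoldenImage()
nodes = [Node(f"node-{i}") for i in range(5)]
for node in nodes:
    node.reboot(image)   # initial boot from the master

image.commit_update()    # patch the master once
batches = rolling_reboot(nodes, image, batch_size=2)
```

Five nodes get updated with a single patch operation, and only two of them are ever down at the same moment.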

Management in Synergy is made to scale and to eliminate the need for onboard management modules in every frame plus separate software outside of the hardware to manage many units.

At launch, Synergy’s Image Streamer supports Linux, ESXi and Container OSes with the full benefit of image, config and run. The images are stateless, meaning nothing is retained when the system reboots. Windows was not supported with the Image Streamer as of December. The Synergy and composable concept is clearly targeted at bare-metal deployments of cloud-native systems.

Now, even though you can’t use the Image Streamer to run Windows or stateful Linux on Synergy, it doesn’t mean they won’t run. It is still possible to create a boot disk and provision it to a compute node (boot from SAN, boot from USB or SD, etc.). For compatibility, you can use a Synergy compute node like a traditional rack-mount or blade server. Of course, when you do, you lose all of the potential benefits of the platform from its imaging and automation engines.

The Synergy Fabric is the other huge differentiating factor with Synergy Platform. A single frame is probably not what you’ll see deployed anywhere. Synergy is built to scale up within the rack – with up to 5 frames interconnected to the same converged fabric that provides Ethernet, Fibre Channel, FCoE and iSCSI across all of the frames. Synergy uses a parent/child module to extend the managed fabric across multiple frames. A parent module is inserted in one frame and child modules in up to 4 additional frames. Similar to Composer and Image Streamer modules, the interconnect modules on Synergy use a pair of parent modules in different frames to achieve high availability. Management of the interconnect modules and fabric is done through a single interface and utilizes MLAG connections between the modules to communicate management changes.

StoreVirtual is a primary use case with Synergy Platform and HPE expects many users will choose its VSA to do software-defined storage in Synergy. But it is hard to get enough disks to matter in the blade form factor. To allow for this, HPE is also showing off a new disk shelf that can fit into two compute bays on a frame. The HPE Synergy D3940 disk shelf can hold up to 40 small form factor disks that can be carved out and consumed by any of the compute nodes in the frame. One important limitation here, however, is that the local disks are only accessible inside of a single frame. The SAS module used for these disks is in a separate bay and is separate from the fabric. So all the disks need to be presented to compute within the frame.

However, StoreVirtual to the rescue. Either bare metal StoreVirtual or more likely as a VSA, the StoreVirtual can take those local disks and present them in a way that they can be consumed or clustered with compute in other frames. All 40 disks may be presented to a single StoreVirtual instance and then clustered with 2 additional StoreVirtual instances in other frames – and then the fabric can consume the storage from StoreVirtual. The great thing is you have choice as a consumer to instantiate and then recompose these resources as needed on Synergy.

It is critically important to note that you can run traditional workloads side-by-side with cloud-native workloads. While cloud-native workloads benefit more from the Image Streamer and account for stateless OS operation, traditional IT workloads can be run on the same hardware. From the Composer, you can assemble a traditional server, with boot from SAN or boot from local disk in the compute node, and run a client-server application. This flexibility is important as organizations attempt to build the new-style apps but need to support existing applications. It means that a single hardware platform will be able to deliver both. Unfortunately, organizations that choose to run legacy systems on Synergy won’t be able to fully realize all the benefits Synergy Platform has to offer since most do not apply to these legacy workloads, but having the flexibility is key.

From my viewpoint, no company is going to buy into Synergy simply to run legacy applications on it. The real benefits are for companies that are planning or in the middle of an application transition. For companies that have not begun the transition process, Synergy and Composable Infrastructure are going to be a tough sell – because they’re not thinking in ways where the benefits Synergy delivers matter.

One of the biggest hurdles for virtualization adoption in the small business area has been the need for expensive, shared storage. The Virtual Storage Appliance is looking to change that. By utilizing the relatively cheap, attached storage in host systems and pooling those into a storage array, vendors are looking to extend the goodness of virtualization to the final frontier – the small business. But it’s not just applicable there – I think that VSA may also have a strong play for virtual desktops and eventually for primary storage in many environments.

A reporter asked me at the end of 2011 for my predictions for 2012. Mine was around the VMware VSA and the promise of cheap, shared storage for the masses. VMworld’s Solutions Exchange was full of vendors with VSA or purpose-built storage solutions looking to address the storage play in virtualization. Many are looking at SSD, either hybrid or pure SSD, to achieve a large amount of IOPS for virtualization. In my mind, it’s possible that a VSA could replace all of these purpose-built solutions by utilizing the local RAID adapters and local storage already within our ESX hosts.

HP has rebranded the LeftHand VSA under the new StoreVirtual VSA moniker. The StoreVirtual also introduces a reduced-cost licensing model which bundles 3 years of service and support along with the virtual appliance. StoreVirtual VSA allows for active/active clusters using the tried and true LeftHand technology. StoreVirtual uses iSCSI as its primary transport of storage to the ESX hosts. The local storage is pulled into the StoreVirtual VSA, then it is carved into LUNs and presented to particular iSCSI initiators. All of the advanced features of LeftHand are available to customers of the VSA. The StoreVirtual VSA is the only VSA that is certified as a VMware Metro Storage Cluster, capable of spanning storage over datacenters.

I remember when LeftHand was first demoed for me, before the HP acquisition. One of their value propositions at the time was repurposing existing hardware into storage nodes within the LeftHand SAN. The same principle applies in the case of the new StoreVirtual VSA. Adding disks to existing, very capable ESX hosts may make a lot of sense to companies. It may also reduce the data center footprint and cooling needs by collapsing storage into running host servers.

VMware also offers a licensable VSA. It uses NFS to mount a datastore and make it accessible to all nodes in a cluster. It also allows for replication of data from the datastore to another datastore, but it is a single node active model. In the event the primary host running the NFS share goes down, the secondary node has to activate the NFS and make it available. It may not be quite up to par with offering seamless or instant failover, but it is probably good enough for many applications.
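The single-node-active behavior is worth spelling out, since it is where the failover delay comes from. The Python sketch below is a generic active/passive model built only from the description above; it is not VMware’s implementation, and the `StorageNode` class, `fail_over` function and host names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StorageNode:
    """Hypothetical host that can export the NFS datastore."""
    name: str
    healthy: bool = True
    exporting: bool = False  # True while this node serves the datastore


def fail_over(primary, secondary):
    """Return the node serving the datastore, activating the secondary if needed."""
    if primary.healthy:
        return primary
    if not secondary.healthy:
        raise RuntimeError("no healthy node left to export the datastore")
    primary.exporting = False
    # The secondary holds a replica but is not serving it yet. This
    # activation step is why failover is not instant in this model.
    secondary.exporting = True
    return secondary


primary = StorageNode("esx-a", exporting=True)
secondary = StorageNode("esx-b")

primary.healthy = False               # simulate losing the primary host
serving = fail_over(primary, secondary)
print(serving.name)
```

Contrast this with an active/active cluster, where both nodes already serve the storage and there is no activation step to wait on.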

Nexenta also had a VSA for View demoed in the Solutions Exchange at VMworld. It was a very low cost option, licensed per user, to convert local storage into VDI storage.

VSAs do not come without some challenges. Customers will need to architect high availability into any design. They will need to ensure that advanced features like snapshots and backups are available, although there are new considerations which come into play. HP and VMware partner Veeam has introduced a version of its backup software that fully understands the StoreVirtual VSA and the challenges of backup that may be introduced with a VSA. One of these challenges is how exactly to restore a data snapshot onto a storage array running on the same virtual infrastructure. The VSA creates a circular reference, to some degree.

I stand by my prediction, and I have to admit, it’s fun to see my thoughts coming true on this front. Small business is ripe to benefit from virtualization and may be the last, large, untapped area of growth. And VSAs may make it possible.