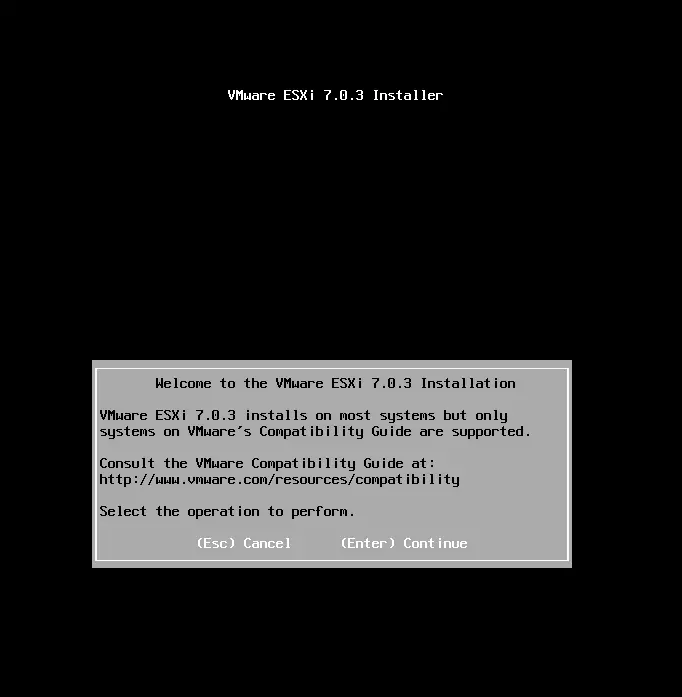

Install vSphere 7 U3

Today I will be installing VMware vSphere 7 Update 3 in VMware Workstation to walk you through the installation.

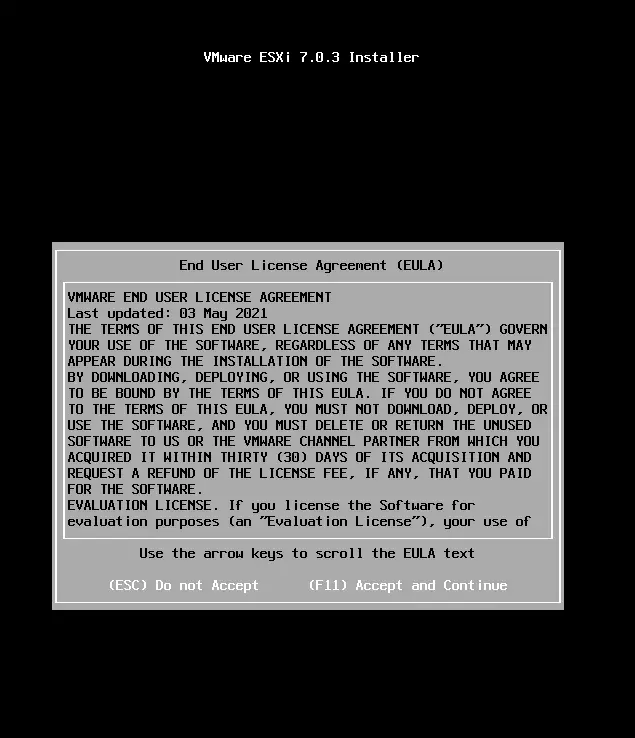

Let's go ahead and press F11 to accept the license agreement.

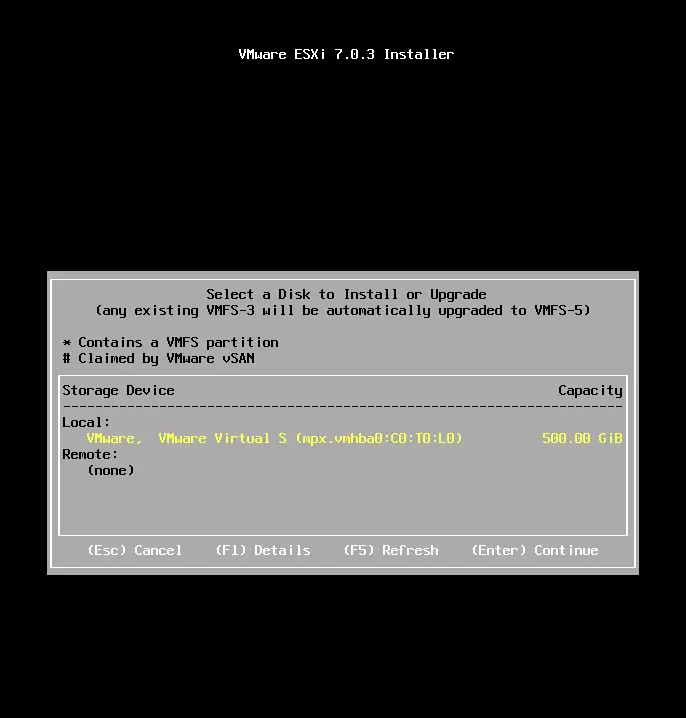

Select your disk and press Enter.

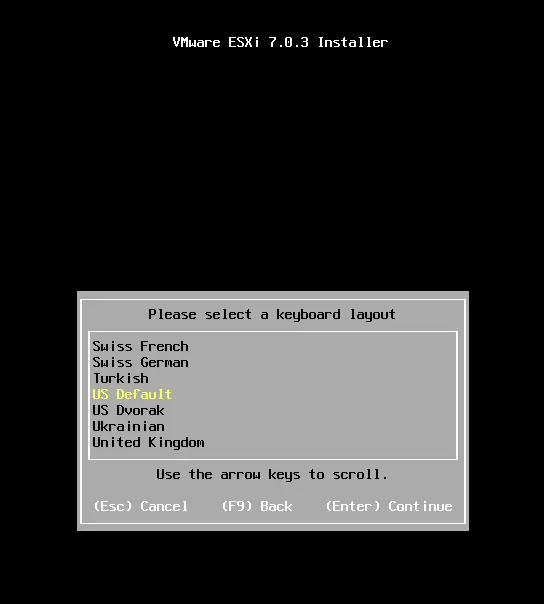

Select your keyboard layout and press Enter.

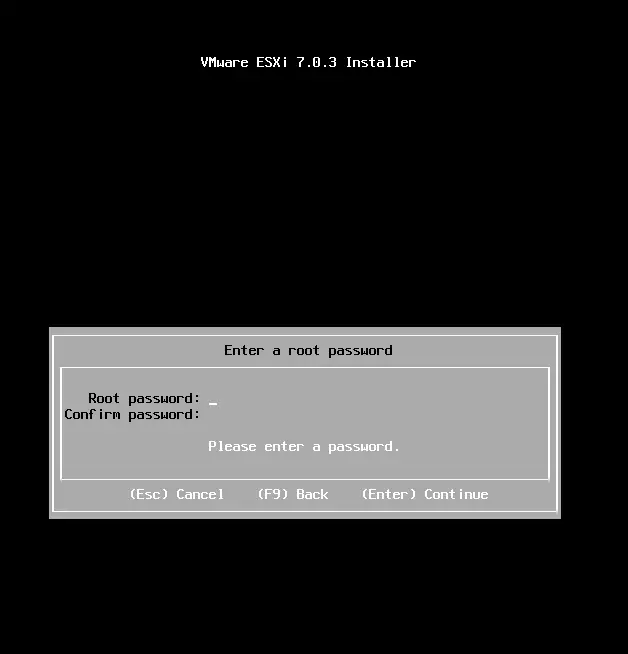

Enter your root password, confirm it, and press Enter.

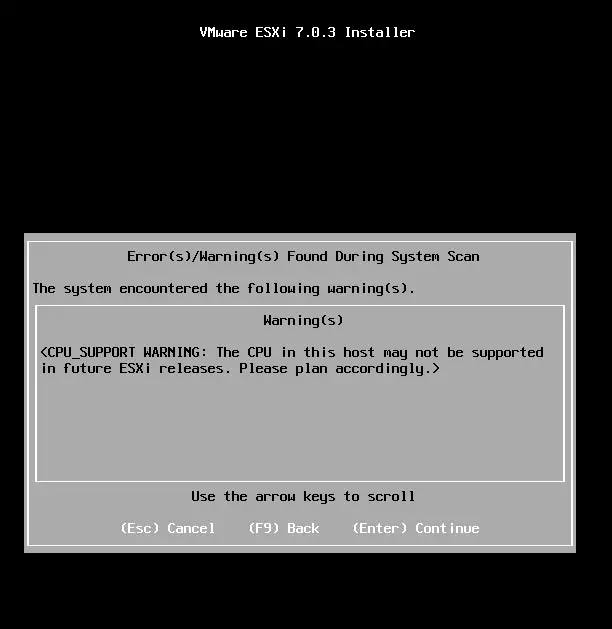

Here the installer is warning me that my i7 is old, imagine that. Normally you will not see this warning unless you are installing on an older CPU. Press Enter to continue.

Hit F11 to install.

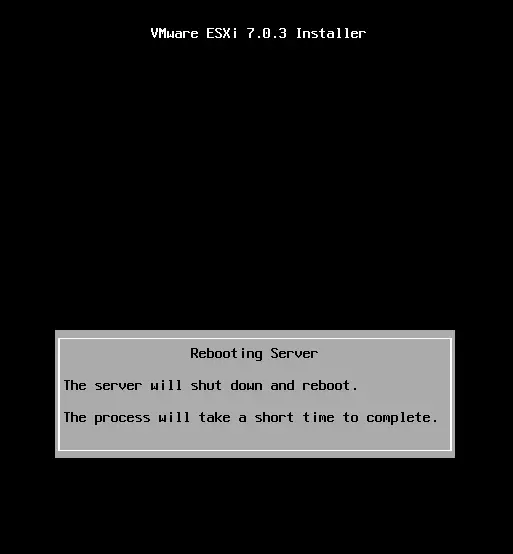

W00t, time to reboot. Press Enter.
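If you ever want to skip the clicking, the same choices can be written down as an ESXi scripted-install (kickstart) file. Below is a rough Python sketch that just builds that text so you can see how each screen maps to a line. The function name `build_kickstart`, the placeholder password, and the DHCP network line are my own assumptions for illustration and not something from the install above, so check the commands against VMware's scripted installation docs before using them.

```python
def build_kickstart(root_password: str, keyboard: str = "US Default") -> str:
    """Return kickstart text mirroring the interactive installer screens.

    Hypothetical helper for illustration only; the values are assumptions.
    """
    lines = [
        "vmaccepteula",                         # the F11 license screen
        "install --firstdisk --overwritevmfs",  # the disk selection screen
        f"keyboard '{keyboard}'",               # the keyboard layout screen
        f"rootpw {root_password}",              # the root password screen
        "network --bootproto=dhcp",             # assumption: DHCP management network
        "reboot",                               # the final reboot prompt
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder password; replace it with your own before real use.
    print(build_kickstart("ChangeMe-Pl3ase!"))
```

Note that `--overwritevmfs` will wipe an existing VMFS datastore on the first disk, so treat this as a lab-only sketch.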

Let's go build some VMs! Until next time.